Deploy a Dockerized Application to Azure Kubernetes Service using Azure YAML Pipelines 4 – Running a Dockerized Application Locally

This is the fourth post in a series where I'm taking a fresh look at how to deploy a dockerized application to Azure Kubernetes Service (AKS) using Azure Pipelines after having previously blogged about this in 2018. The list of posts in this series is as follows:

- Getting Started

- Terraform Development Experience

- Terraform Deployment Pipeline

- Running a Dockerized Application Locally (this post)

- Application Deployment Pipelines

- Telemetry and Diagnostics

In this post I explain the components of the sample application I wrote to accompany this (and the previous) blog series and how to run the application locally. If you want to follow along you can clone / fork my repo here, and if you haven't already done so please take a look at the first post to understand the background, what this series hopes to cover and the tools mentioned in this post. Additionally, this post assumes you have created the infrastructure—or at least the Azure SQL dev database—described in the previous Terraform posts.

MegaStore Application

The sample application is called MegaStore and is about as simple as it gets in terms of a functional application. It's a .NET Core 3.1 application and the idea is that a sales record (beers from breweries local to me if you are interested) is created in the presentation tier which eventually gets persisted to a database via a message queue. The core components are:

- MegaStore.Web: a skeleton ASP.NET Core application that creates a 'sales' record every time the home page is accessed and places it on a message queue.

- NATS message queue: this is an instance of the nats image on Docker Hub using the default configuration.

- MegaStore.SaveSaleHandler: a .NET Core console application that monitors the NATS message queue for new records and saves them to an Azure SQL database using EF Core.
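The flow through these three components can be sketched in a few lines. This is a minimal in-memory illustration in Python rather than the actual MegaStore code: `queue.Queue` stands in for NATS, a plain list stands in for the Azure SQL table, and the names `publish_sale` and `handle_sales` and the record shape are hypothetical.

```python
import queue


def publish_sale(message_queue, description):
    """Stand-in for MegaStore.Web: place a sale record on the queue."""
    sale = {"description": description}  # hypothetical record shape
    message_queue.put(sale)
    return sale


def handle_sales(message_queue, database):
    """Stand-in for MegaStore.SaveSaleHandler: drain the queue and persist each record."""
    saved = 0
    while True:
        try:
            sale = message_queue.get_nowait()
        except queue.Empty:
            return saved
        database.append(sale)  # the real handler saves via EF Core instead
        saved += 1


message_queue = queue.Queue()  # stand-in for the NATS message queue
database = []                  # stand-in for the Sale table

publish_sale(message_queue, "example beer")
publish_sale(message_queue, "another example beer")
saved_count = handle_sales(message_queue, database)
```

The point of the queue is that the web tier never talks to the database directly, so the handler can be stopped, restarted or scaled independently of the website.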

When running locally in Visual Studio 2019 these application components work together using Docker Compose, which is a separate project in the Visual Studio solution. There are two configuration files in use which get merged together:

- docker-compose.yml: contains the configuration for megastore.web and megastore.savesalehandler which is common to running the application both locally and in the deployment pipeline.

- docker-compose.override.yml: contains additional configuration that is only needed locally.
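To give a feel for what "merged together" means, here is a simplified Python sketch of the idea. It is not how Docker Compose is implemented, and Compose applies its own per-option rules, so treat the behaviour here (mappings merged recursively, lists concatenated, single values replaced by the override) as an approximation. The image name, port values and `EXAMPLE_SETTING` are made up for illustration.

```python
import copy


def merge_compose(base, override):
    """Simplified merge: mappings merge recursively, lists are concatenated,
    and single values from the override replace those in the base."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_compose(merged[key], value)
        elif isinstance(value, list) and isinstance(merged.get(key), list):
            merged[key] = merged[key] + copy.deepcopy(value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical content standing in for docker-compose.yml
base = {"services": {"megastore.web": {"image": "megastore-web", "ports": ["80"]}}}

# Hypothetical content standing in for docker-compose.override.yml
override = {
    "services": {
        "megastore.web": {
            "environment": {"EXAMPLE_SETTING": "local"},
            "ports": ["443"],
        }
    }
}

merged = merge_compose(base, override)
```

To see what Compose itself resolves from the two files, running `docker-compose config` in the folder containing them prints the combined configuration.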

There are a few steps you'll need to complete to run MegaStore locally.

Azure SQL dev Database

First, configure the Azure SQL dev database created in the previous post. Using SQL Server Management Studio (SSMS), log in to Azure SQL, where Server name will be something like yourservername-asql.database.windows.net and Login and Password are the values supplied to the asql_administrator_login_name and asql_administrator_login_password Terraform variables. Once logged in, create the following objects using the files in the repo's sql folder (use Ctrl+Shift+M in SSMS to show the Template Parameters dialog to add the dev suffix):

- A SQL login called sales_user_dev based on create-login-template.sql. Make a note of the password.

- In the dev database a user called sales_user and a table called Sale based on configure-database-template.sql.

Note: if you are having problems logging in to Azure SQL from SSMS make sure you have correctly set a firewall rule to allow your local workstation to connect.

Docker Environment File

Next, create a Docker environment file to store the database connection string. In Visual Studio, create a file called db-credentials.env in the docker-compose project. Add the following connection string, all on one line, substituting your own values for the server name and the sales_user_dev password:

DB_CONNECTION_STRING=Server=tcp:yourservername.database.windows.net,1433;Initial Catalog=dev;Persist Security Info=False;User ID=sales_user_dev;Password=yourpassword;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;

Note: since this file contains sensitive data it's important that you don't add it to version control. The .gitignore file that's part of the repo is configured to ignore db-credentials.env.
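Because the whole connection string has to sit on one line, it's easy to drop a field when pasting. The following is a small Python sketch (not part of the repo) that splits the line and reports any of the fields you substituted that are missing. The helper names and the choice of required keys are my own assumptions, and the parsing is deliberately naive: it won't cope with a password containing a semicolon.

```python
REQUIRED_KEYS = ("Server", "Initial Catalog", "User ID", "Password")


def parse_env_line(line):
    """Split NAME=value on the first '=' only, since the value contains more of them."""
    name, _, value = line.strip().partition("=")
    return name, value


def parse_connection_string(connection_string):
    """Turn 'Key=Value;Key=Value;' into a dictionary (naive: no quoted values)."""
    settings = {}
    for part in connection_string.split(";"):
        if not part.strip():
            continue  # skip the empty piece after the trailing ';'
        key, _, value = part.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def missing_keys(settings):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not settings.get(key)]


# Shortened version of the line shown above, with the same placeholder values
example_line = (
    "DB_CONNECTION_STRING=Server=tcp:yourservername.database.windows.net,1433;"
    "Initial Catalog=dev;User ID=sales_user_dev;Password=yourpassword;Encrypt=True;"
)

name, value = parse_env_line(example_line)
settings = parse_connection_string(value)
problems = missing_keys(settings)
```

To check your own file you could read the first line of db-credentials.env and pass it to `parse_env_line` in place of `example_line`.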

Application Insights Key

To collect Application Insights telemetry from a locally running MegaStore you'll need to edit docker-compose.override.yml to contain the instrumentation key for the dev instance of the Application Insights resource that was created in the previous post. You can find this in the Azure Portal in the Overview pane of the Application Insights resource:

I'll write more about Application Insights in a later post but in the meantime if you want to know more see this post from my previous 2018 series. It's largely the same with a few code changes for newer ways of doing things with updated NuGet packages.

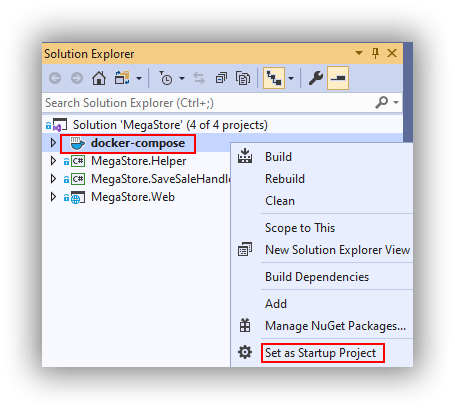

Set docker-compose as Startup

Up and Running

You should now be able to run MegaStore using F5, which should open a localhost+port number web page in your browser. Docker Desktop will need to be running, although I've noticed that newer versions of Visual Studio offer to start it automatically if required. Notice in Visual Studio the handy Containers window that gives some insight into what's happening:

To confirm everything is working, open SSMS and run select-from-sales.sql (in the repo's sql folder) against the dev database. You should see a new 'beer' sales record. To create more records, keep reloading the web page in your browser or run the generate-web-traffic.ps1 PowerShell snippet in the repo's pipeline folder, making sure that its URL is something like http://localhost:32768/ (your port number will likely be different).
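If you'd rather not use PowerShell, the same idea of repeatedly requesting the home page can be sketched in Python. This is my own rough equivalent and not a translation of generate-web-traffic.ps1; the function names, request count and delay are all assumptions.

```python
import time
import urllib.request


def http_get_status(url):
    """Request the page and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status


def generate_traffic(url, count=10, delay_seconds=0.5, fetch=http_get_status):
    """Request the URL `count` times, pausing between requests.

    Each home page request should create one sales record.
    `fetch` can be swapped out, for example to try this without a running site.
    """
    statuses = []
    for _ in range(count):
        statuses.append(fetch(url))
        time.sleep(delay_seconds)
    return statuses


# Example usage (uncomment and substitute your own port number):
# print(generate_traffic("http://localhost:32768/", count=20))
```

Each successful request should show up as another row when you re-run select-from-sales.sql.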

To view Application Insights telemetry (from the Azure Portal) whilst running MegaStore locally, be aware that services running on your network could interfere. In my case I could run Live Metrics and see activity in most of the graphs, but initially I couldn't use the Search feature to see trace and request telemetry (the screenshot is what I was expecting to see):

I initially thought this might be a firewall issue, but it turned out to be the Pi-hole ad blocking service I have running on my network. It's easy to disable Pi-hole for a few minutes, or you can figure out which URLs need whitelisting. The bigger picture, though, is that if you don't see telemetry, particularly in a corporate scenario, you may have to do some investigation.
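One way to start that investigation is to check how the telemetry hostnames resolve from your workstation. The Python sketch below is only a rough aid and rests on assumptions: that a DNS-based blocker answers with an address such as 0.0.0.0 for blocked names (which depends on how it is configured), and that you supply the hostnames yourself, for example from your blocker's query log. The hostname in the usage comment is a placeholder and not a real endpoint.

```python
import socket

# Addresses a DNS-based blocker might hand back for a blocked name (assumption)
SUSPECT_ADDRESSES = {"0.0.0.0", "127.0.0.1"}


def check_hosts(hostnames, resolve=socket.gethostbyname):
    """Resolve each hostname and classify the result.

    Returns a dict of hostname -> "ok", "possibly blocked" or "unresolved".
    """
    results = {}
    for host in hostnames:
        try:
            address = resolve(host)
        except OSError:  # socket.gaierror is a subclass of OSError
            results[host] = "unresolved"
            continue
        if address in SUSPECT_ADDRESSES:
            results[host] = "possibly blocked"
        else:
            results[host] = "ok"
    return results


# Example usage (replace the placeholder with hostnames from your own logs):
# print(check_hosts(["telemetry.example.invalid"]))
```

A result of "ok" only shows that the name resolves; a firewall or proxy could still be dropping the traffic.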

That's it for now! Next time we'll look at deploying MegaStore to AKS using Azure Pipelines.

Cheers -- Graham