Deploy a Dockerized Application to Azure Kubernetes Service using Azure YAML Pipelines 6 – Telemetry and Diagnostics

This is the sixth post in a series where I'm taking a fresh look at how to deploy a dockerized application to Azure Kubernetes Service (AKS) using Azure Pipelines after having previously blogged about this in 2018. The list of posts in this series is as follows:

- Getting Started

- Terraform Development Experience

- Terraform Deployment Pipeline

- Running a Dockerized Application Locally

- Application Deployment Pipelines

- Telemetry and Diagnostics (this post)

One of the problems with running applications in containers in an orchestration system such as Kubernetes is that it can be harder to understand what is happening when things go wrong. So while instrumenting your application for telemetry and diagnostic information should be fairly high on your to-do list anyway, this is even more so when running applications in containers. Whilst there are lots of third-party offerings in the telemetry and diagnostics space, in this post I take a look at what's available for those wanting to stick with the Microsoft experience. If you want to follow along you can clone / fork my repo here, and if you haven't already done so please take a look at the first post to understand the background, what this series hopes to cover and the tools mentioned in this post.

Azure DevOps Environments

If you are following along with this series you may recall that in the last post we configured an Azure DevOps Pipeline Environment for the Kubernetes cluster. It turns out that these are great for quickly taking a peek at the health of the components deployed to a cluster. For example, this is what's displayed for the MegaStore.SaveSaleHandler deployment and pods:

It gets better though because you can drill in to the pods and view the log for each pod. This is the log from the message-queue-deployment pod:

Of course, pipeline environments only really tell you what's going on at that moment in time (or maybe for the previous few minutes depending on how busy the logs are). Capturing enough retrospective data to be useful requires a dedicated tool.

Application Insights

From the docs: Application Insights, a feature of Azure Monitor, is an extensible Application Performance Management (APM) service for developers and DevOps professionals. When using it in conjunction with an application, as we are here, there are several configuration options to address. I give an overview below, but everything is implemented in the sample application here.

Instrumentation keys

When using Application Insights with an application that is deployed to different environments it's important to ensure that telemetry from different environments is not mixed together. The principal technique for this is to have separate Application Insights resource instances, each with its own instrumentation key. Each stage of the deployment pipeline is then configured to make the appropriate instrumentation key available, and the application running in that stage sends telemetry back using that key. The Terraform configuration developed in a previous post created three Application Insights resource instances, one for each of the environments the MegaStore application runs in:

When working with containers probably the easiest way to make an instrumentation key available to applications is via an environment variable named APPINSIGHTS_INSTRUMENTATIONKEY. An ASP.NET Core application component will automatically recognise APPINSIGHTS_INSTRUMENTATIONKEY; in other components it may need to be set manually. The MegaStore application contains a helper class (MegaStore.Helper.Env) to pass environment variables to calling code.
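As a rough illustration of what such a helper might look like, here is a minimal sketch. It is not the actual MegaStore.Helper.Env implementation (see the repo for that): the property name AppInsightsInstrumentationKey is taken from the calling code shown later in this post, while the empty-string fallback is an assumption made for the example.

```csharp
using System;

namespace MegaStore.Helper
{
    // Hypothetical sketch of an environment-variable helper; the real
    // MegaStore.Helper.Env class may be structured differently.
    public static class Env
    {
        // Returns the instrumentation key supplied to the container, or an
        // empty string when the variable is not set (assumed fallback).
        public static string AppInsightsInstrumentationKey =>
            Environment.GetEnvironmentVariable("APPINSIGHTS_INSTRUMENTATIONKEY") ?? string.Empty;
    }
}
```

Keeping the lookup in one place means the variable name appears only once, so calling code never needs to know how the key reaches the container.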

Server-Side Telemetry

Each component of an application that is required to generate server-side telemetry needs, at the very least, to consume one of the Application Insights SDKs as a NuGet package. The MegaStore.Web ASP.NET Core component is configured with Microsoft.ApplicationInsights.AspNetCore and the MegaStore.SaveSaleHandler .NET Core console application component with Microsoft.ApplicationInsights.WorkerService.

These components are configured through the IServiceCollection interface. For a .NET Core application the code is as follows:

```csharp
IServiceCollection services = new ServiceCollection();
services.AddApplicationInsightsTelemetryWorkerService(Env.AppInsightsInstrumentationKey);

// more config here

IServiceProvider serviceProvider = services.BuildServiceProvider();
```

For an ASP.NET Core application the code is similar; however, IServiceCollection is supplied via the ConfigureServices method of the Startup class.
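A minimal sketch of that arrangement follows. It assumes the parameterless AddApplicationInsightsTelemetry() call is used, so that the SDK picks up APPINSIGHTS_INSTRUMENTATIONKEY by itself. The actual Startup class in MegaStore.Web will contain more than this.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

namespace MegaStore.Web
{
    public class Startup
    {
        // ASP.NET Core calls this method and supplies the IServiceCollection.
        public void ConfigureServices(IServiceCollection services)
        {
            // With no arguments the SDK resolves the instrumentation key from
            // configuration, including the APPINSIGHTS_INSTRUMENTATIONKEY
            // environment variable.
            services.AddApplicationInsightsTelemetry();

            // Other service registrations (MVC, application services) go here.
        }

        public void Configure(IApplicationBuilder app)
        {
            // Request pipeline configuration is omitted from this sketch.
        }
    }
}
```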

Client Side Telemetry

For web applications you may want to generate client-side usage telemetry and in ASP.NET Core applications this is achieved through two configuration steps:

- In _ViewImports.cshtml add @inject Microsoft.ApplicationInsights.AspNetCore.JavaScriptSnippet JavaScriptSnippet

- In _Layout.cshtml add @Html.Raw(JavaScriptSnippet.FullScript) at the end of the <head> section but before any other script.

You can read more about this here.

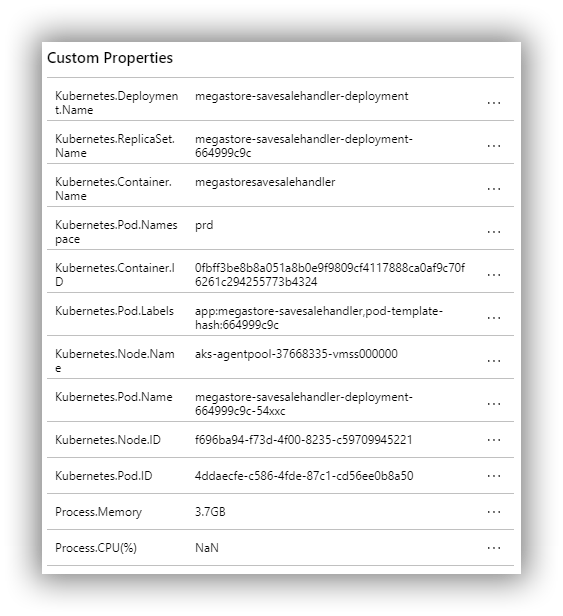

Kubernetes Enhancements

Since the deployed version of MegaStore runs under Kubernetes we can take advantage of the Microsoft.ApplicationInsights.Kubernetes NuGet package to enhance the standard Application Insights telemetry with Kubernetes-related information. With the NuGet package installed you simply call the AddApplicationInsightsKubernetesEnricher() extension method on IServiceCollection.

Visualising Application Components with Cloud Role

Application Insights uses an Application Map to visualise the components of a system. It will automatically name a component but it's a good idea to set this explicitly. This is achieved through the use of a CloudRoleTelemetryInitializer class, which you will need to add to each component that needs tracking:

```csharp
using Microsoft.ApplicationInsights.Channel;
using Microsoft.ApplicationInsights.Extensibility;

namespace MegaStore.Web
{
    public class CloudRoleTelemetryInitializer : ITelemetryInitializer
    {
        public void Initialize(ITelemetry telemetry)
        {
            telemetry.Context.Cloud.RoleName = "MegaStore.Web";
        }
    }
}
```

The important part of the code above is setting the RoleName. A CloudRoleTelemetryInitializer class is registered with IServiceCollection using this line of code:

```csharp
services.AddSingleton<ITelemetryInitializer, CloudRoleTelemetryInitializer>();
```

Custom Telemetry

Adding your own custom telemetry is achieved with the TelemetryClient class. In an ASP.NET Core application TelemetryClient can be obtained in a controller through dependency injection as described here. In a .NET Core console app it's resolved from IServiceProvider. The complete implementation in MegaStore.SaveSaleHandler is as follows:

```csharp
// Class-level declaration
private static TelemetryClient _telemetryClient;

IServiceCollection services = new ServiceCollection();
services.AddApplicationInsightsTelemetryWorkerService(Env.AppInsightsInstrumentationKey);
services.AddApplicationInsightsKubernetesEnricher();
services.AddSingleton<ITelemetryInitializer, CloudRoleTelemetryInitializer>();

IServiceProvider serviceProvider = services.BuildServiceProvider();

_telemetryClient = serviceProvider.GetRequiredService<TelemetryClient>();
```

There are then several options for generating telemetry including TelemetryClient.TrackEvent, TelemetryClient.TrackTrace and TelemetryClient.TrackException.
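To give a feel for how these three methods might be combined, here is a hedged sketch. The SaleTelemetry class, the RecordSale method, the SaleSaved event name and the SaleId property are all hypothetical names invented for this example and are not taken from MegaStore.SaveSaleHandler; only the TelemetryClient calls themselves come from the Application Insights SDK.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.ApplicationInsights;
using Microsoft.ApplicationInsights.DataContracts;

// Hypothetical wrapper, for illustration only.
public static class SaleTelemetry
{
    public static void RecordSale(TelemetryClient telemetryClient, string saleId, Action saveSale)
    {
        // Custom property attached to the event and the exception
        // ("SaleId" is an assumed name).
        var properties = new Dictionary<string, string> { ["SaleId"] = saleId };

        // A trace is a free-text diagnostic message with a severity level.
        telemetryClient.TrackTrace($"Saving sale {saleId}", SeverityLevel.Information);

        try
        {
            saveSale();

            // An event records that something of business interest happened.
            telemetryClient.TrackEvent("SaleSaved", properties);
        }
        catch (Exception ex)
        {
            // Record the failure, then rethrow so the caller can still handle it.
            telemetryClient.TrackException(ex, properties);
            throw;
        }
    }
}
```

Telemetry is buffered before being sent, so in a short-lived console process it can be worth calling telemetryClient.Flush() before the process exits.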

Generating Data

With all this configuration out of the way we can now start generating data. The first step is to find the IP addresses of the MegaStore.Web home pages for the qa and prd environments. One way is to bring up the Kubernetes Dashboard with the command az aks browse --resource-group yourResourceGroup --name yourAksCluster, substituting your own resource group and cluster names.

Now switch to the desired namespace and navigate to Discovery and Load Balancing > Services to see the IP address of megastore-web-service:

My preferred way of generating traffic to these web pages is with a PowerShell snippet run from Azure Cloud Shell (which stops your own machine from being overloaded if you really crank things up by reducing the Start-Sleep value):

```powershell
while ($true)
{
    (New-Object Net.WebClient).DownloadString("http://51.11.9.183/")
    Start-Sleep -Milliseconds 1000
}
```

As things stand you will probably mostly get ‘routine’ telemetry being returned. If you want to simulate exceptions you can change the name of one of the columns in the database table dbo.Sale.

Visualising Data

Without any further configuration there are several areas of Application Insights that will now start displaying data. Here is just a small selection of what's available:

Overview Panel

Application Map Panel

Live Metrics Panel

Search Panel

Whilst all these overview representations of data are very useful, it is in the detail where things perhaps get the most interesting. Drilling in to an individual Trace, for example, shows a useful set of standard Trace properties:

And also a set of custom Trace properties courtesy of Microsoft.ApplicationInsights.Kubernetes:

Drilling in to a synthetic exception (due to a changed column name in the database) provides details of the exception and also the stack trace:

These are just a few examples of what's available and a fuller list is available here. And this is just the application monitoring side of the wider Azure Monitor platform. A good starting point to see where Application Insights fits in to the bigger picture is the overview page here.

That's it folks!

That's it for this mini series! As I said in the first post, the ideas presented in this series are not meant to be the definitive, one and only, way of deploying a Dockerized ASP.NET Core application to Azure Kubernetes Service. Rather, they are intended to show my journey and hopefully give you ideas for doing things differently and better. To give you one example, I'm uneasy about having Kubernetes deployments described in static YAML files. Making modifications by hand somehow feels error-prone and inefficient. There are other options though, and this post has a good explanation of the possibilities. From this post we see that there is a tool called Kustomize, and then we see that there is an Azure DevOps Kubernetes manifest task that uses Kustomize. I've not explored this task yet but it looks like a good next step to understand how to evolve Kubernetes deployments.

If you have your own ideas for evolving the ideas in this series do leave a comment!

Cheers -- Graham