Continuous Delivery with TFS / VSTS – Automated Acceptance Tests with SpecFlow and Selenium Part 2

In part one of this two-part mini-series I covered how to get acceptance tests written using Selenium working as part of the deployment pipeline. That post focused on configuring the moving parts needed to get some existing acceptance tests up and running with the new Release Management tooling in TFS or VSTS. In this post I make good on my promise to explain how to use SpecFlow and Selenium together to write business-readable web tests, as opposed to tests that probably only make sense to a developer.

If you haven't used SpecFlow before then I highly recommend taking the time to understand what it can do. The SpecFlow website has a good getting started guide here; however, the best tutorial I have found is Jason Roberts' Automated Business Readable Web Tests with Selenium and SpecFlow Pluralsight course. Pluralsight is, of course, a paid-for service, but if you don't have a subscription you might consider taking up the free trial just to watch Jason's course.

As I started to integrate SpecFlow into my existing Contoso University sample application for this post, I realised that the way I had originally written the Selenium-based page object model, using a fluent API, didn't work well with SpecFlow. Consequently I rewrote the code to be more in line with the style used in Jason's Pluralsight course. Both versions are on GitHub: you can find the 'before' code here and the 'after' code here. The instructions that follow are written from the perspective of someone updating the 'before' code.

Install SpecFlow Components

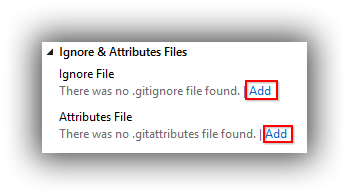

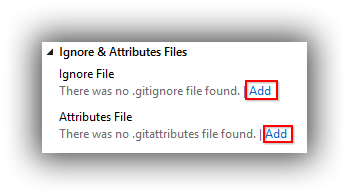

To support SpecFlow development, components need to be installed at two levels. With the Contoso University sample application open in Visual Studio (this isn't actually necessary for the first item):

- At the Visual Studio application level the SpecFlow for Visual Studio 2015 extension should be installed.

- At the Visual Studio solution level the ContosoUniversity.Web.AutoTests project needs to have the SpecFlow NuGet package installed.

If you are using MSTest, you may also find that the specFlow section of App.config in ContosoUniversity.Web.AutoTests needs to have a <unitTestProvider name="MsTest" /> element added.

Update the Page Object Model

To see all the changes I made to update my page object model to a style that works well with SpecFlow, please examine the 'after' code here. To illustrate the style of the updated model, I created a CreateDepartmentPage class in ContosoUniversity.Web.SeFramework with the following code:

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Support.PageObjects;

namespace ContosoUniversity.Web.SeFramework
{
    public class CreateDepartmentPage
    {
        // Form elements, wired up by PageFactory.InitElements in the constructor
        [FindsBy(How = How.Id, Using = "Name")]
        private IWebElement _name;

        [FindsBy(How = How.Id, Using = "Budget")]
        private IWebElement _budget;

        [FindsBy(How = How.Id, Using = "StartDate")]
        private IWebElement _startDate;

        [FindsBy(How = How.Id, Using = "InstructorID")]
        private IWebElement _administrator;

        [FindsBy(How = How.XPath, Using = "//input[@value='Create']")]
        private IWebElement _submit;

        public CreateDepartmentPage()
        {
            PageFactory.InitElements(Driver.Instance, this);
        }

        // Browse to the Create Department page and return a page object for it
        public static CreateDepartmentPage NavigateTo()
        {
            Driver.Instance.Navigate().GoToUrl("http://" + Driver.BaseAddress + "/Department/Create");
            return new CreateDepartmentPage();
        }

        // Write-only properties: each one types a value into its form field
        public string Name
        {
            set { _name.SendKeys(value); }
        }

        public decimal Budget
        {
            set { _budget.SendKeys(value.ToString()); }
        }

        public DateTime StartDate
        {
            set { _startDate.SendKeys(value.ToString()); }
        }

        public string Administrator
        {
            set { _administrator.SendKeys(value); }
        }

        // Submit the form and return a page object for the Departments page shown next
        public DepartmentsPage Create()
        {
            _submit.Click();
            return new DepartmentsPage();
        }
    }
}
```

The key difference is that, rather than exposing a fluent API, the model now consists of separate properties that map more easily to SpecFlow steps.
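To make the design difference concrete, here is a minimal sketch in Python rather than the post's C# framework. It shows a page object with one write-only property per form field, so that each SpecFlow step becomes a single assignment. FakeElement is a hypothetical stand-in for a Selenium web element, which lets the sketch run without a browser.

```python
class FakeElement:
    """Hypothetical stand-in for a Selenium web element; it records what it receives."""

    def __init__(self):
        self.typed = []
        self.clicked = False

    def send_keys(self, text):
        self.typed.append(text)

    def click(self):
        self.clicked = True


class CreateDepartmentPage:
    """Page object exposing one write-only property per form field."""

    def __init__(self, elements):
        # Maps field names to element objects (PageFactory does this job in the C# version).
        self._elements = elements

    def _set_name(self, value):
        self._elements["Name"].send_keys(value)

    def _set_budget(self, value):
        # Like the C# Budget setter, convert the number to text before typing it.
        self._elements["Budget"].send_keys(str(value))

    # Setter-only properties: assignment types into the field; reading raises AttributeError.
    name = property(fset=_set_name)
    budget = property(fset=_set_budget)

    def create(self):
        self._elements["Submit"].click()


# Each Given/When step maps onto exactly one statement.
elements = {"Name": FakeElement(), "Budget": FakeElement(), "Submit": FakeElement()}
page = CreateDepartmentPage(elements)
page.name = "Engineering"
page.budget = 1400
page.create()
```

This keeps the step definitions trivial, at the cost of the method chaining a fluent API offers.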

Add a Basic SpecFlow Test

To illustrate some of the power of SpecFlow we'll first add a basic test and then make some improvements to it. The test should be added to ContosoUniversity.Web.AutoTests. If you are using my 'before' code you'll want to delete the existing C# class files that contain the tests written for the earlier page object model.

- Right-click ContosoUniversity.Web.AutoTests and choose Add > New Item. Select SpecFlow Feature File and call it Department.feature.

- Replace the template text in Department.feature with the following:

```gherkin
Feature: Department
    In order to expand Contoso University
    As an administrator
    I want to be able to create a new Department

Scenario: New Department Created Successfully
    Given I am on the Create Department page
    And I enter a randomly generated Department name
    And I enter a budget of £1400
    And I enter a start date of today
    And I enter an administrator with name of Kapoor
    When I submit the form
    Then I should see a new department with the specified name
```

- Right-click Department.feature in the code editor and choose Generate Step Definitions, which opens the step definition generation dialog.

- By default this will create a DepartmentSteps.cs file that you should save in ContosoUniversity.Web.AutoTests.

- DepartmentSteps.cs now needs to be fleshed out with code that refers back to the page object model. The complete class is as follows:

```csharp
using System;
using TechTalk.SpecFlow;
using ContosoUniversity.Web.SeFramework;
using Microsoft.VisualStudio.TestTools.UnitTesting;

namespace ContosoUniversity.Web.AutoTests
{
    [Binding]
    public class DepartmentSteps
    {
        private CreateDepartmentPage _createDepartmentPage;
        private DepartmentsPage _departmentsPage;
        private string _departmentName;

        [Given(@"I am on the Create Department page")]
        public void GivenIAmOnTheCreateDepartmentPage()
        {
            _createDepartmentPage = CreateDepartmentPage.NavigateTo();
        }

        [Given(@"I enter a randomly generated Department name")]
        public void GivenIEnterARandomlyGeneratedDepartmentName()
        {
            // Remember the generated name so the Then step can look for it
            _departmentName = Guid.NewGuid().ToString();
            _createDepartmentPage.Name = _departmentName;
        }

        [Given(@"I enter a budget of £(.*)")]
        public void GivenIEnterABudgetOf(int p0)
        {
            _createDepartmentPage.Budget = p0;
        }

        [Given(@"I enter a start date of today")]
        public void GivenIEnterAStartDateOfToday()
        {
            _createDepartmentPage.StartDate = DateTime.Today;
        }

        [Given(@"I enter an administrator with name of Kapoor")]
        public void GivenIEnterAnAdministratorWithNameOfKapoor()
        {
            _createDepartmentPage.Administrator = "Kapoor";
        }

        [When(@"I submit the form")]
        public void WhenISubmitTheForm()
        {
            _departmentsPage = _createDepartmentPage.Create();
        }

        [Then(@"I should see a new department with the specified name")]
        public void ThenIShouldSeeANewDepartmentWithTheSpecifiedName()
        {
            Assert.IsTrue(_departmentsPage.DoesDepartmentExistWithName(_departmentName));
        }

        // Hooks: start a browser before each scenario and close it afterwards
        [BeforeScenario]
        public void Init()
        {
            Driver.Initialize();
        }

        [AfterScenario]
        public void Cleanup()
        {
            Driver.Close();
        }
    }
}
```

If you take a moment to examine the code you'll see the following features:

- The presence of methods with the BeforeScenario and AfterScenario attributes to initialise the test and clean up afterwards.

- Because Department.feature specifies a value for the budget, the generated step method has a (poorly named) parameter, which makes the step reusable.

- Although we also specified a name for the administrator, that step method wasn't parameterised.
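How a binding picks up the budget value, and how the hooks wrap the steps, can be modelled roughly as follows. This is a simplified Python sketch, not SpecFlow's implementation:

- Each binding pairs a regular expression with a function.
- The sketch uses re.fullmatch, so the whole step text must match.
- Captured groups are passed as arguments, with int() standing in for SpecFlow's conversion to the int parameter.
- try/finally gives before/after behaviour like the Init and Cleanup hooks.

The functions are hypothetical and only log what the C# methods would do.

```python
import re

log = []


def init():
    log.append("Driver.Initialize")


def cleanup():
    log.append("Driver.Close")


def enter_budget(p0):
    # The regex capture is text; int() plays the role of SpecFlow's conversion to int.
    log.append(f"Budget = {int(p0)}")


def enter_administrator_kapoor():
    log.append("Administrator = Kapoor")


# Hypothetical binding table mirroring two methods in DepartmentSteps.cs.
BINDINGS = [
    (r"I enter a budget of £(.*)", enter_budget),
    (r"I enter an administrator with name of Kapoor", enter_administrator_kapoor),
]


def run_scenario(steps):
    """Run each step through the first binding whose regex matches the whole step text."""
    init()  # stands in for [BeforeScenario]
    try:
        for step in steps:
            for pattern, func in BINDINGS:
                match = re.fullmatch(pattern, step)
                if match:
                    func(*match.groups())
                    break
            else:
                raise LookupError(f"No binding for step: {step}")
    finally:
        cleanup()  # stands in for [AfterScenario]; finally makes it run even if a step fails


run_scenario(["I enter a budget of £1400",
              "I enter an administrator with name of Kapoor"])
```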

As things stand, though, this test is complete. You should see a NewDepartmentCreatedSuccessfully test in Test Explorer which, when run (don't forget IIS Express needs to be running), should turn green.

Refining the SpecFlow Test

We can make some improvements to DepartmentSteps.cs as follows:

- The GivenIEnterABudgetOf method can have its parameter renamed to budget.

- The GivenIEnterAnAdministratorWithNameOfKapoor method can be parameterised by changing it as follows:

```csharp
[Given(@"I enter an administrator with name of (.*)")]
public void GivenIEnterAnAdministratorWithNameOf(string administrator)
{
    _createDepartmentPage.Administrator = administrator;
}
```

Note that this change affects both the attribute's regular expression and the method name.
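A quick way to see what the parameterised regular expression buys you is to compare the generated literal pattern with the edited one. In this Python sketch (again a simplification that requires a full match), the literal pattern only recognises Kapoor, while the (.*) version captures whatever name the feature file supplies. captured_administrator is a hypothetical helper.

```python
import re

# The step regex as generated, and as edited to take a parameter.
LITERAL = r"I enter an administrator with name of Kapoor"
PARAMETERISED = r"I enter an administrator with name of (.*)"


def captured_administrator(pattern, step_text):
    """Return the captured name ('' if the pattern has no group), or None if the step doesn't match."""
    match = re.fullmatch(pattern, step_text)
    if match is None:
        return None
    return match.group(1) if match.groups() else ""


step = "I enter an administrator with name of Abercrombie"
print(captured_administrator(LITERAL, step))        # None
print(captured_administrator(PARAMETERISED, step))  # Abercrombie
```

With the edited binding, a feature file can reuse the same step for any administrator without any new C# code.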

Updating the Build Definition

To start integrating SpecFlow tests into the continuous delivery pipeline, the first step is to update the build definition. Specifically, the AcceptanceTests artifact created in the previous post needs amending to include TechTalk.SpecFlow.dll as a new item in the Contents property. A successful build should result in this DLL appearing in the Artifacts Explorer window for the AcceptanceTests artifact.
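To sanity-check which files the updated Contents list will pick up, the selection can be approximated as below. This sketch rests on assumptions: it uses Python's fnmatch with case-insensitive matching rather than the task's own pattern engine, and the staging-directory listing is hypothetical. Note that WebDriver.* also catches WebDriver.Support.dll, which contains the PageFactory and FindsBy types the page object model relies on.

```python
from fnmatch import fnmatchcase

# Contents patterns for the AcceptanceTests artifact, including the new SpecFlow entry.
CONTENTS = [
    "ContosoUniversity.Web.AutoTests.*",
    "ContosoUniversity.Web.SeFramework.*",
    "Microsoft.VisualStudio.QualityTools.UnitTestFramework.*",
    "WebDriver.*",
    "TechTalk.SpecFlow.dll",
]


def select_artifact_files(file_names, patterns=CONTENTS):
    """Return the file names matching any pattern, ignoring case (an assumption)."""
    lowered = [pattern.lower() for pattern in patterns]
    return [name for name in file_names
            if any(fnmatchcase(name.lower(), pattern) for pattern in lowered)]


# Hypothetical contents of $(build.stagingDirectory).
staging = [
    "ContosoUniversity.Web.AutoTests.dll",
    "ContosoUniversity.Web.AutoTests.pdb",
    "ContosoUniversity.Web.SeFramework.dll",
    "ContosoUniversity.Web.dll",
    "TechTalk.SpecFlow.dll",
    "WebDriver.dll",
    "WebDriver.Support.dll",
]

artifact = select_artifact_files(staging)
```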

Update the Test Plan with a new Test Case

If you are running your tests using the Test Assembly method, you should find that they just run without any further amendment. If, on the other hand, you are using the Test Plan method, you will need to do three things. First, remove the test cases based on the old Selenium tests. Then add a new test case (called New Department Created Successfully to match the scenario name). Finally, edit it in Visual Studio to associate it with the automated test.

And Finally

Do be aware that I've only really scratched the surface of what SpecFlow can do, and there's plenty more functionality for you to explore. Whilst it's not really the subject of this post, it's worth pointing out that if you adopt acceptance tests as part of your continuous delivery pipeline you should do so in a considered way. If you don't, it's all too easy to wake up one day to find you have hundreds of tests which take many hours to run and require a significant amount of time to maintain. To this end, do have a listen to Dave Farley's QCon talk on Acceptance Testing for Continuous Delivery.

Cheers -- Graham

Continuous Delivery with TFS / VSTS – Automated Acceptance Tests with SpecFlow and Selenium Part 1

In the previous post in this series we covered using Release Management to deploy PowerShell DSC scripts to target nodes that both configured the nodes for web and database roles and then deployed our sample application. With this done we are now ready to do useful work with our deployment pipeline, and the big task for many teams is going to be running automated acceptance tests to check that previously developed functionality still works as expected as an application undergoes further changes.

I covered how to create a page object model framework for running Selenium web tests in my previous blog series on continuous delivery here. The good news is that nothing much has changed and the code still runs fine, so to learn about how to create a framework please refer to this post. However one thing I didn't cover in the previous series was how to use SpecFlow and Selenium together to write business readable web tests and that's something I'll address in this series. Specifically, in this post I'll cover getting acceptance tests working as part of the deployment pipeline and in the next post I'll show how to integrate SpecFlow.

What We're Hoping to Achieve

The acceptance tests are written using Selenium, which can automate 'driving' a web browser to navigate to pages, fill in forms, click on submit buttons and so on. These tests are created on developer workstations and can therefore run there. Typically, though, the number of tests quickly mounts, making it impractical to run them locally. In any case, running them locally is of limited use, since what we really want to know is whether code changes checked in by team members have broken any tests.

The solution is to run the tests in an environment that is part of the deployment pipeline. In this blog series I call that the DAT (development automated test) environment, which is the first stage of the pipeline after the build process. As I've explained previously in this blog series, the DAT environment should be configured in such a way as to minimise the possibility of tests failing due to factors other than code issues. I solve this for web applications by having the database, web site and test browser all running on the same node.

Make Sure the Tests Work Locally

Before attempting to get automated tests running in the deployment pipeline, it's a good idea to confirm that the tests run locally. The steps for doing this (in my case using Visual Studio 2015 Update 2 on a workstation with Firefox already installed) are as follows:

- If you don't already have a working Contoso University sample application available:

- Download the code that accompanies this post from my GitHub site here.

- Unblock and unzip the solution to a convenient location and then build it to restore NuGet packages.

- In ContosoUniversity.Database open ContosoUniversity.publish.xml and then click on Publish to create the ContosoUniversity database in LocalDB.

- Run ContosoUniversity.Web (and in so doing confirm that Contoso University is working). Then, leaving the application running in the browser, switch back to Visual Studio and choose Detach All from the Debug menu. This leaves IIS Express running, which Firefox needs in order to navigate to any of the application's URLs.

- From the Test menu navigate to Playlist > Open Playlist File and open AutoWebTests.playlist which lives under ContosoUniversity.Web.AutoTests.

- In Test Explorer two tests (Can_Navigate_To_Departments and Can_Create_Department) should now appear, and these can be run in the usual way. Firefox should open and run each test, which will hopefully turn green.

Edit the Build to Create an Acceptance Tests Artifact

The first step to getting tests running as part of the deployment pipeline is to edit the build to create an artifact containing all the files needed to run the tests on a target node. This is achieved by editing the ContosoUniversity.Rel build definition and adding a Copy Publish Artifact task. This should be configured as follows:

- Copy Root = $(build.stagingDirectory)

- Contents =

- ContosoUniversity.Web.AutoTests.*

- ContosoUniversity.Web.SeFramework.*

- Microsoft.VisualStudio.QualityTools.UnitTestFramework.*

- WebDriver.*

- Artifact Name = AcceptanceTests

- Artifact Type = Server

After you queue a successful build, the AcceptanceTests artifact should appear on the build's Artifacts tab.

Edit the Release to Deploy the AcceptanceTests Artifact

Next up is copying the AcceptanceTests artifact to a target node, in my case a server called PRM-DAT-AIO. This is no different from the previous post, where we copied the database and website artifacts. It is a case of adding a Windows Machine File Copy task to the DAT environment of the ContosoUniversity release and configuring it appropriately.

Deploy a Test Agent

The good news for those of us working in the VSTS and TFS 2015 worlds is that test controllers are a thing of the past, because Agents for Microsoft Visual Studio 2015 communicate with VSTS or TFS 2015 directly. The agent needs to be deployed to the target node, and this is handled by adding a Visual Studio Test Agent Deployment task to the DAT environment. The configuration of this task is very straightforward (see here), although you will probably want to create a dedicated domain service account for the agent service to run under. The process differs slightly between VSTS and TFS 2015 Update 2.1: in VSTS the machine details can be entered directly in the task, whereas in TFS you need to create a Test Machine Group.

Running Tests -- Test Assembly Method

To actually run the acceptance tests, we need to add a Run Functional Tests task to the DAT environment directly after the Visual Studio Test Agent Deployment task. Examining this task reveals two ways to select the tests to be run: Test Assembly or Test Plan. Test Assembly is the more straightforward and needs very little configuration:

- Test Machine Group (TFS) or Machines (VSTS) = Group name or $(TargetNode-DAT-AIO)

- Test Drop Location = $(TargetNodeTempFolder)\AcceptanceTests

- Test Selection = Test Assembly

- Test Assembly = **\*test*.dll

- Test Run Title = Acceptance Tests

As you will see, though, there are many more options that can be configured; see the help page here for details.
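To get a feel for which files the Test Assembly pattern **\*test*.dll selects from the drop folder, here is a rough Python approximation. It assumes case-insensitive matching (typical on Windows) and that ** means any folder depth, so only the file name is tested. The drop-folder listing is hypothetical. Under those assumptions the pattern also selects Microsoft.VisualStudio.QualityTools.UnitTestFramework.dll alongside the test assembly. That is probably harmless because it contains no tests, but a narrower pattern would make the intent explicit.

```python
from fnmatch import fnmatchcase
from pathlib import PureWindowsPath


def matches_test_assembly(path, file_pattern="*test*.dll"):
    """Approximate '**\\*test*.dll': any folder depth, case-insensitive file-name match."""
    name = PureWindowsPath(path).name.lower()
    return fnmatchcase(name, file_pattern.lower())


# Hypothetical listing of $(TargetNodeTempFolder)\AcceptanceTests.
drop_folder = [
    r"ContosoUniversity.Web.AutoTests.dll",
    r"bin\ContosoUniversity.Web.SeFramework.dll",
    r"Microsoft.VisualStudio.QualityTools.UnitTestFramework.dll",
    r"TechTalk.SpecFlow.dll",
    r"WebDriver.dll",
]

selected = [path for path in drop_folder if matches_test_assembly(path)]
```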

Before you create a build to try these settings out, you will need to make sure that the node the tests are to be run from is specified in Driver.cs, which lives in ContosoUniversity.Web.SeFramework. You will also need to ensure that Firefox is installed on this node.

I struggled to reliably automate the installation of Firefox. That turned out to be just as well, because I was trying to automate the installation of the latest version from the Mozilla site, which turns out to be a bad idea. The latest version at the time of writing (47.0) doesn't work with the latest version of Selenium (2.53.0). Efforts to automate the installation of Firefox therefore need to centre on installing a Selenium-compatible version. This actually makes things easier, since the installer can be pre-downloaded to a known location. I ran out of time and installed Firefox 46.1 (compatible with Selenium 2.53.0) manually, but this is something I'll revisit. Disabling automatic updates in Firefox is also essential to ensure you don't get out of sync with Selenium.
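Because Firefox and Selenium versions have to be kept in step, an automated installation could check the browser version before any tests run. A minimal sketch of such a check follows. The cut-off is an assumption drawn only from the versions reported above for Selenium 2.53.0 (46.1 works, 47.0 does not). How you obtain the installed version string on the node is left out.

```python
def parse_version(text):
    """Turn a dotted version string such as '46.0.1' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


# Assumption: based only on the versions reported in this post for Selenium 2.53.0,
# where 46.1 worked and 47.0 did not, so treat 47.0 and later as incompatible.
FIRST_INCOMPATIBLE_FIREFOX = parse_version("47.0")


def firefox_compatible_with_selenium_2_53(version_text):
    """Return True if the Firefox version is below the first known-incompatible release."""
    return parse_version(version_text) < FIRST_INCOMPATIBLE_FIREFOX
```

A check like this can fail a deployment early and clearly, rather than letting every browser test fail.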

When you finally get your tests running, you can see the results from the web portal by navigating to Test > Runs, where the run should hopefully appear under the Acceptance Tests title.

Running Tests -- Test Plan Method

The first question you might ask about the Test Plan method is why bother if the Test Assembly method works? Of course, if the Test Assembly method gives you what you need then feel free to stick with it. However, you might need the Test Plan method if a test plan already exists and you want to continue using it. Another reason is greater flexibility in choosing which tests to run. For example, you might organise your tests into logical areas using static suites and then use query-based suites to choose subsets of tests, perhaps with the use of tags.

To use the Test Plan method, in the web portal navigate to Test > Test Plan and then:

- Use the green cross to create a new test plan called Acceptance Tests.

- Use the down arrow next to Acceptance Tests to create a new static suite called Department.

- Within the Department suite use the green cross to create two new test cases called Can_Navigate_To_Departments and Can_Create_Department (no other configuration necessary).

- Making a note of the test case IDs, switch to Visual Studio and in Team Explorer > Work Items search for each test case in turn to open it for editing.

- For each test case, click on Associated Automation (this looks slightly different in VSTS and TFS) and then click on the ellipsis to bring up the Choose Test dialogue, where you can choose the correct test for the test case.

- With everything saved switch back to the web portal Release hub and edit the Run Functional Tests task as follows:

- Test Selection = Test Plan

- Test Plan = Acceptance Tests

- Test Suite = Acceptance Tests\Department

With the configuration complete, trigger a new release. If everything has worked, you should be able to navigate to Test > Runs and see output similar to that of the Test Assembly method.

That's it for now. In the next post in this series I'll look at adding SpecFlow into the mix to make the acceptance tests business readable.

Cheers -- Graham

Continuous Delivery with TFS / VSTS – Server Configuration and Application Deployment with Release Management

At this point in my blog series on Continuous Delivery with TFS / VSTS we have finally reached the stage where we are ready to start using the new web-based release management capabilities of VSTS and TFS. The functionality has been in VSTS for a little while now but only came to TFS with Update 2 of TFS 2015 which was released at the end of March 2016.

Don't tell my wife but I'm having a torrid love affair with the new TFS / VSTS Release Management. It's flippin' brilliant! Compared to the previous WPF desktop client it's a breath of fresh air: easy to understand, quick to set up and a joy to use. Sure there are some improvements that could be made (and these will come in time) but for the moment, for a relatively new product, I'm finding the experience extremely agreeable. So let's crack on!

Setting the Scene

The previous posts in this series set the scene for this post, but I'll briefly summarise here. We'll be deploying the Contoso University sample application, which consists of an ASP.NET MVC website and a SQL Server database that I've converted to a SQL Server Database Project, so deployment is by DACPAC. We'll be deploying to three environments (DAT, DQA and PRD), as I explain here. As well as deploying the application, we'll first make sure the environments are correctly configured with PowerShell DSC, using an adaptation of the procedure I describe here.

My demo environment in Azure is configured as a Windows domain and includes an instance of TFS 2015 Update 2. I'll be using it for this post as it's the lowest common denominator, although I will point out any VSTS specifics where needed. We'll be deploying to newly minted Windows Server 2012 R2 VMs which have been joined to the domain, configured with WMF 5.0 and had their domain firewall turned off; see here for details. (Note that if you are using versions of Windows Server earlier than 2012 that don't have remote management turned on, you have a bit of extra work to do.) My TFS instance is hosting the build agent, and as such the agent can 'see' all the machines in the domain. I'm using Integrated Security to allow the website to talk to the database, with three different domain accounts (CU-DAT, CU-DQA and CU-PRD) to illustrate passing different credentials to different environments. I assume you have these set up in advance.

As far as development tools are concerned I'm using Visual Studio 2015 Update 2 with PowerShell Tools installed and Git for version control within a TFS / VSTS team project. It goes without saying that for each release I'm building the application only once and as part of the build any environment-specific configuration is replaced with tokens. These tokens are replaced with the correct values for that environment as that same tokenised build moves through the deployment pipeline.
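At its core, the build-once, tokenise-then-replace approach is simple string substitution, sketched below in Python. The __NAME__ delimiters are an assumption, so check the token format your tokenising tool expects. The connection-string template and the per-environment values are hypothetical. The token names DATA_SOURCE and INITIAL_CATALOG are those used in Website.ps1. Failing on any leftover token catches a value that was not set for an environment.

```python
import re


def detokenize(text, tokens, prefix="__", suffix="__"):
    """Replace prefix+NAME+suffix placeholders with per-environment values.

    Raises ValueError if any placeholder is left unreplaced.
    """
    for name, value in tokens.items():
        text = text.replace(f"{prefix}{name}{suffix}", value)
    leftover = re.findall(re.escape(prefix) + r"[A-Z_]+" + re.escape(suffix), text)
    if leftover:
        raise ValueError(f"Unreplaced tokens: {leftover}")
    return text


# Hypothetical tokenised connection string produced once by the build.
TEMPLATE = "Data Source=__DATA_SOURCE__;Initial Catalog=__INITIAL_CATALOG__;Integrated Security=True"

# The same template receives different values as it moves through the pipeline.
dat = detokenize(TEMPLATE, {"DATA_SOURCE": "PRM-DAT-AIO", "INITIAL_CATALOG": "ContosoUniversity"})
```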

Writing Server Configuration Code Alongside Application Code

A key concept I am promoting in this blog post series is that configuring the servers that your application will run on should not be an afterthought and neither should it be a manual click-through-GUI process. Rather, you should be configuring your servers through code and that code should be written at the same time as you write your application code. Furthermore the server configuration code should live with your application code. To start then we need to configure Contoso University for this way of working. If you are following along you can get the starting point code from here.

- Open the ContosoUniversity solution in Visual Studio and add new folders called Deploy to the ContosoUniversity.Database and ContosoUniversity.Web projects.

- In ContosoUniversity.Database\Deploy create two new files: Database.ps1 and DbDscResources.ps1. (Note that SQL Server Database Projects are a bit fussy about what can be created in Visual Studio so you might need to create these files in Windows Explorer and add them in as new items.)

- Database.ps1 should contain the following code:

```powershell
[CmdletBinding()]
param(
    [Parameter(Position=1)]
    [string]$domainSqlServerSetupLogin,

    [Parameter(Position=2)]
    [string]$domainSqlServerSetupPassword,

    [Parameter(Position=3)]
    [string]$sqlServerSaPassword,

    [Parameter(Position=4)]
    [string]$domainUserForIntegratedSecurityLogin
)

<#
Password parameters included intentionally to check for environment cloning errors
where failure to explicitly set the password in a cloned environment causes an
off-by-one error which these outputs can help track down
#>
Write-Verbose "The value of parameter `$domainSqlServerSetupLogin is $domainSqlServerSetupLogin" -Verbose
Write-Verbose "The value of parameter `$domainSqlServerSetupPassword is $domainSqlServerSetupPassword" -Verbose
Write-Verbose "The value of parameter `$sqlServerSaPassword is $sqlServerSaPassword" -Verbose
Write-Verbose "The value of parameter `$domainUserForIntegratedSecurityLogin is $domainUserForIntegratedSecurityLogin" -Verbose

$domainSqlServerSetupCredential = New-Object System.Management.Automation.PSCredential ($domainSqlServerSetupLogin, (ConvertTo-SecureString -String $domainSqlServerSetupPassword -AsPlainText -Force))
$sqlServerSaCredential = New-Object System.Management.Automation.PSCredential ("sa", (ConvertTo-SecureString -String $sqlServerSaPassword -AsPlainText -Force))

$configurationData = @{
    AllNodes = @(
        @{
            NodeName = $env:COMPUTERNAME
            PSDscAllowDomainUser = $true
            PSDscAllowPlainTextPassword = $true
            DomainSqlServerSetupCredential = $domainSqlServerSetupCredential
            SqlServerSaCredential = $sqlServerSaCredential
            DomainUserForIntegratedSecurityLogin = $domainUserForIntegratedSecurityLogin
        }
    )
}

Configuration Database
{
    Import-DscResource -ModuleName PSDesiredStateConfiguration
    Import-DscResource -ModuleName @{ModuleName="xSQLServer";ModuleVersion="1.5.0.0"}
    Import-DscResource -ModuleName @{ModuleName="xDatabase";ModuleVersion="1.4.0.0"}
    Import-DscResource -ModuleName @{ModuleName="xReleaseManagement";ModuleVersion="1.0.0.0"}

    Node $AllNodes.NodeName
    {
        WindowsFeature "NETFrameworkCore"
        {
            Ensure = "Present"
            Name = "NET-Framework-Core"
        }

        xSqlServerSetup "SQLServerEngine"
        {
            DependsOn = "[WindowsFeature]NETFrameworkCore"
            SourcePath = "\\prm-core-dc\DscInstallationMedia"
            SourceFolder = "SqlServer2014"
            SetupCredential = $Node.DomainSqlServerSetupCredential
            InstanceName = "MSSQLSERVER"
            Features = "SQLENGINE"
            SecurityMode = "SQL"
            SAPwd = $Node.SqlServerSaCredential
        }

        xDatabase DeployDac
        {
            DependsOn = "[xSqlServerSetup]SQLServerEngine"
            Ensure = "Present"
            SqlServer = $Node.Nodename
            SqlServerVersion = "2014"
            DatabaseName = "ContosoUniversity"
            Credentials = $Node.SqlServerSaCredential
            DacPacPath = "C:\temp\Database\ContosoUniversity.Database.dacpac"
            DacPacApplicationName = "ContosoUniversity.Database"
        }

        xTokenize ReplacePermissionsScriptConfigTokens
        {
            DependsOn = "[xDatabase]DeployDac"
            recurse = $false
            tokens = @{LOGIN_OR_USER = $Node.DomainUserForIntegratedSecurityLogin; DB_NAME = "ContosoUniversity"}
            useTokenFiles = $false
            path = "C:\temp\Database\Deploy"
            searchPattern = "*.sql"
        }

        Script ApplyPermissions
        {
            DependsOn = "[xTokenize]ReplacePermissionsScriptConfigTokens"
            SetScript = {
                $cmd = "& 'C:\Program Files\Microsoft SQL Server\Client SDK\ODBC\110\Tools\Binn\sqlcmd.exe' -S localhost -i 'C:\temp\Database\Deploy\Create login and database user.sql' "
                Invoke-Expression $cmd
            }
            TestScript = { $false }
            GetScript = { @{ Result = "" } }
        }

        # Configure for debugging / development mode only
        #xSqlServerSetup "SQLServerManagementTools"
        #{
        #    DependsOn = "[WindowsFeature]NETFrameworkCore"
        #    SourcePath = "\\prm-core-dc\DscInstallationMedia"
        #    SourceFolder = "SqlServer2014"
        #    SetupCredential = $Node.DomainSqlServerSetupCredential
        #    InstanceName = "NULL"
        #    Features = "SSMS,ADV_SSMS"
        #}
    }
}

Database -ConfigurationData $configurationData
```

- DbDscResources.ps1 should contain the following code:

```powershell
$customModulesDestination = Join-Path $env:SystemDrive "\Program Files\WindowsPowerShell\Modules"

# Modules need to have been copied to this UNC from a machine where they were installed
$customModulesSource = "\\prm-core-dc\DscResources"

Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource xSqlServer) -Destination $customModulesDestination
Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource xDatabase) -Destination $customModulesDestination
Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource xReleaseManagement) -Destination $customModulesDestination
```

- In ContosoUniversity.Web\Deploy create two new files: Website.ps1 and WebDscResources.ps1.

- Website.ps1 should contain the following code:

```powershell
[CmdletBinding()]
param(
    [Parameter(Position=1)]
    [string]$domainUserForIntegratedSecurityLogin,

    [Parameter(Position=2)]
    [string]$domainUserForIntegratedSecurityPassword,

    [Parameter(Position=3)]
    [string]$sqlServerName
)

<#
Password parameters included intentionally to check for environment cloning errors
where failure to explicitly set the password in a cloned environment causes an
off-by-one error which these outputs can help track down
#>
Write-Verbose "The value of parameter `$domainUserForIntegratedSecurityLogin is $domainUserForIntegratedSecurityLogin" -Verbose
Write-Verbose "The value of parameter `$domainUserForIntegratedSecurityPassword is $domainUserForIntegratedSecurityPassword" -Verbose
Write-Verbose "The value of parameter `$sqlServerName is $sqlServerName" -Verbose

$domainUserForIntegratedSecurityCredential = New-Object System.Management.Automation.PSCredential ($domainUserForIntegratedSecurityLogin, (ConvertTo-SecureString -String $domainUserForIntegratedSecurityPassword -AsPlainText -Force))

$configurationData = @{
    AllNodes = @(
        @{
            NodeName = $env:COMPUTERNAME
            DomainUserForIntegratedSecurityLogin = $domainUserForIntegratedSecurityLogin
            DomainUserForIntegratedSecurityCredential = $domainUserForIntegratedSecurityCredential
            SqlServerName = $sqlServerName
            PSDscAllowDomainUser = $true
            PSDscAllowPlainTextPassword = $true
        }
    )
}

Configuration Web
{
    Import-DscResource -ModuleName PSDesiredStateConfiguration
    Import-DscResource -ModuleName @{ModuleName="cWebAdministration";ModuleVersion="2.0.1"}
    Import-DscResource -ModuleName @{ModuleName="xWebAdministration";ModuleVersion="1.10.0.0"}
    Import-DscResource -ModuleName @{ModuleName="xReleaseManagement";ModuleVersion="1.0.0.0"}

    Node $AllNodes.NodeName
    {
        # Configure for web server role
        WindowsFeature DotNet45Core
        {
            Ensure = 'Present'
            Name = 'NET-Framework-45-Core'
        }
        WindowsFeature IIS
        {
            Ensure = 'Present'
            Name = 'Web-Server'
        }
        WindowsFeature AspNet45
        {
            Ensure = "Present"
            Name = "Web-Asp-Net45"
        }

        # Only turn off whilst sorting out the web files - needs to be on for rest of script to work
        Script StopIIS
        {
            DependsOn = "[WindowsFeature]IIS"
            SetScript = {
                Stop-Service W3SVC
            }
            TestScript = { $false }
            GetScript = { @{ Result = "" } }
        }

        # Make sure the web folder has the latest website files
        xTokenize ReplaceWebConfigTokens
        {
            Recurse = $false
            Tokens = @{DATA_SOURCE = $Node.SqlServerName; INITIAL_CATALOG = "ContosoUniversity"}
            UseTokenFiles = $false
            Path = "C:\temp\website"
            SearchPattern = "web.config"
        }
        Script DeleteExisitngWebsiteFilesSoAbsolutelyCertainAllFilesComeFromTheBuild
        {
            DependsOn = "[xTokenize]ReplaceWebConfigTokens"
            SetScript = {
                Remove-Item "C:\inetpub\ContosoUniversity" -Force -Recurse -ErrorAction SilentlyContinue
            }
            TestScript = { $false }
            GetScript = { @{ Result = "" } }
        }
        File CopyWebsiteFiles
        {
            DependsOn = "[Script]DeleteExisitngWebsiteFilesSoAbsolutelyCertainAllFilesComeFromTheBuild"
            Ensure = "Present"
            Force = $true
            Recurse = $true
            Type = "Directory"
            SourcePath = "C:\temp\website"
            DestinationPath = "C:\inetpub\ContosoUniversity"
        }
        File RemoveDeployFolder
        {
            DependsOn = "[File]CopyWebsiteFiles"
            Ensure = "Absent"
            Force = $true
            Type = "Directory"
            DestinationPath = "C:\inetpub\ContosoUniversity\Deploy"
        }
        Script StartIIS
        {
            DependsOn = "[File]RemoveDeployFolder"
            SetScript = {
                Start-Service W3SVC
            }
            TestScript = { $false }
            GetScript = { @{ Result = "" } }
        }

        # Configure custom app pool
        xWebAppPool ContosoUniversity
        {
            DependsOn = "[WindowsFeature]IIS"
            Ensure = "Present"
            Name = "ContosoUniversity"
            State = "Started"
        }
        cAppPool ContosoUniversity
        {
            DependsOn = "[xWebAppPool]ContosoUniversity"
            Name = "ContosoUniversity"
            IdentityType = "SpecificUser"
            UserName = $Node.DomainUserForIntegratedSecurityLogin
            Password = $Node.DomainUserForIntegratedSecurityCredential
        }

        # Advanced configuration
        xWebsite ContosoUniversity
        {
            DependsOn = "[cAppPool]ContosoUniversity"
            Ensure = "Present"
            Name = "ContosoUniversity"
            State = "Started"
            PhysicalPath = "C:\inetpub\ContosoUniversity"
            BindingInfo = MSFT_xWebBindingInformation
            {
                Protocol = 'http'
                Port = '80'
                HostName = $Node.NodeName
                IPAddress = '*'
            }
            ApplicationPool = "ContosoUniversity"
        }

        # Clean up the unneeded website and application pools
        xWebsite Default
        {
            Ensure = "Absent"
            Name = "Default Web Site"
        }
        xWebAppPool NETv45
        {
            Ensure = "Absent"
            Name = ".NET v4.5"
        }
        xWebAppPool NETv45Classic
        {
            Ensure = "Absent"
            Name = ".NET v4.5 Classic"
        }
        xWebAppPool Default
        {
            Ensure = "Absent"
            Name = "DefaultAppPool"
        }
        File wwwroot
        {
            Ensure = "Absent"
            Type = "Directory"
            DestinationPath = "C:\inetpub\wwwroot"
            Force = $True
        }

        # Configure for debugging / development mode only
        #WindowsFeature IISTools
        #{
        #    Ensure = "Present"
        #    Name = "Web-Mgmt-Tools"
        #}
    }
}

Web -ConfigurationData $configurationData
```

- WebDscResources.ps1 should contain the following code:

```powershell
$customModulesDestination = Join-Path $env:SystemDrive "\Program Files\WindowsPowerShell\Modules"

# Modules need to have been copied to this UNC from a machine where they were installed
$customModulesSource = "\\prm-core-dc\DscResources"

Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource xWebAdministration) -Destination $customModulesDestination
Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource cWebAdministration) -Destination $customModulesDestination
Copy-Item -Verbose -Force -Recurse -Path (Join-Path $customModulesSource xReleaseManagement) -Destination $customModulesDestination
```

- In ContosoUniversity.Database\Scripts move Create login and database user.sql to the Deploy folder and remove the Scripts folder.

- Make sure all these files have their Copy to Output Directory property set to Copy always. For the files in ContosoUniversity.Database\Deploy the Build Action property should be set to None.

The Database.ps1 and Website.ps1 scripts contain the PowerShell DSC that first configures each server for SQL Server or IIS and then deploys the actual component. See my Server Configuration as Code with PowerShell DSC post for more details. (At the risk of jumping ahead to the deployment part of this post, the bits to be deployed are copied to temp folders on the target nodes, hence the references in the scripts to C:\temp\$whatever$.)

In the case of the database component I'm using the xDatabase custom DSC resource to deploy the DACPAC. I came across a problem with this resource where it wouldn't install the DACPAC using domain credentials, despite the credentials having the correct permissions in SQL Server. I ended up having to install SQL Server using Mixed Mode authentication and install the DACPAC using the sa login. I know, I know!

My preferred technique for deploying website files is plain xcopy. For me the requirement is to clear the old files down and replace them with the new ones. After some experimentation I ended up with code to stop IIS, remove the web folder, copy the new web folder from its temp location and then restart IIS.

Both the database and website have files with configuration tokens that need replacing as part of the deployment. I'm using the xTokenize resource from the xReleaseManagement custom DSC module, which takes a hash table of tokens (in the __TOKEN_NAME__ format) to replace.
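To make the token idea concrete, here is a minimal PowerShell sketch of what replacing __TOKEN_NAME__ placeholders from a hash table involves. Expand-ConfigToken is a hypothetical helper written for illustration; it is not how xTokenize is implemented, and the connection string fragment is an assumed example rather than the real Web.config content.

```powershell
# Hypothetical helper for illustration only; not the xTokenize implementation.
function Expand-ConfigToken {
    param(
        [Parameter(Mandatory)][string] $Text,
        [Parameter(Mandatory)][hashtable] $Tokens
    )
    foreach ($name in $Tokens.Keys) {
        # A token named DATA_SOURCE appears in the file as __DATA_SOURCE__
        $placeholder = "__${name}__"
        # String.Replace is a literal (non-regex), case-sensitive replacement
        $Text = $Text.Replace($placeholder, [string]$Tokens[$name])
    }
    return $Text
}

# Assumed example input; the token names match those passed to xTokenize in Website.ps1
$template = 'Data Source=__DATA_SOURCE__;Initial Catalog=__INITIAL_CATALOG__;Integrated Security=True'
Expand-ConfigToken -Text $template -Tokens @{ DATA_SOURCE = 'PRM-DAT-AIO'; INITIAL_CATALOG = 'ContosoUniversity' }
# -> Data Source=PRM-DAT-AIO;Initial Catalog=ContosoUniversity;Integrated Security=True
```

Using a literal replace rather than a regex keeps values such as passwords containing `$` or `\` from being misinterpreted.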

In order to use custom resources on target nodes, the custom resources need to be in place before a configuration is run. I had hoped to use a push server technique for this but it was not to be: for this post at least I'm running the DSC configurations on the actual target nodes, and the push server technique only works if the MOF files are created on a staging machine that has the custom resources installed. Instead I'm copying the custom resources to the target nodes just prior to running the DSC configurations, which is the purpose of the DbDscResources.ps1 and WebDscResources.ps1 files. The custom resources live on a UNC share that is available to the target nodes, and they get there by simply being copied to the UNC from a machine where they have been installed (in C:\Program Files\WindowsPowerShell\Modules).
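WebDscResources.ps1 repeats one Copy-Item line per module. As a sketch of an alternative, the hypothetical helper below takes the module names as a parameter and stops immediately if a module is missing from the share, rather than letting the problem surface later when the configuration runs. The share path and module names in the usage comment come from WebDscResources.ps1; the helper itself is an assumption, not part of the original scripts.

```powershell
# Hypothetical helper: a parameterised take on the Copy-Item lines in WebDscResources.ps1.
# Assumes the destination folder (the local module path) already exists.
function Copy-DscResourceModule {
    param(
        [Parameter(Mandatory)][string]   $Source,
        [Parameter(Mandatory)][string]   $Destination,
        [Parameter(Mandatory)][string[]] $Name
    )
    foreach ($module in $Name) {
        $modulePath = Join-Path $Source $module
        # Fail fast if the module was never copied to the share
        if (-not (Test-Path -Path $modulePath)) {
            throw "DSC resource module '$module' not found at '$modulePath'"
        }
        # Copies the module folder and its contents into the destination folder
        Copy-Item -Path $modulePath -Destination $Destination -Recurse -Force -Verbose
    }
}

# Usage on a target node, mirroring WebDscResources.ps1:
# Copy-DscResourceModule -Source '\\prm-core-dc\DscResources' `
#     -Destination (Join-Path $env:SystemDrive '\Program Files\WindowsPowerShell\Modules') `
#     -Name xWebAdministration, cWebAdministration, xReleaseManagement
```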

Create a Release Build

With the Visual Studio solution now configured (don't forget to commit the changes) we now need to create a build that confirms the initial code quality checks pass and, if they do, publishes the database and website components ready for deployment. Create a new build definition called ContosoUniversity.Rel and follow this post to configure the basics and this post to create a task to run unit tests. Note that for the Visual Studio Build task the MSBuild Arguments setting is /p:OutDir=$(build.stagingDirectory) /p:UseWPP_CopyWebApplication=True /p:PipelineDependsOnBuild=False /p:RunCodeAnalysis=True. This gives us a _PublishedWebsites\ContosoUniversity.Web folder (that contains all the web files that need to be deployed) and also runs the transformation to tokenise Web.config. Additionally, since we are outputting to $(build.stagingDirectory), the Test Assembly setting of the Visual Studio Test task needs to be $(build.stagingDirectory)\**\*UnitTests*.dll;-:**\obj\**. At some point we'll want to version our assemblies but I'll return to that in another post.

One important step that has changed since my earlier posts is that the Restore NuGet Packages option in the Visual Studio Build task has been deprecated. The new way of doing this is to add a NuGet Installer task as the very first item and then in the Visual Studio Build task (in the Advanced section in VSTS) uncheck Restore NuGet Packages.

To publish the database and website as components -- or Artifacts (I'm using the TFS spelling) as they are known -- we use the Copy and Publish Build Artifacts tasks. The database task should be configured as follows:

- Copy Root = $(build.stagingDirectory)

- Contents =

  - ContosoUniversity.Database.d*

  - Deploy\Database.ps1

  - Deploy\DbDscResources.ps1

  - Deploy\Create login and database user.sql

- Artifact Name = Database

- Artifact Type = Server

Note that the Contents setting can take multiple entries on separate lines and we use this to be explicit about what the database artifact should contain. The website task should be configured as follows:

- Copy Root = $(build.stagingDirectory)\_PublishedWebsites

- Contents = **\*

- Artifact Name = Website

- Artifact Type = Server

Because we are specifying a published folder of website files that already has the Deploy folder present there's no need to be explicit about our requirements. With all this done the build should look similar to this:

In order to test the successful creation of the artifacts, queue a build and then -- assuming the build was successful -- navigate to the build and click on the Artifacts link. You should see the Database and Website artifact folders and you can examine the contents using the Explore link:

Create a Basic Release

With the artifacts created we can now turn our attention to creating a basic release to get them copied on to a target node and then perform a deployment. Switch to the Release hub in the web portal and use the green cross icon to create a new release definition. The Deployment Templates window is presented and you should choose to start with an Empty template. There are four immediate actions to complete:

- Provide a Definition name -- ContosoUniversity for example.

- Change the name of the environment that has been added to DAT.

- Click on Link to a build definition to link the release to the ContosoUniversity.Rel build definition.

- Save the definition.

Next up we need to add two Windows Machine File Copy tasks to copy each artifact to one node called PRM-DAT-AIO. (As a reminder, the DAT environment as I define it is just one server which hosts both the website and the database and where automated testing takes place.) Although it's possible to use just one task here, the result of selecting artifacts differs according to which node is selected in the artifact tree: at the root, a folder is created for each artifact, but go one node lower and it isn't. I want a procedure that works for all environments, which is as follows:

- Click on Add tasks to bring up the Add Tasks window. Use the Deploy link to filter the list of tasks and Add two Windows Machine File Copy tasks:

- Configure the properties of the tasks as follows:

  - Edit the names (use the pencil icon) to read Copy Database files and Copy Website files respectively.

  - Source = $(System.DefaultWorkingDirectory)/ContosoUniversity.Rel/Database or $(System.DefaultWorkingDirectory)/ContosoUniversity.Rel/Website accordingly (use the ellipsis to select)

  - Machines = PRM-DAT-AIO.prm.local

  - Admin login = Supply a domain account login that has admin privileges for PRM-DAT-AIO.prm.local

  - Password = Password for the above domain account

  - Destination folder = C:\temp\Database or C:\temp\Website accordingly

  - Advanced Options > Clean Target = checked

- Click the ellipsis in the DAT environment and choose Deployment conditions.

- Change the Trigger to After release creation and click OK to accept.

- Save the changes and trigger a release using the green cross next to Release. You'll be prompted to select a build as part of the process:

- If the release succeeds, a C:\temp folder containing the artifact folders will have been created on PRM-DAT-AIO.

- If the release fails, switch to the Logs tab to troubleshoot. Permissions and whether the firewall has been configured to allow WinRM are the likely culprits. To preserve my sanity I do everything as domain admin and I have the domain firewall turned off. The usual warnings about these not necessarily being best practices in non-test environments apply!

Whilst you are checking the C:\temp folder on the target node, have a look inside the artifact folders. They should both contain a Deploy folder holding the PowerShell scripts that will be executed remotely using the PowerShell on Target Machines task. You'll need two of these tasks, one for each artifact, configured as follows:

- Add two PowerShell on Target Machines tasks, positioning each one directly after its corresponding Windows Machine File Copy task.

- Edit the names (use the pencil icon) to read Configure Database and Configure Website respectively.

- Configure the properties of the tasks as follows:

  - Machines = PRM-DAT-AIO.prm.local

  - Admin login = Supply a domain account that has admin privileges for PRM-DAT-AIO.prm.local

  - Password = Password for the above domain account

  - Protocol = HTTP

  - Deployment > PowerShell Script = C:\temp\Database\Deploy\Database.ps1 or C:\temp\Website\Deploy\Website.ps1 accordingly

  - Deployment > Initialization Script = C:\temp\Database\Deploy\DbDscResources.ps1 or C:\temp\Website\Deploy\WebDscResources.ps1 accordingly

- With reference to the parameters required by C:\temp\Database\Deploy\Database.ps1, configure Deployment > Script Arguments for the Database task as follows:

  - $domainSqlServerSetupLogin = Supply a domain login that has privileges to install SQL Server on PRM-DAT-AIO.prm.local

  - $domainSqlServerSetupPassword = Password for the above domain login

  - $sqlServerSaPassword = Password you want to use for the SQL Server sa account

  - $domainUserForIntegratedSecurityLogin = Supply a domain login to use for integrated security (PRM\CU-DAT in my case for the DAT environment)

  - The finished result will be similar to: 'PRM\Graham' 'YourSecurePassword' 'YourSecurePassword' 'PRM\CU-DAT'

- With reference to the parameters required by C:\temp\Website\Deploy\Website.ps1, configure Deployment > Script Arguments for the Website task as follows:

  - $domainUserForIntegratedSecurityLogin = Supply a domain login to use for integrated security (PRM\CU-DAT in my case for the DAT environment)

  - $domainUserForIntegratedSecurityPassword = Password for the above domain account

  - $sqlServerName = machine name for the SQL Server instance (PRM-DAT-AIO in my case for the DAT environment)

  - The finished result will be similar to: 'PRM\CU-DAT' 'YourSecurePassword' 'PRM-DAT-AIO'
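Because the Script Arguments values are supplied without parameter names, PowerShell binds them by position, so their order has to match the order of the param block in the target script. The sketch below is a hypothetical stand-in for the top of Website.ps1 (the parameter names come from the list above; the body just echoes what was bound) that shows the mapping.

```powershell
# Hypothetical stand-in for the top of Website.ps1: only the param block matters here.
$websiteScript = {
    param(
        [string] $domainUserForIntegratedSecurityLogin,
        [string] $domainUserForIntegratedSecurityPassword,
        [string] $sqlServerName
    )
    # Echo what was bound so the positional mapping can be inspected
    [pscustomobject]@{
        Login     = $domainUserForIntegratedSecurityLogin
        Password  = $domainUserForIntegratedSecurityPassword
        SqlServer = $sqlServerName
    }
}

# Positional, in the same order as the Script Arguments field
$bound = & $websiteScript 'PRM\CU-DAT' 'YourSecurePassword' 'PRM-DAT-AIO'
$bound.SqlServer   # -> PRM-DAT-AIO
```

Supplying named arguments instead (for example -sqlServerName 'PRM-DAT-AIO') should remove the dependency on ordering, at the cost of a longer Script Arguments string.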

At this point you should be able to save everything and the release should look similar to this:

Go ahead and trigger a new release. This should result in the PowerShell scripts being executed on the target node and IIS and SQL Server being installed, as well as the Contoso University application. You should be able to browse the application at http://prm-dat-aio. Result!

Variable Quality

Although we now have a working release for the DAT environment it will hopefully be obvious that there are serious shortcomings with the way we've configured the release. Passwords in plain view is one issue and repeated values is another. The latter issue is doubly of concern when we start creating further environments.

The answer to this problem is to create custom variables at both a 'release' level and at the 'environment' level. Pretty much every text box seems to accept a variable, so you can really go to town here. It's also possible to create compound values based on multiple variables; I used this to separate the location of the C:\temp folder from the rest of the script location details. It's worth thinking about your variable names before using them, because if you change your mind you'll need to edit every place they were used. In particular, if you edit the declaration of a secret variable you will need to click the padlock to clear the value and then re-enter it. This tripped me up until I added Write-Verbose statements to output the parameters in my DSC scripts and realised that passwords were not being passed through (they are asterisked so there is no security concern). (You do get the scriptArguments as output to the console but I find having them each on a separate line easier.)
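Logging each parameter on its own line is what exposed the missing passwords. A minimal sketch of that idea follows. Write-ScriptParameter is a hypothetical helper, and masking secrets in the helper itself (while still reporting whether they arrived empty) is an assumption about what's useful, not what the original DSC scripts do. Inside a script it could be called as Write-ScriptParameter -Parameters $PSBoundParameters -SecretName domainUserForIntegratedSecurityPassword -Verbose.

```powershell
# Hypothetical helper: writes one verbose line per parameter, masking secrets
# but still showing whether a secret value actually arrived.
function Write-ScriptParameter {
    [CmdletBinding()]
    param(
        # IDictionary accepts both hash tables and $PSBoundParameters
        [Parameter(Mandatory)][System.Collections.IDictionary] $Parameters,
        [string[]] $SecretName = @()
    )
    foreach ($name in ($Parameters.Keys | Sort-Object)) {
        $value = $Parameters[$name]
        if ($SecretName -contains $name) {
            # An empty secret is exactly the symptom of a cleared, never re-entered variable
            $value = if ([string]::IsNullOrEmpty($value)) { '<empty>' } else { '********' }
        }
        Write-Verbose ('{0} = {1}' -f $name, $value)
    }
}

# Example: the cleared-secret case shows up as <empty>
Write-ScriptParameter -Parameters @{ sqlServerName = 'PRM-DAT-AIO'; domainUserForIntegratedSecurityPassword = '' } `
    -SecretName domainUserForIntegratedSecurityPassword -Verbose
```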

Release-level variables are created in the Configuration section and, if they are passwords, can be secured as secrets by clicking the padlock icon. The release-level variables I created are as follows:

Environment-level variables are created by clicking the ellipsis in the environment and choosing Configure Variables. I created the following:

The variables can then be used to reconfigure the release as per this screen shot which shows the PowerShell on Target Machines Configure Database task:

The other tasks are obviously configured in a similar way; notice how some fields use more than one variable. Nothing has actually changed by replacing hard-coded values with variables, so triggering another release should be successful.
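Release Management expands $(Name) references itself, so nothing needs writing for this to work. The sketch below is only a hypothetical, simplified model of how a compound value such as a script path resolves; the TempFolder and Component variable names are assumptions, since the actual variables appear only in the screenshots. It may differ from the real behaviour: for example it matches names case-sensitively and doesn't handle nested references.

```powershell
# Hypothetical model of $(Name) expansion; Release Management does this for you.
function Expand-ReleaseVariable {
    param(
        [Parameter(Mandatory)][string] $Value,
        [Parameter(Mandatory)][hashtable] $Variables
    )
    foreach ($name in $Variables.Keys) {
        # Single quotes stop PowerShell treating $( ) as a subexpression
        $Value = $Value.Replace('$(' + $name + ')', [string]$Variables[$name])
    }
    # Unknown references such as $(Missing) are left as-is
    return $Value
}

$variables = @{ TempFolder = 'C:\temp'; Component = 'Database' }
Expand-ReleaseVariable -Value '$(TempFolder)\$(Component)\Deploy\Database.ps1' -Variables $variables
# -> C:\temp\Database\Deploy\Database.ps1
```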

Environments Matter

With a successful deployment to the DAT environment we can now turn our attention to the other stages of the deployment pipeline: DQA and PRD. The good news here is that all the work we did for DAT can easily be cloned for DQA, which can then be cloned for PRD. Here's the procedure for DQA, which, don't forget, is a two-node deployment:

- In the Configuration section create two new release level variables:

  - TargetNode-DQA-SQL = PRM-DQA-SQL.prm.local

  - TargetNode-DQA-IIS = PRM-DQA-IIS.prm.local

- In the DAT environment click on the ellipsis and select Clone environment and name it DQA.

- Change the two database tasks so the Machines property is $(TargetNode-DQA-SQL).

- Change the two website tasks so the Machines property is $(TargetNode-DQA-IIS).

- In the DQA environment click on the ellipsis and select Configure variables and make the following edits:

  - Change DomainUserForIntegratedSecurityLogin to PRM\CU-DQA

  - Click on the padlock icon for the DomainUserForIntegratedSecurityPassword variable to clear it then re-enter the password and click the padlock icon again to make it a secret. Don't miss this!

  - Change SqlServerName to PRM-DQA-SQL

- In the DQA environment click on the ellipsis and select Deployment conditions and set Trigger to No automated deployment.

With everything saved, and assuming the PRM-DQA-SQL and PRM-DQA-IIS nodes are running, the release can now be triggered. Assuming the deployment to DAT was successful, the release will wait for DQA to be manually deployed (almost certainly what is required, as manual testing could be going on here):

To keep things simple I didn't assign any approvals for this release (ie they were all automatic) but do bear in mind there is some rich and flexible functionality available around this. If all is well you should be able to browse Contoso University on http://prm-dqa-iis. I won't describe cloning DQA to create PRD as it's very similar to the process above. Just don't forget to re-enter cloned password values! Do note that in the Environment Variables view of the Configuration section you can view and edit (but not create) the environment-level variables for all environments:

This is a great way to check that variables are the correct values for the different environments.

And Finally...

There's plenty more functionality in Release Management that I haven't described but that's as far as I'm going in this post. One message I do want to get across is that the procedure I describe in this post is not meant to be a statement on the definitive way of using Release Management. Rather, it's designed to show what's possible and to get you thinking about your own situation and some of the factors that you might need to consider. As just one example, if you only have one application then the Visual Studio solution for the application is probably fine for the DSC code that installs IIS and SQL Server. However if you have multiple similar applications then almost certainly you don't want all that code repeated in every solution. Moving this code to the point at which the nodes are created could be an option here -- or perhaps there is a better way!

That's it for the moment but rest assured there's lots more to be covered in this series. If you want the final code that accompanies this post I've created a release here on my GitHub site.

Cheers -- Graham

Continuous Delivery with TFS / VSTS – Infrastructure as Code with Azure Resource Manager Templates

So far in this blog post series on Continuous Delivery with TFS / VSTS we have gradually worked our way to the position of having a build of our application which is almost ready to be deployed to target servers (or nodes if you prefer) in order to conduct further testing before finally making its way to production. This brings us to the question of how these nodes should be provisioned and configured. In my previous series on continuous delivery, deployment was to nodes that had been created and configured manually. However, with the wealth of automation tools available to us we can (and should) improve on that. This post explains how to achieve the first of those steps: provisioning a Windows Server virtual machine using Azure Resource Manager templates. A future post will deal with the configuration side of things using PowerShell DSC.

Before going further I should point out that this post is a bit different from my other posts in that it is very specific to Azure. If you are attempting to implement continuous delivery in an on-premises situation, chances are that the specifics of what I cover here are not directly usable. Consequently, I'm writing this post in the spirit of getting you to think about this topic with a view to investigating what's possible for your situation. Additionally, if you are not in the continuous delivery space and have stumbled across this post through serendipity, I do hope you will be able to follow along with my workflow for creating templates. Once you get past the Big Picture section it's reasonably generic, and you can find the code that accompanies this post at my GitHub repository here.

The Infrastructure Big Picture

In order to understand where I am going with this post it's probably helpful to understand the big picture as it relates to this blog series on continuous delivery. Our final continuous delivery pipeline is going to consist of three environments:

- DAT -- development automated test, where automated UI testing takes place. This will be an 'all in one' VM hosting both SQL Server and IIS. Why have an all-in-one VM? Because the purpose of this environment is to run automated tests, and if those tests fail we want a high degree of certainty that it was because of the code and not other factors such as network problems or a database timeout. To achieve that certainty we need to eliminate as many influencing variables as possible, and the simplest way of doing that is to have everything running on the same VM. It breaks the rule about early environments reflecting production, but if you are in an on-premises situation where your VMs run on hand-me-down infrastructure and your network is busy at night (when your tests are likely running) backing up VMs and goodness knows what else, then you might come to appreciate the need for an all-in-one VM for automated testing.

- DQA -- development quality assurance where high-value manual testing takes place. This really does need to reflect production so it will consist of a database VM and a web server VM.

- PRD -- production for the live code. It will consist of a database VM and a web server VM.

These environments map out to the following infrastructure I'll be creating in Azure:

- PRM-DAT -- resource group to hold everything for the DAT environment

- PRM-DAT-AIO -- all in one VM for the DAT environment

- PRM-DQA -- resource group to hold everything for the DQA environment

- PRM-DQA-SQL -- database VM for the DQA environment

- PRM-DQA-IIS -- web server VM for the DQA environment

- PRM-PRD -- resource group to hold everything for the PRD environment

- PRM-PRD-SQL -- database VM for the PRD environment

- PRM-PRD-IIS -- web server VM for the PRD environment

The advantage of using resource groups as containers is that an environment can be torn down very easily. This makes more sense when you realise that it's not just the VM that needs tearing down but also storage accounts, network security groups, network interfaces and public IP addresses.
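The naming scheme above is regular enough to generate, which can help keep scripts for the three environments consistent. The function below is a hypothetical sketch (Get-EnvironmentInfrastructure isn't part of any module) that derives the resource group and VM names from the environment name, following the list above.

```powershell
# Hypothetical helper: derives the names used in this series from the environment.
function Get-EnvironmentInfrastructure {
    param(
        [Parameter(Mandatory)][ValidateSet('DAT', 'DQA', 'PRD')][string] $Environment,
        [string] $Prefix = 'PRM'
    )
    # DAT is a single all-in-one VM; DQA and PRD split SQL Server and IIS
    $roles = if ($Environment -eq 'DAT') { @('AIO') } else { @('SQL', 'IIS') }
    $resourceGroup = "$Prefix-$Environment"
    [pscustomobject]@{
        ResourceGroup   = $resourceGroup
        VirtualMachines = @($roles | ForEach-Object { "$resourceGroup-$_" })
    }
}

Get-EnvironmentInfrastructure -Environment DQA
# ResourceGroup: PRM-DQA, VirtualMachines: PRM-DQA-SQL, PRM-DQA-IIS
```

Tearing an environment down would then be a matter of removing its resource group, for example Remove-AzureRmResourceGroup -Name (Get-EnvironmentInfrastructure -Environment DAT).ResourceGroup -Force, which deletes everything the group contains.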

Overview of the ARM Template Development Workflow

We're going to be creating our infrastructure using ARM templates, which is a declarative approach, ie we declare what we want and some other system 'makes it so'. This is in contrast to an imperative approach where we specify exactly what should happen and in what order. (We can use an imperative approach with ARM using PowerShell but we don't get any parallelisation benefits.) If you need to get up to speed with ARM templates I have a Getting Started blog post with a collection of useful links here. The problem, for me at least, is that although Microsoft provide example templates for creating a Windows Server VM (for instance) they are heavily parametrised and designed to work as standalone VMs, and it's not immediately obvious how they can fit in to an existing network. There's also the issue that at first glance all that JSON can look quite intimidating! Fear not though, as I have figured out what I hope is a great workflow for creating ARM templates which is both instructive and productive. It brings together a number of tools and technologies and I make the assumption that you are familiar with these. If not, I've blogged about most of them before. A summary of the workflow steps with prerequisites and assumptions is as follows:

- Create a Model VM in Azure Portal. The ARM templates that Microsoft provide tend to result in infrastructure that has different internal names compared with the same infrastructure created through the Azure Portal. I like how the portal names things, and in order to help replicate that naming convention for VMs I find it useful to create a model VM in the portal whose components I can examine via the Azure Resource Explorer.

- Create a Visual Studio Solution. Probably the easiest way to work with ARM templates is in Visual Studio. You'll need the Azure SDK installed to see the Azure Resource Group project template -- see here for more details. We'll also be using Visual Studio to deploy the templates using PowerShell and for that you'll need the PowerShell Tools for Visual Studio extension. If you are new to this I have a Getting Started blog post here. We'll be using Git in either TFS or VSTS for version control but if you are following this series we've already covered that.

- Perform an Initial Deployment. There's nothing worse than spending hours coding only to find that what you're hoping to do doesn't work and that the problem is hard to trace. The answer of course is to deploy early and that's the purpose of this step.

- Build the Deployment Template Resource by Resource Using Hard-coded Values. The Microsoft templates really go to town when it comes to implementing variables and parameters. That level of detail isn't required here but it's hard to see just how much is required until the template is complete. My workflow involves using hard-coded values initially so the focus can remain on getting the template working and then refactoring later.

- Refactor the Template with Parameters, Variables and Functions. For me, refactoring to remove the hard-coded values is one of the most fun and rewarding parts of the process. There's a wealth of programming functionality available in ARM templates -- see here for all the details.

- Use the Template to Create Multiple VMs. We've proved the template can create a single VM -- what about multiple VMs? This section explores the options.

That's enough overview -- time to get stuck in!

Create a Model VM in Azure Portal

As above, the first VM we'll create using an ARM template is going to be called PRM-DAT-AIO in a resource group called PRM-DAT. In order to help build the template we'll create a model VM called PRM-DAT-AAA in a resource group called PRM-DAT via the Azure Portal. The procedure is as follows:

- Create a resource group called PRM-DAT in your preferred location -- in my case West Europe.

- Create a standard (Standard-LRS) storage account in the new resource group -- I named mine prmdataaastorageaccount. Don't enable diagnostics.

- Create a Windows Server 2012 R2 Datacenter VM (size right now doesn't matter much -- I chose Standard DS1 to keep costs down) called PRM-DAT-AAA based on the PRM-DAT resource group, the prmdataaastorageaccount storage account and the prmvirtualnetwork that was created at the beginning of this blog series as the common virtual network for all VMs. Don't enable monitoring.

- In Public IP addresses locate PRM-DAT-AAA and under configuration set the DNS name label to prm-dat-aaa.

- In Network security groups locate PRM-DAT-AAA and add the following tag: displayName : NetworkSecurityGroup.

- In Network interfaces locate PRM-DAT-AAAnnn (where nnn represents any number) and add the following tag: displayName : NetworkInterface.

- In Public IP addresses locate PRM-DAT-AAA and add the following tag: displayName : PublicIPAddress.

- In Storage accounts locate prmdataaastorageaccount and add the following tag: displayName : StorageAccount.

- In Virtual machines locate PRM-DAT-AAA and add the following tag: displayName : VirtualMachine.

You can now explore all the different parts of this VM in the Azure Resource Explorer. For example, the public IP address should look similar to:

Create a Visual Studio Solution

We'll be building and running our ARM template in Visual Studio. You may want to refer to previous posts (here and here) as a reminder for some of the configuration steps which are as follows:

- In the Web Portal navigate to your team project and add a new Git repository called Infrastructure.

- In Visual Studio clone the new repository to a folder called Infrastructure at your preferred location on disk.

- Create a new Visual Studio Solution (not project!) called Infrastructure one level higher than the Infrastructure folder. This effectively stops Visual Studio from creating an unwanted folder.

- Add .gitignore and .gitattributes files and perform a commit.

- Add a new Visual Studio Project to the solution of type Azure Resource Group called DeploymentTemplates. When asked to select a template choose anything.

- Delete the Scripts, Templates and Tools folders from the project.

- Add a new project to the solution of type PowerShell Script Project called DeploymentScripts.

- Delete Script.ps1 from the project.

- In the DeploymentTemplates project add a new Azure Resource Manager Deployment Project item called WindowsServer2012R2Datacenter.json (spaces not allowed).

- In the DeploymentScripts project add a new PowerShell Script item for the PowerShell that will create the PRM-DAT resource group with a PRM-DAT-AIO server -- I called my file Create PRM-DAT.ps1.

- Perform a commit and sync to get everything safely under version control.

With all that configuration you should have a Visual Studio solution looking something like this:

Perform an Initial Deployment

It's now time to write just enough code in Create PRM-DAT.ps1 to prove that we can initiate a deployment from PowerShell. First up is the code to authenticate to Azure PowerShell. I have the authentication code from this post wrapped in a function called Set-AzureRmAuthenticationForMsdnEnterprise, which in turn is contained in a PowerShell module file called Authentication.psm1. This file is deployed to C:\Users\Graham\Documents\WindowsPowerShell\Modules\Authentication, which allows me to call Set-AzureRmAuthenticationForMsdnEnterprise from anywhere on my development machine. (Although this function could clearly be made more generic with the use of some parameters, I've consciously chosen not to so I can check my code in to GitHub without worrying about exposing any authentication details.) The initial contents of Create PRM-DAT.ps1 should end up looking as follows:

```powershell
# Authentication details are abstracted away in a PS module
Set-AzureRmAuthenticationForMsdnEnterprise

# Make sure we can get the root of the solution wherever it's installed to
$solutionRoot = Split-Path -Path $MyInvocation.MyCommand.Definition -Parent

# Always need the resource group to be present
New-AzureRmResourceGroup -Name PRM-DAT -Location westeurope -Force

# Deploy the contents of the template
New-AzureRmResourceGroupDeployment -ResourceGroupName PRM-DAT `
    -TemplateFile "$solutionRoot\..\DeploymentTemplates\WindowsServer2012R2Datacenter.json" `
    -Force -Verbose
```

Running this code in Visual Studio should result in a successful outcome, although admittedly not much has happened because the resource group already existed and the deployment template is empty. Nonetheless, it's progress!

Build the Deployment Template Resource by Resource Using Hard-coded Values

The first resource we'll code is a storage account. In the DeploymentTemplates project open WindowsServer2012R2Datacenter.json which as things stand just contains some boilerplate JSON for the different sections of the template that we'll be completing. What you should notice is the JSON Outline window is now available to assist with editing the template. Right-click resources and choose Add New Resource:

In the Add Resource window find Storage Account and add it with the name (actually the display name) of StorageAccount:

This results in boilerplate JSON being added to the template along with a variable for the actual storage account name and a parameter for the account type. We'll use a variable later, but for now delete the variable and parameter that were added; you can either use the JSON Outline window or manually edit the template.

We now need to edit the properties of the resource with actual values that can create (or update) the resource. In order to understand what to add we can use the Azure Resource Explorer to navigate down to the storageAccounts node of the MSDN subscription where we created prmdataaastorageaccount:

In the right-hand pane of the explorer we can see the JSON that represents this concrete resource, and although the properties names don't always match exactly it should be fairly easy to see how the ‘live' values can be used as a guide to populating the ones in the deployment template:

So, back in the deployment template, the following unassigned properties can be given these values:

- "name": "prmdataiostorageaccount"

- "location": "West Europe"

- "accountType": "Standard_LRS"

The resulting JSON should be similar to:

```json
{
  "name": "prmdataiostorageaccount",
  "type": "Microsoft.Storage/storageAccounts",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "dependsOn": [ ],
  "tags": {
    "displayName": "StorageAccount"
  },
  "properties": {
    "accountType": "Standard_LRS"
  }
}
```

Save the template and switch to Create PRM-DAT.ps1 to run the deployment script, which should create the storage account. You can verify this either via the portal or the explorer.

The next resource we'll create is a NetworkSecurityGroup, which has an extra twist: at the time of writing, adding it to the template isn't supported by the JSON Outline window. There are a couple of ways to go here -- either type the JSON by hand or use the Create function in the Azure Resource Explorer to generate some boilerplate JSON. The latter technique actually generates more JSON than is needed, so in this case it is something of a hindrance. I just typed the JSON directly, making use of the IntelliSense options and using the values of the PRM-DAT-AAA network security group in the Azure Resource Explorer as a guide. The JSON that needs adding is as follows:

```json
{
  "name": "PRM-DAT-AIO",
  "type": "Microsoft.Network/networkSecurityGroups",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "tags": {
    "displayName": "NetworkSecurityGroup"
  },
  "properties": {
    "securityRules": [
      {
        "name": "default-allow-rdp",
        "properties": {
          "protocol": "Tcp",
          "sourcePortRange": "*",
          "destinationPortRange": "3389",
          "sourceAddressPrefix": "*",
          "destinationAddressPrefix": "*",
          "access": "Allow",
          "priority": 1000,
          "direction": "Inbound"
        }
      }
    ]
  }
}
```

Note that you'll need to separate this resource from the storage account resource with a comma to ensure the syntax is valid. Save the template, run the deployment and refresh the Azure Resource Explorer. You can now compare the new PRM-DAT-AIO and PRM-DAT-AAA network security groups in the explorer to validate the JSON that creates PRM-DAT-AIO. By zooming out in your browser you can toggle between the two resources and see that it is pretty much just the etag values that differ.

The next resource to add is a public IP address. This can be added from the JSON Outline window using PublicIPAddress as the name, but the wizard also wants to add a reference to it from a network interface, which in turn wants to reference a virtual network. We are going to use an existing virtual network but we do need a network interface, so give the new network interface a name of NetworkInterface and give the new virtual network any temporary name. As soon as the new JSON components have been added, delete the virtual network and all of the variables and parameters that were added. All this makes sense when you do it -- trust me!

Once edited with the appropriate values, the JSON for the public IP address should be as follows:

```json
{
  "name": "PRM-DAT-AIO",
  "type": "Microsoft.Network/publicIPAddresses",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "tags": {
    "displayName": "PublicIPAddress"
  },
  "properties": {
    "publicIPAllocationMethod": "Dynamic",
    "dnsSettings": {
      "domainNameLabel": "prm-dat-aio"
    }
  }
}
```

The edited JSON for the network interface should look similar to the code that follows, but note I've replaced my MSDN subscription GUID with an ellipsis.

```json
{
  "name": "prm-dat-aio123",
  "type": "Microsoft.Network/networkInterfaces",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "dependsOn": [
    "[concat('Microsoft.Network/publicIPAddresses/', 'PRM-DAT-AIO')]",
    "[concat('Microsoft.Network/networkSecurityGroups/', 'PRM-DAT-AIO')]"
  ],
  "tags": {
    "displayName": "NetworkInterface"
  },
  "properties": {
    "ipConfigurations": [
      {
        "name": "ipconfig1",
        "properties": {
          "privateIPAllocationMethod": "Dynamic",
          "subnet": {
            "id": "/subscriptions/.../resourceGroups/PRM-COMMON/providers/Microsoft.Network/virtualNetworks/prmvirtualnetwork/subnets/default"
          },
          "publicIPAddress": {
            "id": "/subscriptions/.../resourceGroups/PRM-DAT/providers/Microsoft.Network/publicIPAddresses/PRM-DAT-AIO"
          }
        }
      }
    ],
    "networkSecurityGroup": {
      "id": "/subscriptions/.../resourceGroups/PRM-DAT/providers/Microsoft.Network/networkSecurityGroups/PRM-DAT-AIO"
    }
  }
}
```

It's worth remembering at this stage that we're hard-coding references to other resources. We'll fix that up later on, but for the moment note that the network interface needs to know what virtual network subnet it's on (created in an earlier post), and which public IP address and network security group it's using. Also note the dependsOn section which ensures that these resources exist before the network interface is created. At this point you should be able to run the deployment and confirm that the new resources get created.
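As a preview of where the refactoring could end up, here is a minimal sketch of the same network interface with the hard-coded IDs replaced by the ARM resourceId() function. It assumes, as above, that prmvirtualnetwork and its default subnet live in the PRM-COMMON resource group; the resource names are still the hard-coded ones from this post, and this is one possible approach rather than necessarily how the final template does it.

```json
{
  "name": "prm-dat-aio123",
  "type": "Microsoft.Network/networkInterfaces",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "dependsOn": [
    "[resourceId('Microsoft.Network/publicIPAddresses', 'PRM-DAT-AIO')]",
    "[resourceId('Microsoft.Network/networkSecurityGroups', 'PRM-DAT-AIO')]"
  ],
  "tags": {
    "displayName": "NetworkInterface"
  },
  "properties": {
    "ipConfigurations": [
      {
        "name": "ipconfig1",
        "properties": {
          "privateIPAllocationMethod": "Dynamic",
          "subnet": {
            "id": "[resourceId('PRM-COMMON', 'Microsoft.Network/virtualNetworks/subnets', 'prmvirtualnetwork', 'default')]"
          },
          "publicIPAddress": {
            "id": "[resourceId('Microsoft.Network/publicIPAddresses', 'PRM-DAT-AIO')]"
          }
        }
      }
    ],
    "networkSecurityGroup": {
      "id": "[resourceId('Microsoft.Network/networkSecurityGroups', 'PRM-DAT-AIO')]"
    }
  }
}
```

When resourceId() is given only a resource type and name it resolves against the subscription and resource group being deployed to, so the subscription GUID no longer needs to appear in the template. Passing a resource group name as the first argument, as for the subnet, points it at a different resource group in the same subscription.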

Finally we can add a Windows virtual machine resource. This is supported from the JSON Outline window; however, this resource wants to reference a storage account and a virtual network. The storage account already exists and should be selected, but once again we'll need to use a temporary name for the virtual network and then delete it along with the added variables and parameters. Name the virtual machine resource VirtualMachine. Edit the JSON with appropriate hard-coded values, which should end up looking as follows:

```json
{
  "name": "PRM-DAT-AIO",
  "type": "Microsoft.Compute/virtualMachines",
  "location": "West Europe",
  "apiVersion": "2015-06-15",
  "dependsOn": [
    "[concat('Microsoft.Storage/storageAccounts/', 'prmdataiostorageaccount')]",
    "[concat('Microsoft.Network/networkInterfaces/', 'prm-dat-aio123')]"
  ],
  "tags": {
    "displayName": "VirtualMachine"
  },
  "properties": {
    "hardwareProfile": {
      "vmSize": "Standard_DS1"
    },
    "osProfile": {
      "computerName": "PRM-DAT-AIO",
      "adminUsername": "prmadmin",
      "adminPassword": "Mystrongpasswordhere9"
    },
    "storageProfile": {
      "imageReference": {
        "publisher": "MicrosoftWindowsServer",
        "offer": "WindowsServer",
        "sku": "2012-R2-Datacenter",
        "version": "latest"
      },
      "osDisk": {
        "name": "PRM-DAT-AIO",
        "vhd": {
          "uri": "https://prmdataiostorageaccount.blob.core.windows.net/vhds/PRM-DAT-AIO.vhd"
        },
        "caching": "ReadWrite",
        "createOption": "FromImage"
      }
    },
    "networkProfile": {
      "networkInterfaces": [
        {
          "id": "/subscriptions/.../resourceGroups/PRM-DAT/providers/Microsoft.Network/networkInterfaces/prm-dat-aio123"
        }
      ]
    }
  }
}
```

Running the deployment now should result in a complete working VM which you can remote into.

The final step before going any further is to tear down the PRM-DAT resource group, then run the deployment again and check that a fully-working PRM-DAT-AIO VM is created from scratch. I added a Destroy PRM-DAT.ps1 file to my DeploymentScripts project with the following code:

```powershell
# Authentication details are abstracted away in a PS module
Set-AzureRmAuthenticationForMsdnEnterprise

# Deletes all resources in the resource group!!
Remove-AzureRmResourceGroup -Name PRM-DAT -Force
```

Refactor the Template with Parameters, Variables and Functions

It's now time to make the template reusable by refactoring all the hard-coded values. Each situation is likely to vary but in this case my specific requirements are:

- The template will always create a Windows Server 2012 R2 Datacenter VM, but obviously the name of the VM needs to be specified.

- I want to restrict my VMs to small sizes to keep costs down.

- I'm happy for the VM username to always be the same so this can be hard-coded in the template, whilst I want to pass the password in as a parameter.

- I'm adding my VMs to an existing virtual network in a different resource group and I'm making a conscious decision to hard-code these details in.

- I want the names of all the different resources to be generated using the VM name as the base.

These requirements gave rise to the following parameters, variables and a resource function:

- nodeName parameter -- this is used, via variable conversions, throughout the template to provide consistent naming of objects. My node names tend to follow the format used in this post and that's the only format I've tested. Beware if your node names are different, as ARM enforces naming rules (for example, storage account names must be 3 to 24 characters long and contain only lowercase letters and numbers).

- nodeNameToUpper variable -- used where I want to ensure upper case for my own naming convention preferences.

- nodeNameToLower variable -- used where lower case is a requirement of ARM, e.g. where nodeName forms part of a DNS entry.

- vmSize parameter -- restricts the template to creating VMs that are not going to burn Azure credits too quickly and which use standard storage.

- storageAccountName variable -- creates a name for the storage account that is based on a lower case nodeName.

- networkInterfaceName variable -- creates a name for the network interface based on a lower case nodeName with a number suffix.

- virtualNetworkSubnetName variable -- used to reference the existing virtual network subnet, which lives in a different resource group and so requires a bit of construction work.

- vmAdminUsername variable -- creates a username for the VM based on the nodeName. You'll probably want to change this.

- vmAdminPassword parameter -- the password for the VM, passed in as a secure string.

- resourceGroup().location resource function -- a neat way to avoid hard-coding the location into the template.
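The parameters and variables listed above might be declared along the following lines. This is a minimal sketch rather than the actual template: the allowedValues list for vmSize, the 'storageaccount' and '123' suffixes (chosen to reproduce the hard-coded names used earlier) and the vmAdminUsername expression are all illustrative assumptions. The storage account expression strips hyphens because storage account names may only contain lowercase letters and numbers, and it still has to stay within the 24-character limit for longer node names.

```json
{
  "parameters": {
    "nodeName": {
      "type": "string",
      "metadata": { "description": "Base name for the VM and its resources, for example PRM-DAT-AIO" }
    },
    "vmSize": {
      "type": "string",
      "defaultValue": "Standard_DS1",
      "allowedValues": [ "Standard_A1", "Standard_D1", "Standard_DS1" ]
    },
    "vmAdminPassword": {
      "type": "securestring"
    }
  },
  "variables": {
    "nodeNameToUpper": "[toUpper(parameters('nodeName'))]",
    "nodeNameToLower": "[toLower(parameters('nodeName'))]",
    "storageAccountName": "[concat(replace(variables('nodeNameToLower'), '-', ''), 'storageaccount')]",
    "networkInterfaceName": "[concat(variables('nodeNameToLower'), '123')]",
    "virtualNetworkSubnetName": "[concat(resourceId('PRM-COMMON', 'Microsoft.Network/virtualNetworks', 'prmvirtualnetwork'), '/subnets/default')]",
    "vmAdminUsername": "[concat(variables('nodeNameToLower'), 'admin')]"
  }
}
```

With declarations like these, a deployment only needs to supply nodeName, vmSize and vmAdminPassword, for example through the -TemplateParameterObject hashtable of New-AzureRmResourceGroupDeployment in the same way as the workflow script later in this post.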

Of course, these refactorings shouldn't affect the functioning of the template, and tearing down the PRM-DAT resource group and recreating it should result in the same resources being created.
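To show how the pieces fit together, here is a sketch of the virtual machine resource from earlier rewritten against the parameter and variable names in the list above. It assumes those parameters and variables are declared in the template; it illustrates the approach and is not a copy of the finished template.

```json
{
  "name": "[variables('nodeNameToUpper')]",
  "type": "Microsoft.Compute/virtualMachines",
  "location": "[resourceGroup().location]",
  "apiVersion": "2015-06-15",
  "dependsOn": [
    "[resourceId('Microsoft.Storage/storageAccounts', variables('storageAccountName'))]",
    "[resourceId('Microsoft.Network/networkInterfaces', variables('networkInterfaceName'))]"
  ],
  "tags": { "displayName": "VirtualMachine" },
  "properties": {
    "hardwareProfile": { "vmSize": "[parameters('vmSize')]" },
    "osProfile": {
      "computerName": "[variables('nodeNameToUpper')]",
      "adminUsername": "[variables('vmAdminUsername')]",
      "adminPassword": "[parameters('vmAdminPassword')]"
    },
    "storageProfile": {
      "imageReference": {
        "publisher": "MicrosoftWindowsServer",
        "offer": "WindowsServer",
        "sku": "2012-R2-Datacenter",
        "version": "latest"
      },
      "osDisk": {
        "name": "[variables('nodeNameToUpper')]",
        "vhd": {
          "uri": "[concat('https://', variables('storageAccountName'), '.blob.core.windows.net/vhds/', variables('nodeNameToUpper'), '.vhd')]"
        },
        "caching": "ReadWrite",
        "createOption": "FromImage"
      }
    },
    "networkProfile": {
      "networkInterfaces": [
        { "id": "[resourceId('Microsoft.Network/networkInterfaces', variables('networkInterfaceName'))]" }
      ]
    }
  }
}
```

Bear in mind that Windows computer names are limited to 15 characters, so nodeName values longer than that would fail at the osProfile step even if every other resource name is valid.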

What about Environments where Multiple VMs are Required?

The work so far has been aimed at creating just one VM, but what if two or more VMs are needed? It's a very good question and there are at least two answers. The first involves using the template as-is and calling New-AzureRmResourceGroupDeployment in a PowerShell Foreach loop. I've illustrated this technique in Create PRM-DQA.ps1 in the DeploymentScripts project. Whilst this works very nicely, the VMs are created in series rather than in parallel and, well, who wants to wait? My first thought for creating VMs in parallel was to extend the Foreach loop idea with the -parallel switch in a PowerShell workflow. The code I was hoping would work looks something like this:

```powershell
workflow Create-WindowsServer2012R2Datacenter
{
    param ([string[]] $vms, [string] $resourceGroupName, [string] $solutionRoot)

    Foreach -parallel ($vm in $vms)
    {
        # Authentication details are abstracted away in a PS module
        Set-AzureRmAuthenticationForMsdnEnterprise

        New-AzureRmResourceGroupDeployment -ResourceGroupName $resourceGroupName `
            -TemplateFile "$solutionRoot\..\DeploymentTemplates\WindowsServer2012R2Datacenter.json" `
            -Force `
            -Verbose `
            -Mode Incremental `
            -TemplateParameterObject @{ nodeName = $vm; vmSize = 'Standard_DS1'; vmAdminPassword = 'MySuperSecurePassword' }
    }
}

$resourceGroupName = 'PRM-PRD'
New-AzureRmResourceGroup -Name $resourceGroupName -Location westeurope -Force

$virtualmachines = "$resourceGroupName-SQL", "$resourceGroupName-IIS"
Create-WindowsServer2012R2Datacenter -vms $virtualmachines -resourceGroupName $resourceGroupName -solutionRoot $PSScriptRoot
```

Unfortunately it seems like this idea is a dud -- see here for the details. Instead, the technique appears to be to use the copy element and the copyIndex() and length() functions of ARM templates, as documented here. This necessitates a minor rewrite of the template to pass in and use an array of node names; however, there are complications where I've used variables to construct resource names. At the time of publishing this post I'm working through these details -- keep an eye on my GitHub repository for progress.
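To give a flavour of the copy approach, here is a minimal sketch that creates one public IP address per entry in an array of node names. The nodeNames parameter and the publicIPAddressLoop name are hypothetical, and only one resource type is shown. Because copyIndex() refers to the current iteration of a resource's copy loop, a single-valued variable such as nodeNameToLower can no longer hold a per-VM name; the names have to be built inline from the array element instead, which is the kind of complication mentioned above.

```json
{
  "parameters": {
    "nodeNames": {
      "type": "array",
      "metadata": { "description": "One entry per VM, for example PRM-PRD-SQL and PRM-PRD-IIS" }
    }
  },
  "resources": [
    {
      "name": "[toUpper(parameters('nodeNames')[copyIndex()])]",
      "type": "Microsoft.Network/publicIPAddresses",
      "location": "[resourceGroup().location]",
      "apiVersion": "2015-06-15",
      "copy": {
        "name": "publicIPAddressLoop",
        "count": "[length(parameters('nodeNames'))]"
      },
      "tags": { "displayName": "PublicIPAddress" },
      "properties": {
        "publicIPAllocationMethod": "Dynamic",
        "dnsSettings": {
          "domainNameLabel": "[toLower(parameters('nodeNames')[copyIndex()])]"
        }
      }
    }
  ]
}
```

A network interface loop built the same way could list publicIPAddressLoop in its dependsOn to wait for every address in the loop, or use resourceId() with the same copyIndex() expression to wait only for its own address.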

Wrap-Up

Before actually wrapping up I'll make a quick mention of the template's outputs node. A handy use for this is debugging, for example where you are trying to construct a complicated variable and want to check its value. I've left an example in the template to illustrate.
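For reference, an outputs node used for this kind of debugging could look something like the sketch below. The output names are hypothetical and the example assumes the storageAccountName and virtualNetworkSubnetName variables described earlier; it is not the example left in the template.

```json
{
  "outputs": {
    "storageAccountName": {
      "type": "string",
      "value": "[variables('storageAccountName')]"
    },
    "virtualNetworkSubnetName": {
      "type": "string",
      "value": "[variables('virtualNetworkSubnetName')]"
    }
  }
}
```

The evaluated values are returned in the Outputs property of the object that New-AzureRmResourceGroupDeployment returns, which PowerShell displays when the cmdlet finishes, so you can check a constructed string without opening the portal.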