Deploy a Dockerized Application to Azure Kubernetes Service using Azure YAML Pipelines 4 – Running a Dockerized Application Locally

This is the fourth post in a series where I'm taking a fresh look at how to deploy a dockerized application to Azure Kubernetes Service (AKS) using Azure Pipelines after having previously blogged about this in 2018. The list of posts in this series is as follows:

- Getting Started

- Terraform Development Experience

- Terraform Deployment Pipeline

- Running a Dockerized Application Locally (this post)

- Application Deployment Pipelines

- Telemetry and Diagnostics

In this post I explain the components of the sample application I wrote to accompany this (and the previous) blog series and how to run the application locally. If you want to follow along you can clone / fork my repo here, and if you haven't already done so please take a look at the first post to understand the background, what this series hopes to cover and the tools mentioned in this post. Additionally, this post assumes you have created the infrastructure—or at least the Azure SQL dev database—described in the previous Terraform posts.

MegaStore Application

The sample application is called MegaStore and is about as simple as it gets in terms of a functional application. It's a .NET Core 3.1 application and the idea is that a sales record (beers from breweries local to me if you are interested) is created in the presentation tier which eventually gets persisted to a database via a message queue. The core components are:

- MegaStore.Web: a skeleton ASP.NET Core application that creates a ‘sales' record every time the home page is accessed and places it on a message queue.

- NATS message queue: this is an instance of the nats image on Docker Hub using the default configuration.

- MegaStore.SaveSaleHandler: a .NET Core console application that monitors the NATS message queue for new records and saves them to an Azure SQL database using EF Core.
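To make the flow between the three components concrete, here is a minimal Python sketch of the same publish-and-consume pattern. It is an illustration only and not the MegaStore code: an in-memory queue stands in for NATS, a plain list stands in for the Azure SQL table, and every name in it is hypothetical.

```python
import queue
import threading
from datetime import datetime, timezone


def create_sale(sale_queue, description):
    """Conceptually what the web tier does: publish a sale and return at once."""
    sale_queue.put({"created_on": datetime.now(timezone.utc), "description": description})


def save_sale_handler(sale_queue, saved_sales):
    """Conceptually what the handler does: wait for messages and persist them."""
    while True:
        sale = sale_queue.get()
        if sale is None:  # sentinel used only by this sketch to stop the worker
            break
        saved_sales.append(sale)  # stand-in for the database insert


def run_demo(descriptions):
    sale_queue = queue.Queue()  # stand-in for the NATS message queue
    saved_sales = []            # stand-in for the Sale table
    worker = threading.Thread(target=save_sale_handler, args=(sale_queue, saved_sales))
    worker.start()
    for description in descriptions:
        create_sale(sale_queue, description)
    sale_queue.put(None)
    worker.join()
    return [sale["description"] for sale in saved_sales]
```

The point of the pattern is that the web tier never waits for the database: it hands the record to the queue and the handler persists it independently.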

When running locally in Visual Studio 2019 these application components work together using Docker Compose, which is a separate project in the Visual Studio solution. There are two configuration files in use which get merged together:

- docker-compose.yml: contains the configuration for megastore.web and megastore.savesalehandler which is common to running the application both locally and in the deployment pipeline.

- docker-compose.override.yml: contains additional configuration that is only needed locally.
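The merge of the two files can be pictured as a recursive dictionary merge. The Python sketch below is a simplification under stated assumptions: the two fragments are hypothetical (the real files in the repo contain more), and real Docker Compose applies extra rules for multi-value options such as ports and volumes that are not modelled here.

```python
def merge_compose(base, override):
    """Recursively merge two parsed Compose documents held as plain dicts.

    Simplified: nested mappings are merged key by key, and any other value
    in the override replaces the value from the base file.
    """
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_compose(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical fragments standing in for docker-compose.yml and
# docker-compose.override.yml.
base = {"services": {"megastore.web": {"build": {"context": "."}}}}
override = {
    "services": {
        "megastore.web": {"environment": {"ASPNETCORE_ENVIRONMENT": "Development"}}
    }
}
merged = merge_compose(base, override)
```

After the merge, the megastore.web service carries both the build settings from the base fragment and the environment settings from the override fragment.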

There are a few steps you'll need to complete to run MegaStore locally.

Azure SQL dev Database

First configure the Azure SQL dev database created in the previous post. Using SQL Server Management Studio (SSMS), log in to Azure SQL, where Server name will be something like yourservername-asql.database.windows.net and Login and Password are the values supplied to the asql_administrator_login_name and asql_administrator_login_password Terraform variables. Once logged in, create the following objects using the files in the repo's sql folder (use Ctrl+Shift+M in SSMS to show the Template Parameters dialog to add the dev suffix):

- A SQL login called sales_user_dev based on create-login-template.sql. Make a note of the password.

- In the dev database a user called sales_user and a table called Sale based on configure-database-template.sql.

Note: if you are having problems logging in to Azure SQL from SSMS make sure you have correctly set a firewall rule to allow your local workstation to connect.

Docker Environment File

Next create a Docker environment file to store the database connection string. In Visual Studio create a file called db-credentials.env in the docker-compose project. All on one line add the following connection string, substituting in your own values for the server name and sales_user_dev password:

DB_CONNECTION_STRING=Server=tcp:yourservername.database.windows.net,1433;Initial Catalog=dev;Persist Security Info=False;User ID=sales_user_dev;Password=yourpassword;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;

Note: since this file contains sensitive data it's important that you don't add it to version control. The .gitignore file that's part of the repo is configured to ignore db-credentials.env.
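Because the whole connection string has to sit on one line, a quick sanity check can catch a missing key before you press F5. The following Python sketch is not part of the repo; the function names and the list of required keys are assumptions made for illustration, and the simple split would not cope with a password that itself contains a semicolon.

```python
REQUIRED_KEYS = ("Server", "Initial Catalog", "User ID", "Password")


def parse_connection_string(value):
    """Split 'Key=Value;Key=Value;' into a dict (splits on the first '=' only)."""
    parts = {}
    for segment in value.strip().split(";"):
        if not segment:
            continue
        key, _, val = segment.partition("=")
        parts[key.strip()] = val.strip()
    return parts


def check_env_line(line):
    """Return (parsed parts, missing required keys) for one line of the env file."""
    name, separator, value = line.partition("=")
    if name != "DB_CONNECTION_STRING" or not separator:
        raise ValueError("expected a line starting with DB_CONNECTION_STRING=")
    parts = parse_connection_string(value)
    missing = [key for key in REQUIRED_KEYS if key not in parts]
    return parts, missing
```

An empty list of missing keys only tells you the line is well formed, not that the credentials are valid.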

Application Insights Key

In order to collect Application Insights telemetry from a locally running MegaStore you'll need to edit docker-compose.override.yml to contain the instrumentation key for the dev instance of the Application Insights resource that was created in the previous post. You can find this in the Azure Portal in the Overview pane of the Application Insights resource:

I'll write more about Application Insights in a later post but in the meantime if you want to know more see this post from my previous 2018 series. It's largely the same with a few code changes for newer ways of doing things with updated NuGet packages.

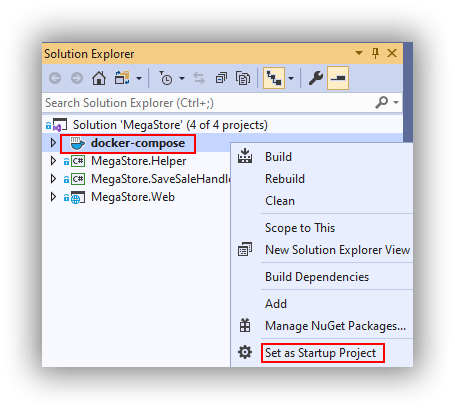

Set docker-compose as Startup

The startup project in Visual Studio needs to be set to docker-compose by right-clicking docker-compose in the Solution Explorer and selecting Set as Startup Project:

Up and Running

You should now be able to run MegaStore using F5, which should open a localhost web page (with a port number) in your browser. Docker Desktop needs to be running, although I've noticed that newer versions of Visual Studio offer to start it automatically if required. Notice in Visual Studio the handy Containers window that gives some insight into what's happening:

In order to establish everything is working open SSMS and run select-from-sales.sql (in the sql folder in the repo) against the dev database. You should see a new ‘beer' sales record. If you want to create more records you can keep reloading the web page in your browser or run the generate-web-traffic.ps1 PowerShell snippet that's in the repo's pipeline folder making sure that the URL is something like http://localhost:32768/ (your port number will likely be different).
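If you would rather not keep reloading the page by hand, the idea of a traffic generator is simple enough to sketch. The Python below is not a port of generate-web-traffic.ps1 (its contents are not reproduced here); it is a hypothetical equivalent that requests a URL a fixed number of times, and the port in the usage comment is just the example value from above.

```python
import time
import urllib.request


def fetch_status(url):
    """Request the page once and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status


def generate_traffic(url, count, delay_seconds=1.0, fetch=fetch_status):
    """Hit the home page `count` times; each hit should create one sale record."""
    statuses = []
    for _ in range(count):
        statuses.append(fetch(url))
        time.sleep(delay_seconds)
    return statuses


# Example usage (assumes MegaStore is running locally on this port):
# generate_traffic("http://localhost:32768/", count=10)
```

The fetch parameter exists only so the loop can be exercised without a running site.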

To view Application Insights telemetry (from the Azure Portal) whilst running MegaStore locally you may need to be aware of services running on your network that could cause interference. In my case I could run Live Metrics and see activity in most of the graphs, however I initially couldn't use the Search feature to see trace and request telemetry (the screenshot is what I was expecting to see):

I initially thought this might be a firewall issue but it wasn't, and instead it turned out to be the pi-hole ad blocking service I have running on my network. It's easy to disable pi-hole for a few minutes or you can figure out which URLs need whitelisting. The bigger picture though is that if you don't see telemetry, particularly in a corporate scenario, you may have to do some investigation.

That's it for now! Next time we look at deploying MegaStore to AKS using Azure Pipelines.

Cheers -- Graham

Deploy a Dockerized ASP.NET Core Application to Azure Kubernetes Service Using a VSTS CI/CD Pipeline: Part 3

In this blog post series I'm working my way through the process of deploying and running an ASP.NET Core application on Microsoft's hosted Kubernetes environment. Formerly known as Azure Container Service (AKS), it has recently been renamed Azure Kubernetes Service, which is why the title of my blog series has changed slightly. In previous posts in this series I covered the key configuration elements both on a developer workstation and in Azure and VSTS and then how to actually deploy a simple ASP.NET Core application to AKS using VSTS. This is the full series of posts to date:

In this post I introduce MegaStore (just a fictional name), a more complicated ASP.NET Core application (in the sense that it has more moving parts), and I show how to deploy MegaStore to an AKS cluster using VSTS. Future posts will use MegaStore as I work through more advanced Kubernetes concepts. To follow along with this post you will need to have completed the following, variously from parts 1 and 2:

Introducing MegaStore

MegaStore was inspired by Elton Stoneman's evolution of NerdDinner for his excellent book Docker on Windows, which I have read and can thoroughly recommend. The concept is a sales application that rather than saving a ‘sale' directly to a database, instead adds it to a message queue. A handler monitors the queue and pulls new messages for saving to an Azure SQL Database. The main components are as follows:

- MegaStore.Web—an ASP.NET Core MVC application with a CreateSale method in the HomeController that gets called every time there is a hit on the home page.

- NATS message queue—to which a new sale is published.

- MegaStore.SaveSalehandler—a .NET Core console application that monitors the NATS message queue and saves new messages.

- Azure SQL Database—I recently heard Brendan Burns comment in a podcast that hardly anybody designing a new cloud application should be managing storage themselves. I agree and for simplicity I have chosen to use Azure SQL Database for all my environments including development.

You can clone MegaStore from my GitHub repository here.

In order to run the complete application you will first need to create an Azure SQL Database. The easiest way is probably to create a new database (also creates a server at the same time) via the portal and manage with SQL Server Management Studio. The high-level procedure is as follows:

- In the portal create a new database called MegaStoreDev and at the same time create a new server (name needs to be unique). To keep costs low I start with the Basic configuration knowing I can scale up and down as required.

- Still in the portal add a client IP to the firewall so you can connect from your development machine.

- Connect to the server/database in SSMS and create a new table called dbo.Sale:

    SET ANSI_NULLS ON
    GO
    SET QUOTED_IDENTIFIER ON
    GO
    CREATE TABLE [dbo].[Sale](
        [SaleID] [bigint] IDENTITY(1001,1) NOT NULL,
        [CreatedOn] [datetime] NOT NULL,
        [Description] [varchar](100) NOT NULL
    ) ON [PRIMARY]
    GO

- In Security > Logins create a New Login called sales_user_dev, noting the password.

- In Databases > MegaStoreDev > Security > Users create a New User called sales_user mapped to the sales_user_dev login and with the db_owner role.

In order to avoid exposing secrets via GitHub the credentials to access the database are stored in a file called db-credentials.env which I've not committed to the repo. You'll need to create this file in the docker-compose project in your VS solution and add the following, modified for your server name and database credentials:

DB_CONNECTION_STRING=Server=tcp:megastore.database.windows.net,1433;Initial Catalog=MegaStoreDev;Persist Security Info=False;User ID=sales_user_dev;Password=mystrongpwd;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;

If you are using version control make sure you exclude db-credentials.env from being committed.

With docker-compose set as the startup project, and Docker for Windows running and set to Linux containers, you should now be able to run the application. If everything is working you should be able to see sales being created in the database.

To understand how the components are configured you need to look at docker-compose.yml and docker-compose.override.yml. Image building is handled by docker-compose.yml, which can't have anything else in it, otherwise VSTS complains if you want to use the compose file to build the images. The configuration of the components is specified in docker-compose.override.yml, which gets merged with docker-compose.yml at run time. Notice the k8s folder. This contains the configuration files needed to deploy the application to AKS.

By now you may be wondering if MegaStore should be running locally under Kubernetes rather than in Docker via docker-compose. It's a good question and the answer is probably yes. However, at the time of writing there isn't a great story to tell about how Visual Studio integrates with Kubernetes on a developer workstation (i.e. to allow debugging as is possible with Docker), so I'm purposely ignoring this for the time being. This will change over time though, and I will cover this when I think there is more to tell.

Create Azure SQL Databases for Different Release Pipeline Environments

I'll be creating a release pipeline consisting of DAT and PRD environments. I explain more about these below, but to support these environments you'll need to create two new databases: MegaStoreDat and MegaStorePrd. You can do this either through the Azure portal or through SQL Server Management Studio, but be aware that if you use SSMS you'll end up on the standard pricing tier rather than the cheaper basic tier. Either way, you then use SQL Server Management Studio to create dbo.Sale and set up security as described above, ensuring that you create different logins for the different environments.

Create a Build in VSTS

Once everything is working locally the next step is to switch over to VSTS and create a build. I'm assuming that you've cloned my GitHub repo to your own GitHub account however if you are doing it another way (your repo is in VSTS for example) you'll need to amend accordingly.

- Create a new Build definition in VSTS. The first thing you get asked is to select a repository—link to your GitHub account and select the MegaStore repo:

- When you get asked to Choose a template go for the Empty process option.

- Rename the build to something like MegaStore and under Agent queue select your private build agent.

- In the Triggers tab check Enable continuous integration.

- In the Options tab set Build number format to $(Date:yyyyMMdd)$(Rev:.rr), or something meaningful to you based on the available options described here.

- In the Tasks tab use the + icon to add two Docker Compose tasks and a Publish Build Artifacts task. Note that when configuring the tasks below only the required entries and changes to defaults are listed.

- Configure the first Docker Compose task as follows:

- Display name = Build service images

- Action = Build service images

- Azure subscription = [name of existing Azure Resource Manager endpoint]

- Azure Container Registry = [name of existing Azure Container Registry]

- Additional Image Tags = $(Build.BuildNumber)

- Configure the second Docker Compose task as follows:

- Display name = Push service images

- Azure subscription = [name of existing Azure Resource Manager endpoint]

- Azure Container Registry = [name of existing Azure Container Registry]

- Action = Push service images

- Additional Image Tags = $(Build.BuildNumber)

- Configure the Publish Build Artifacts task as follows:

- Display name = Publish k8s config

- Path to publish = k8s

- Artifact name = k8s-config

- Artifact publish location = Visual Studio Team Services/TFS

You should now be able to test the build by committing a minor change to the source code. The build should pass and if you look in the Repositories section of your Container Registry you should see megastoreweb and megastoresavesalehandler repositories with newly created images.
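To see what the build number format from the Options tab produces, and how it ends up as an image tag, here is a small Python sketch. It only mimics the expansion of $(Date:yyyyMMdd)$(Rev:.rr) for illustration; the function names are hypothetical, and the registry name prmcr is simply the example used later in this post.

```python
from datetime import date


def build_number(build_date, revision):
    """Mimic $(Date:yyyyMMdd)$(Rev:.rr): date digits, a dot, two-digit revision."""
    return f"{build_date:%Y%m%d}.{revision:02d}"


def image_reference(acr_name, repository, tag):
    """Compose the full image name that ends up in the Container Registry."""
    return f"{acr_name}.azurecr.io/{repository}:{tag}"


number = build_number(date(2018, 6, 20), 1)
web_image = image_reference("prmcr", "megastoreweb", number)
```

With these inputs the build number is 20180620.01 and the web image is prmcr.azurecr.io/megastoreweb:20180620.01, which is the shape of tag the release tasks refer to later.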

Create a DAT Release Environment in VSTS

With the build working it's now time to create the release pipeline, starting with an environment I call DAT which is where automated acceptance testing might take place. At this point there is a style choice to be made for creating Kubernetes Secrets and ConfigMaps: they can be configured from files or from literal values. I've gone down the literal values route since the files route needs to specify the namespace, and this would require either a separate file for each namespace (creating a DRY problem) or editing the config files as part of the release pipeline. To me the literal values technique seems cleaner. Either way, as far as I can tell there is no way to update a Secret or ConfigMap via a VSTS Deploy to Kubernetes task, as it's a two-step process and the task can't handle this. The workaround is a task to delete the Secret or ConfigMap followed by a task to create it. You'll see that I've also chosen to explicitly create the image pull secret. This is partly because of a bug in the Deploy to Kubernetes task, but it also avoids having to repeat a lot of the Secrets configuration in Deploy to Kubernetes tasks that deploy service or deployment configurations.

- Create a new release definition in VSTS, electing to start with an empty process and rename it MegaStore.

- In the Pipeline tab click on Add artifact and link the build that was just created, which in turn makes the k8s-config artifact (published by the Publish Build Artifacts task above) available in the release.

- Click on the lightning bolt to enable the Continuous deployment trigger.

- Still in the Pipeline tab rename Environment 1 to DAT, with the overall changes resulting in something like this:

- In the Tasks tab click on Agent phase and under Agent queue select your private build agent.

- In the Variables tab create the following variables with Release Scope:

- AcrAuthenticationSecretName = prmcrauth (or the name you are using for imagePullSecrets in the Kubernetes config files)

- AcrName = [unique name of your Azure Container Registry, e.g. mine is prmcr]

- AcrPassword = [password of your Azure Container Registry from Settings > Access keys], use the padlock to make it a secret

- In the Variables tab create the following variables with DAT Scope:

- DatDbConn = Server=tcp:megastore.database.windows.net,1433;Initial Catalog=MegaStoreDat;Persist Security Info=False;User ID=sales_user;Password=mystrongpwd;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30; (you will need to alter this connection string for your own Azure SQL server and database)

- DatEnvironment = dat (i.e. in lower case)

- In the Tasks tab add 15 Deploy to Kubernetes tasks and disable all but the first one so the release can be tested after each task is configured. Note that when configuring the tasks below only the required entries and changes to defaults are listed.

- Configure the first Deploy to Kubernetes task as follows:

- Display name = Delete image pull secret

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = delete

- Arguments = secret $(AcrAuthenticationSecretName)

- Control Options > Continue on error = checked

- Configure the second Deploy to Kubernetes task as follows:

- Display name = Create image pull secret

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = create

- Arguments = secret docker-registry $(AcrAuthenticationSecretName) --namespace=$(DatEnvironment) --docker-server=$(AcrName).azurecr.io --docker-username=$(AcrName) --docker-password=$(AcrPassword) --docker-email=fred@bloggs.com (note that the email address can be anything you like)

- Configure the third Deploy to Kubernetes task as follows:

- Display name = Delete ASPNETCORE_ENVIRONMENT config map

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = delete

- Arguments = configmap aspnetcore.env

- Control Options > Continue on error = checked

- Configure the fourth Deploy to Kubernetes task as follows:

- Display name = Create ASPNETCORE_ENVIRONMENT config map

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = create

- Arguments = configmap aspnetcore.env --from-literal=ASPNETCORE_ENVIRONMENT=$(DatEnvironment)

- Configure the fifth Deploy to Kubernetes task as follows:

- Display name = Delete DB_CONNECTION_STRING secret

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = delete

- Arguments = secret db.connection

- Control Options > Continue on error = checked

- Configure the sixth Deploy to Kubernetes task as follows:

- Display name = Create DB_CONNECTION_STRING secret

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = create

- Arguments = secret generic db.connection --from-literal=DB_CONNECTION_STRING="$(DatDbConn)"

- Configure the seventh Deploy to Kubernetes task as follows:

- Display name = Delete MESSAGE_QUEUE_URL config map

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = delete

- Arguments = configmap message.queue

- Control Options > Continue on error = checked

- Configure the eighth Deploy to Kubernetes task as follows:

- Display name = Create MESSAGE_QUEUE_URL config map

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = create

- Arguments = configmap message.queue --from-literal=MESSAGE_QUEUE_URL=nats://message-queue-service.$(DatEnvironment):4222

- Configure the ninth Deploy to Kubernetes task as follows:

- Display name = Create message-queue service

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = apply

- Use Configuration files = checked

- Configuration File = $(System.DefaultWorkingDirectory)/_MegaStore/k8s-config/message-queue-service.yaml

- Configure the tenth Deploy to Kubernetes task as follows:

- Display name = Create megastore-web service

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = apply

- Use Configuration files = checked

- Configuration File = $(System.DefaultWorkingDirectory)/_MegaStore/k8s-config/megastore-web-service.yaml

- Configure the eleventh Deploy to Kubernetes task as follows:

- Display name = Create message-queue deployment

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = apply

- Use Configuration files = checked

- Configuration File = $(System.DefaultWorkingDirectory)/_MegaStore/k8s-config/message-queue-deployment.yaml

- Configure the twelfth Deploy to Kubernetes task as follows:

- Display name = Create megastore-web deployment

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = apply

- Use Configuration files = checked

- Configuration File = $(System.DefaultWorkingDirectory)/_MegaStore/k8s-config/megastore-web-deployment.yaml

- Configure the thirteenth Deploy to Kubernetes task as follows:

- Display name = Update megastore-web with latest image

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = set

- Arguments = image deployment/megastore-web-deployment megastoreweb=$(AcrName).azurecr.io/megastoreweb:$(Build.BuildNumber)

- Configure the fourteenth Deploy to Kubernetes task as follows:

- Display name = Create megastore-savesalehandler deployment

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = apply

- Use Configuration files = checked

- Configuration File = $(System.DefaultWorkingDirectory)/_MegaStore/k8s-config/megastore-savesalehandler-deployment.yaml

- Configure the fifteenth Deploy to Kubernetes task as follows:

- Display name = Update megastore-savesalehandler with latest image

- Kubernetes Service Connection = [name of Kubernetes Service Connection endpoint]

- Namespace = $(DatEnvironment)

- Command = set

- Arguments = image deployment/megastore-savesalehandler-deployment megastoresavesalehandler=$(AcrName).azurecr.io/megastoresavesalehandler:$(Build.BuildNumber)

That's a heck of a lot of configuration, so what exactly have we built?

The first eight tasks deal with the configuration that supports the services and deployments:

- The image pull secret stores the credentials to the Azure Container Registry so that deployments that need to pull images from the ACR can authenticate.

- The ASPNETCORE_ENVIRONMENT config map sets the environment for ASP.NET Core. I don't do anything with this but it could be handy for troubleshooting purposes.

- The DB_CONNECTION_STRING secret stores the connection string to the Azure SQL database and is used by the megastore-savesalehandler-deployment.yaml configuration.

- The MESSAGE_QUEUE_URL config map stores the URL to the NATS message queue and is used by the megastore-web-deployment.yaml and megastore-savesalehandler-deployment.yaml configurations.

As mentioned above, a limitation of the VSTS Deploy to Kubernetes task means that in order to update Secrets and ConfigMaps they need to be deleted first and then created again. This does mean that an error is raised the first time a delete task runs (there is nothing to delete yet), but the Continue on error option ensures that the release doesn't fail.
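The delete-then-create pairing is easier to see outside the task UI. The Python sketch below builds the equivalent kubectl command lines for one ConfigMap or generic Secret and runs them in order, tolerating a failed delete in the same spirit as Continue on error. It is a hypothetical helper, not something the VSTS task runs, and it assumes kubectl is installed and pointed at your cluster.

```python
import subprocess


def recreate(kind, name, namespace, literals, run=subprocess.run):
    """Delete and then create a ConfigMap or generic Secret from literal values.

    `kind` is "configmap" or "secret". The delete is allowed to fail (for
    example on the very first run), whereas a failed create raises an error.
    """
    create_kind = ["secret", "generic"] if kind == "secret" else ["configmap"]
    delete_cmd = ["kubectl", "delete", kind, name, f"--namespace={namespace}"]
    create_cmd = ["kubectl", "create", *create_kind, name, f"--namespace={namespace}"]
    create_cmd += [f"--from-literal={key}={value}" for key, value in literals.items()]
    run(delete_cmd, check=False)  # like "Continue on error = checked"
    run(create_cmd, check=True)
    return delete_cmd, create_cmd


# Example usage mirroring the MESSAGE_QUEUE_URL tasks (needs a real cluster):
# recreate("configmap", "message.queue", "dat",
#          {"MESSAGE_QUEUE_URL": "nats://message-queue-service.dat:4222"})
```

Passing the values as a list of arguments rather than one shell string means a connection string full of semicolons needs no extra quoting.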

The remaining seven tasks deal with the deployment and configuration of the components (other than the Azure SQL database) that make up the MegaStore application:

- The NATS message queue requires a service so other components can talk to it and a deployment that specifies the image to run.

- The MegaStore.Web front end requires a service so that it is exposed to the outside world and a deployment that specifies the image to run.

- The MegaStore.SaveSalehandler monitoring component only needs a deployment that specifies the image to run, as nothing connects to it directly.

If everything has been configured correctly then triggering a release should result in a megastore-web-service being created. You can check the deployment was successful by executing kubectl get services --namespace=dat to get the external IP address of the LoadBalancer which you can paste in to a browser to confirm that the ASP.NET Core website is running. On the backend, you can use SQL Server Management Studio to connect to the database and confirm that records are being created in dbo.Sale.
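If you want to pick the external IP out programmatically rather than by eye, kubectl can emit JSON when you add -o json to the get services command. The Python sketch below parses that shape of output; the sample document is hand-written for illustration and the IP address is a placeholder from a documentation range, not a real result.

```python
import json


def external_ips(services_json):
    """Map service name to its first load balancer IP, skipping services without one."""
    document = json.loads(services_json)
    result = {}
    for item in document.get("items", []):
        ingress = item.get("status", {}).get("loadBalancer", {}).get("ingress") or []
        ips = [entry["ip"] for entry in ingress if "ip" in entry]
        if ips:
            result[item["metadata"]["name"]] = ips[0]
    return result


# Hand-written sample in the shape produced by:
#   kubectl get services --namespace=dat -o json
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "megastore-web-service"},
         "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}},
        {"metadata": {"name": "message-queue-service"},
         "status": {"loadBalancer": {}}},
    ]
})
found = external_ips(SAMPLE)
```

A service whose external IP is still pending has no ingress entries yet, so it is simply absent from the result.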

If you are running into problems, you can run the Kubernetes Dashboard to find out what is failing. Typically it's deployments that fail, and navigating to Workloads > Deployments can highlight the failing deployment. You can find out what the error is from the New Replica Set panel by clicking on the Logs icon, which brings up a new browser tab with a command line style output of the error. If there is no error it displays any Console.WriteLine output. Very neat:

Create a PRD Release Environment in VSTS

With a DAT environment created we can now create other environments on the route to production. This could be whatever else is needed to test the application, however here I'm just going to create a production environment I'll call PRD. I described this process in my previous post so here I'll just list the high level process:

- Clone the DAT environment and rename it PRD.

- In the Variables tab rename the cloned DatDbConn and DatEnvironment variables (the ones with PRD scope) to PrdDbConn and PrdEnvironment and change their values accordingly.

- In the Tasks tab visit each task and change all references of $(DatDbConn) and $(DatEnvironment) to $(PrdDbConn) and $(PrdEnvironment). All Namespace fields will need changing, and many of the tasks that use the Arguments field will need attention.

- Trigger a build and check the deployment was successful by executing kubectl get services --namespace=prd to get the external IP address of the LoadBalancer which you can paste in to a browser to confirm that the ASP.NET Core website is running.
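The renaming in the cloned tasks is mechanical, which a few lines of Python can illustrate. This is only a sketch of the substitution you would otherwise make by hand in the task fields; the function name is hypothetical and nothing here talks to VSTS.

```python
import re


def retarget(text, source="Dat", target="Prd"):
    """Rewrite $(DatXxx) variable references to $(PrdXxx), leaving others alone."""
    pattern = r"\$\(" + re.escape(source) + r"(\w+)\)"
    return re.sub(pattern, lambda match: f"$({target}{match.group(1)})", text)


dat_arguments = (
    "configmap message.queue --from-literal=MESSAGE_QUEUE_URL="
    "nats://message-queue-service.$(DatEnvironment):4222"
)
prd_arguments = retarget(dat_arguments)
```

Release-scoped variables such as $(AcrName) do not start with the Dat prefix, so they pass through unchanged.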

Wrapping Up

Although the final result is a CI/CD pipeline that certainly works there are more tasks than I'm happy with due to the need to delete and then recreate Secrets and ConfigMaps and this also adds quite a bit of overhead to the time it takes to deploy to an environment. There's bound to be a more elegant way of doing this that either exists now and I just don't know about it or that will exist in the future. Do post in the comments if you have thoughts.

Although I'm three posts in I've barely scratched the surface of the different topics that I could cover, so plenty more to come in this series. Next time it will probably be around health and / or monitoring.

Cheers—Graham