Continuous Delivery with TFS: Track Technical Debt with SonarQube

So far in this blog post series on building continuous delivery pipelines with the TFS ecosystem, the focus on baking quality into the application has centred mainly on static code analysis, unit tests and automated web tests. But having no broken static code analysis rules and a full set of green tests isn't a guarantee that there aren't problems lurking in your codebase. On the contrary, you may well be unwittingly accumulating technical debt, and unless you go looking for it, chances are you won't find out that you have a technical debt problem until it starts to cause you major headaches.

For some years now the go-to tool for analysing technical debt has been SonarQube (formerly Sonar). However, SonarQube hails from the open source world and it hasn't exactly been a seamless fit in the C# and TFS world. That began to change around the time of Build 2015 with the announcement that Microsoft had joined forces with the makers of SonarQube to address the situation. The video from Build 2015 which tells the story is well worth watching. To coincide with this announcement the ALM Rangers published a SonarQube installation guide aimed at TFS users, and I used this guide while working out how SonarQube can be set up to work with our continuous delivery pipeline.

Two differences between the guide and my setup are worth noting. First, the guide mentions that it's possible to use integrated security with the jTDS driver that SonarQube uses to connect to SQL Server, but I struggled for several hours before throwing in the towel. Please share in the comments if you have had success in doing this. Second, the guide uses the all-in-one Brian Keller VM whereas I'm installing on distributed VMs.

Create New SonarQube Items

SonarQube requires a Java runtime and needs quite a bit of horsepower, so the recommendation is to run it on a dedicated server. You'll need to create the following:

- A new domain service account -- I created ALM\SONARQUBE.

- A new VM running in your cloud service -- I called mine ALMSONARQUBE. As always in a demo Azure environment there is a desire to preserve Azure credits, so I created mine as a Basic A4 running Windows Server 2012 R2. Ensure the server is joined to your domain and that you add ALM\SONARQUBE to the Local Administrators group.

Install SonarQube

The following steps should be performed on ALMSONARQUBE:

- Download and install a Java SE Runtime Environment appropriate to your VM's OS. There are myriad download options, which can be confusing to the untrained Java eye, but on the index page look out for the JRE download button.

- Download and unblock the latest version of SonarQube from the downloads page. There isn't a separate download for Windows -- the zip contains files that allow SonarQube to run on a variety of operating systems. Unzip the download to a temp location and copy the bin, conf and other folders to an installation folder. I chose to create C:\SonarQube\Main as the root for the bin, conf and other folders; however, this is slightly at odds with the ALM guide, which has a version folder under the main SonarQube folder. As this is my first time installing SonarQube I'm not sure how upgrades are handled, but my guess is that everything apart from the conf folder can be overwritten with a new version.

- At this point you can run C:\SonarQube\Main\bin\windows-x86-64\StartSonar.bat (you may have to shift right-click and Run as administrator) to start SonarQube against its internal database and browse to http://localhost:9000 on ALMSONARQUBE to confirm that the basic installation is working. To stop SonarQube simply close the command window opened by StartSonar.bat.
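If you'd rather not keep refreshing a browser while SonarQube starts up, a small script can poll the address instead. The following is a minimal Python sketch of my own, assuming Python is available on a machine that can reach ALMSONARQUBE (it isn't part of the SonarQube install); the function name `wait_for_http` and the timeouts are illustrative choices. It only tells you that the web server is answering, not that SonarQube has finished any database setup, so treat it as a convenience rather than a substitute for looking at the portal.

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Poll url until it returns a non-error HTTP status or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # urlopen raises HTTPError (an OSError subclass) for 4xx/5xx responses,
            # so reaching the return below means the server answered successfully.
            with urllib.request.urlopen(url, timeout=5):
                return True
        except (OSError, http.client.HTTPException):
            # Refused, reset or timed out: the server is probably still starting.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_http("http://localhost:9000")` run on ALMSONARQUBE returns True once the portal responds, or False after two minutes of trying.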

Confirm SQL Server Connectivity

If you are intending to connect to a remote instance of SQL Server I highly recommend confirming connectivity from ALMSONARQUBE as the next step:

- On the ALMSONARQUBE machine create a new text file somewhere handy and change its extension to .udl.

- Open this Data Link Properties file and you will be presented with the ability to make a connection to SQL Server via a dialog that will be familiar to most developers. Enter connection details that you know work and use Test Connection to confirm connectivity.

- Possible remedies if you don't have connectivity are:

- The domain firewall is on for either or both machines. Consider turning it off, as I do in my demo environment, or opening up port 1433 for SQL Server.

- SQL Server has not been configured for the TCP/IP protocol. Open Sql Server Configuration Manager [sic] and from SQL Server Network Configuration > Protocols for MSSQLSERVER enable the TCP/IP protocol. Note that you'll need to restart the SQL Server service.
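Where you want to separate a networking problem from a credentials problem, a plain TCP connection attempt to port 1433 is a useful first check: if it fails, the firewall or the TCP/IP protocol setting above is the likely culprit; if it succeeds but the UDL test fails, look at the login instead. Here is a minimal Python sketch of that idea. `can_reach_tcp` is a hypothetical helper name, and port 1433 assumes a default SQL Server instance listening on the standard port (named instances often use dynamic ports).

```python
import socket


def can_reach_tcp(host: str, port: int = 1433, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout_s."""
    try:
        # create_connection resolves the name and tries each address it returns.
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Refused (service or protocol off), filtered by a firewall (timeout),
        # or the host name could not be resolved.
        return False
```

For example, `can_reach_tcp("ALMTFSADMIN")` run on ALMSONARQUBE checks the path to the SQL Server instance used later in this post.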

Create a SonarQube Database

Carry out the following steps to create and configure a database:

- Create a new blank database on SQL Server 2008 or 2012 -- I called mine SonarQube. I created my database on the same instance of SQL Server that runs TFS and Release Management. That's fine in a demo environment, but in a production environment, where you may be using the complimentary SQL Server licence that comes with TFS, it may cause a licensing issue.

- SonarQube needs the database collation to be case-sensitive (CS) and accent-sensitive (AS). You can actually set this when you create the database but if it needs doing afterwards right-click the database in SSMS and choose Properties. On the Options page change the collation to SQL_Latin1_General_CP1_CS_AS.

- Still in SSMS, create a new SQL Server login from Security > Logins, ensuring that the Default language is English. Under User Mapping, grant the login access to the SonarQube database and make it a member of the db_owner role.

- On ALMSONARQUBE navigate to C:\SonarQube\Main and open sonar.properties from the conf folder in Notepad or similar. Make the following changes:

- Find and uncomment sonar.jdbc.username and sonar.jdbc.password and supply the credentials created in the step above.

- Find the Microsoft SQLServer 2008/2012 section and uncomment sonar.jdbc.url. Amend the connection string so it includes the name of the database server and the database. The final result should be something like sonar.jdbc.url=jdbc:jtds:sqlserver://ALMTFSADMIN/SonarQube;SelectMethod=Cursor.

- Now test connectivity by running StartSonar.bat, confirming that the database schema has been created and that browsing to http://localhost:9000 is still successful.
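If you find yourself editing properties files on more than one server, the uncomment-and-set edits above can be scripted. The following Python sketch is my own illustration, not anything shipped with SonarQube: `set_properties` handles only simple `key=value` lines (not the `:` separator or line continuations that Java properties files also allow), rewrites the first line for each key whether or not it is commented out, and appends keys it can't find. Note that sonar.properties contains several commented-out sonar.jdbc.url lines, so the one it uncomments may not be the one in the SQL Server section; the end result is still a single active sonar.jdbc.url. `jtds_url` simply builds the URL format shown above.

```python
import re

# Matches an optional leading '#', then a property key, then '='.
_KEY_LINE = re.compile(r"\s*#?\s*([\w.]+)\s*=")


def jtds_url(server: str, database: str) -> str:
    """Build a jTDS SQL Server URL in the form used in this post."""
    return f"jdbc:jtds:sqlserver://{server}/{database};SelectMethod=Cursor"


def set_properties(text: str, values: dict[str, str]) -> str:
    """Uncomment (if necessary) and set each key in simple key=value properties text.

    For each key only the first matching line is rewritten; keys that do not
    appear at all are appended to the end.
    """
    lines = text.splitlines()
    remaining = dict(values)
    for i, line in enumerate(lines):
        match = _KEY_LINE.match(line)
        if match and match.group(1) in remaining:
            key = match.group(1)
            lines[i] = f"{key}={remaining.pop(key)}"
    lines.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"
```

For example, reading sonar.properties, passing its text through `set_properties` with the three sonar.jdbc values (using `jtds_url("ALMTFSADMIN", "SonarQube")` for the URL) and writing it back, after keeping a copy of the original, reproduces the manual edits.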

Run SonarQube as a Service

The next piece of the installation is to configure SonarQube as a Windows service:

- Run C:\SonarQube\Main\bin\windows-x86-64\InstallNTService.bat (you may have to shift right-click and Run as administrator) to install the service.

- Run services.msc and find the SonarQube service. Open its Properties and from the Log On tab change the service to log on as the ALM\SONARQUBE domain account.

- Again test all is working as expected by browsing to http://localhost:9000.

Configure for C#

With a working SonarQube instance the next piece of the jigsaw is to enable it to work with C#:

- Head over to the C# plugin page and download and unblock the latest sonar-csharp-plugin-X.Y.jar.

- Copy the sonar-csharp-plugin-X.Y.jar to C:\SonarQube\Main\extensions\plugins and restart the SonarQube service.

- Log in to the SonarQube portal (http://localhost:9000 or http://ALMSONARQUBE:9000 if on a remote machine) as Administrator -- the default credentials are admin and admin.

- Navigate to Settings > System > Update Center and verify that the C# plugin is installed.

Configure the SonarQube Server as a Build Agent

In order to integrate with TFS, a couple of SonarQube components that we haven't installed yet need access to a TFS build agent. The approach I've taken here is to have the build agent running on the SonarQube server itself. This keeps everything together and ensures that build agents which might be servicing check-ins are not bogged down with other tasks. From ALMSONARQUBE:

- Run Team Foundation Server Setup (typically by mounting the ISO and running tfs_server.exe) and perform the install.

- At the Team Foundation Server Configuration Center dialog choose Configure Team Foundation Build Service > Start Wizard.

- Use the usual dialogs to connect to the appropriate Team Project Collection and then at the Build Services tab choose the Scale out build services option to add more build agents to the existing build controller on the TFS administration server.

- In the Settings tab supply the details of the domain service account that should be used to run the build agents.

- Install Visual Studio 2013.4 as it's the easiest way to get all the required bits on the build server.

- From within Visual Studio navigate to Tools > Extensions and Updates and then, from the Updates tab, install the Microsoft SQL Server Update for database tooling.

- Update nuget.exe by opening an Administrative command prompt at C:\Program Files\Microsoft Team Foundation Server 12.0\Tools and running nuget.exe update -self.

- Finally, clone an existing Contoso University build definition that is based on the TfvcTemplate.12.xaml template, or create and configure a new build definition for Contoso University. I called mine ContosoUniversity_SonarQube. Queue a new build based on this build definition and make sure that the build is successful. You'll want to fix any glitches at this stage before proceeding.

Install the SonarQube Runner Component

The SonarQube Runner is the recommended default launcher for analysing a project with SonarQube. Install it on ALMSONARQUBE as follows:

- Create a Runner folder in C:\SonarQube.

- Download and unblock the latest version of sonar-runner-dist-X.Y.zip from the downloads page.

- Unzip the contents of sonar-runner-dist-X.Y.zip to C:\SonarQube\Runner so that the bin, conf and lib folders are in the root.

- Edit C:\SonarQube\Runner\conf\sonar-runner.properties by uncommenting and amending as required the following values:

- sonar.host.url=http://ALMSONARQUBE:9000

- sonar.jdbc.url=jdbc:jtds:sqlserver://ALMTFSADMIN/SonarQube;SelectMethod=Cursor

- sonar.jdbc.username=SonarQube

- sonar.jdbc.password=$PasswordForSonarQube$

- Create a new system variable called SONAR_RUNNER_HOME with the value C:\SonarQube\Runner.

- Amend the Path system variable adding in C:\SonarQube\Runner\bin.

- Restart the build service -- the Team Foundation Server Administration Console is just one place you can do this.
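Because the build service only picks up environment variable changes when it restarts, it's worth confirming the values from a fresh process before queuing a build. The Python sketch below checks SONAR_RUNNER_HOME and PATH against the locations used in this post. `check_runner_env` is a hypothetical helper; it compares Windows paths case-insensitively and ignores trailing backslashes, which is an allowance for how you may have typed the values rather than a SonarQube requirement.

```python
def check_runner_env(env: dict[str, str], runner_home: str = r"C:\SonarQube\Runner") -> list[str]:
    """Return a list of problems with SONAR_RUNNER_HOME and PATH; empty means OK."""

    def norm(path: str) -> str:
        # Windows paths are case-insensitive; ignore surrounding spaces and trailing backslashes.
        return path.strip().rstrip("\\").lower()

    problems = []
    home = env.get("SONAR_RUNNER_HOME")
    if home is None:
        problems.append("SONAR_RUNNER_HOME is not set")
    elif norm(home) != norm(runner_home):
        problems.append(f"SONAR_RUNNER_HOME is {home!r}, expected {runner_home!r}")

    # PATH entries are separated by ';' on Windows.
    bin_dir = norm(runner_home) + "\\bin"
    if bin_dir not in (norm(entry) for entry in env.get("PATH", "").split(";")):
        problems.append(f"{runner_home}\\bin is not on PATH")
    return problems
```

Run it from a newly opened command prompt as `check_runner_env(dict(os.environ))` (with `import os`); an empty list means both variables look right.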

Integration with Team Build

In order to call the SonarQube Runner from a TFS build definition, a component called SonarQube.MSBuild.Runner has been developed. Install it on ALMSONARQUBE as follows:

- Create an MSBuild folder in C:\SonarQube.

- Download and unblock the latest version of SonarQube.MSBuild.Runner-X.Y.zip from the C# downloads page.

- Unzip the contents of SonarQube.MSBuild.Runner-X.Y.zip to C:\SonarQube\MSBuild so that the files are in the root.

- Copy SonarQube.Integration.ImportBefore.targets to C:\Program Files (x86)\MSBuild\12.0\Microsoft.Common.Targets\ImportBefore. (This folder structure may have been created as part of the Visual Studio installation. If not you will need to create it manually.)

- The build definition cloned/created earlier (ContosoUniversity_SonarQube) should be amended as follows:

- Process > 2. Build > 5. Advanced > Pre-build script arguments = /key:ContosoUniversity /name:ContosoUniversity /version:1.0

- Process > 2. Build > 5. Advanced > Pre-build script path = C:\SonarQube\MSBuild\SonarQube.MSBuild.Runner.exe

- Process > 3. Test > 2. Advanced > Post-test script path = C:\SonarQube\MSBuild\SonarQube.MSBuild.Runner.exe

- Configure the build definition for unit test results as per this blog post. Note, though, that the Test assembly file specification should be set to **\*unittest*.dll;**\*unittest*.appx to avoid the automated web tests being classed as unit tests.
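The reason for the narrower file specification is easiest to see by trying a few assembly names against it. The following Python sketch approximates only the file-name part of the pattern using `fnmatch`, with case-insensitive matching; it does not reproduce how TFS build actually evaluates the specification (including the `**\` recursive folder part), and the assembly names in the usage note are hypothetical.

```python
from fnmatch import fnmatchcase

# File-name parts of the specification **\*unittest*.dll;**\*unittest*.appx
UNIT_TEST_PATTERNS = ("*unittest*.dll", "*unittest*.appx")


def is_unit_test_assembly(file_name: str, patterns: tuple[str, ...] = UNIT_TEST_PATTERNS) -> bool:
    """Return True if file_name matches any of the patterns, ignoring case."""
    name = file_name.lower()
    return any(fnmatchcase(name, pattern.lower()) for pattern in patterns)
```

With these patterns a name like ContosoUniversity.Web.UnitTests.dll matches and ContosoUniversity.Web.AutomatedTests.dll does not. With a looser pattern such as `*test*.dll` both would match, which is how the automated web tests would end up being counted as unit tests.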

Show Time

With all the configuration complete it's time to queue a new build. If all is well you should see that the build report contains a SonarQube Analysis Summary section.

Clicking on the Analysis results link in the build report should take you to the dashboard for the newly created ContosoUniversity project in SonarQube.

This project was created courtesy of the Pre-build script arguments in the build definition (/key:ContosoUniversity /name:ContosoUniversity /version:1.0). If for some reason you prefer to create the project yourself, the instructions are here. Do note that the dashboard is reporting 100% unit test coverage only because my Contoso University sample application uses quick and dirty unit tests for demo purposes.

And Finally...

Between the ALM Rangers guide and the installation walkthrough above, I hope you will find getting started with SonarQube and TFS reasonably straightforward. Do be aware that I found the ALM Rangers' guide to be a little confusing in places. There is the issue of integrated security with SQL Server that I never managed to crack, and then there is a strange reference on page 22 to sonar-runner.properties not being needed after integrating with Team Build, which had me scratching my head: how else would the components know how to connect to the SonarQube portal and database? It's early days though, and I'm sure the documentation will improve and mature with time.

Performing the installation is just the start of the journey of course. There is a lot to explore, so do take time to work through the resources at sonarqube.org.

Cheers -- Graham

Continuous Delivery with TFS: Enable Test Impact Analysis

Test Impact Analysis (TIA) is a feature that first appeared with Visual Studio / Microsoft Test Manager 2010 and provides the ability to recommend tests that should be re-run in response to changes that have been made at the code level. It's a very useful feature but it does need some configuration before it can be used. In this post in my series on continuous delivery with TFS we look at the steps needed to enable TIA in our development pipeline.

Setting the Scene

The scenario I'm working with is where a new nightly build of the sample ASP.NET application has been deployed to the DAT stage and all of the automated Selenium web tests have passed. This now leaves the build ready to deploy into the DQA stage so that any manual tests (including manual tests that have been automated to run from MTM) can be run from a browser on a client workstation. With TIA configured there are then at least two places to check for any tests that are recommended for running again.

Whilst working through the configuration for TIA I discovered that TIA doesn't seem to work in a multi-tenant web server, which is what I've set up for this blog post series to keep the number of VMs to a minimum. More correctly, I suspect that TIA doesn't work where there is a separate application pool for each website in a multi-tenant web server. I haven't investigated this thoroughly but it's something to bear in mind if you are trying to get TIA working and something I'll address in my future blog post series on continuous delivery with TFS 2015. The MSDN guidance for setting up TIA is here but it's slightly at odds with the latest version of MTM and in any case doesn't have all the details.

Create a Lab Center Environment and a Test Settings Configuration

As good a starting point as any for TIA configuration is to create a new environment in the MTM Lab Center. I covered this here so I won't go through all the steps again, however the environment needs to contain the web server that hosts the DQA stage's web site and it should be configured for the Web Server role.

Now move over to Test Settings and create a new entry -- I called mine Manual Test Run. Choose Manual for the What type of tests do you want to run? question and then in the Roles page select Web Server to join the Local role which is pre-selected and mandatory. In the Data and Diagnostics page the Local role needs to be configured for ASP.NET Client Proxy for IntelliTrace and Test Impact as a minimum and the Web Server role needs to be configured for Test Impact as a minimum. Additionally, after selecting Test Impact click on Configure at the far right. In the dialog's Advanced tab ensure Collect data from ASP.NET applications running on Internet Information Services is checked.

Configure the Test Plan

Either use an existing test plan or create a new one and in Testing Center > Plan > Contents add a new suite called Instructor. Create a new Test Case in Instructor called Can Navigate and then add the following steps:

- Launch IE

- Type URL and hit enter

- Click on Instructors

Now from Testing Center > Test > Run Tests run the Can Navigate test.

The aim of running the test this first time is to record each step so the whole test can be replayed on future runs. This sort of automation isn't to be confused with deep automation using tools such as Selenium or CodedUI, and is instead more akin to recording macros in Microsoft Office applications. Nevertheless, the technique is very powerful due to the repeatability it offers and is also a big time saver. The process of recording the steps is a little fiddly and I recommend you follow the MSDN documentation here. The main point to remember is to mark each step as passed after successful completion so that MTM correctly associates the action with the step. Hopefully the test steps are obvious, the aim being simply to display the list of instructors.

The final configuration step for the test plan is to configure Run Settings from Testing Center > Plan:

- Manual runs > Test settings = Manual Test Run (created above)

- Manual runs > Test environment = ALMWEB01 (created above, your environment name may differ)

- Builds > Filter for builds = ContosoUniversity_Main_Nightly (Ready for Deployment) (or whatever build you are using)

- Builds > Build in use = Latest build you have marked with the Build Quality of Ready for Deployment. (Build quality is arbitrary -- just needs to be consistent.)

Web Server Configuration

In order for TIA data to be collected on the web server there are several configuration steps to be completed:

- When environments are created by MTM Lab Center they are configured to use the Network Service account. We need to use the dedicated ALM\TFSTEST domain account (or whatever you have called yours) so on the web server run the Test Agent Configuration Tool and make the change. Additionally the TFSTEST account needs to be in the Local Administrators group on the web server.

- The domain account (ALM\CU-DQA) that is used as the identity for the application pool (CU-DQA) for the DQA website needs to have a local profile. You can either log on to the server as ALM\CU-DQA or use the runas /user:domain\name /profile cmd.exe command, supplying the appropriate credentials.

- The CU-DQA application pool needs to have the Load User Profile property set to True.

Web Client Configuration

The web client machine (I'm using Windows 8.1) needs to be running MTM and also has to have the Microsoft Test Agent installed. This should be configured with the ALM\TFSTEST account (which should be in the Local Administrators group) and be registered to the test controller.

Putting it All Together

With the configuration out of the way it's now time to generate TIA data. The Can Navigate test needs one successful run on the web client against the currently selected build in order to generate a baseline. As noted above it seems that TIA doesn't play nicely with multiple application pools and I found I needed to stop the CU-DAT and CU-PRD application pools before running the test.

- In MTM navigate to Testing Center > Test > Run Tests and run Can Navigate as earlier.

- The Test Runner will fire up and you should click Start Test.

- The Test Runner will display the steps of the test and the VCR-style controls. From the Play dropdown choose Play all.

- When the test has successfully completed, use the Mark test case result dropdown to mark the test as a Pass.

- A dialog will pop up advising that impact data is being collected and when it closes you should Save and Close the test.

Back at Testing Center > Test > Run Tests, check that TIA data was generated by selecting Can Navigate and clicking View results. In the attachments section you should see a file ending in testimpact.xml.

We now need to create a change to the method that displays the list of instructors. Typically this change will arise as part of fixing a bug; however, it will be sufficient here to fake the change in order to get TIA to work. To achieve this, open up the Contoso University demo app and navigate to ContosoUniversity.Web > Controllers > InstructorController > Index. Make any non-breaking change -- I changed the OrderBy of the query that returns the instructors.

Check the code in to version control and then start a new build. If the build is successful you can confirm that Can Navigate is now flagged as an impacted test. Firstly, you should see this in the build report, viewed either from Visual Studio or Team Web Access.

(Whilst you are examining the build report, mark the Build Quality as Ready for Deployment, but note that you would typically do this after confirming a successful DAT stage.) Secondly, in MTM navigate to Testing Center > Track > Recommended Tests. Change the Build in use to the build that has just passed and then change Previous build to compare to the build that was in use at the time of creating the baseline. A dialog should pop up advising that there may be tests that need to be re-run. After dismissing the dialog, Can Navigate should be listed under Recommended tests.

Test Case Closed

As is often the case with continuous delivery pipeline work it seems like there is a great deal of configuration required to get a feature working and TIA is no exception. One valuable lesson is that whilst a multi-tenant web application configuration certainly saves on the number of VMs required for a demo environment it does cause problems and should almost certainly be avoided for an on-premises installation. I'll definitely be using separate web servers when I refresh my demo setup for TFS 2015. And when Windows Nano Server becomes available we won't be thinking twice about trying to save on the number of running VMs. Exciting times ahead...

Cheers -- Graham

Continuous Delivery with TFS: Configure Application Insights

If you get to the stage where you are deploying your application on a very frequent basis and you are relying on automated tests for the bulk of your quality assurance then a mechanism to alert you when things go wrong becomes crucial. You should have something in place anyway of course but in practice I suspect that application monitoring is either frequently overlooked or remains stubbornly on the to-do list.

A successful continuous delivery pipeline shouldn't rely on the telephone or email as the alerting system, and in this post in my blog series on implementing continuous delivery with TFS we look at how to integrate relevant parts of Microsoft's Application Insights (AI) tooling into the pipeline. If you need to get up to speed with its capabilities I have a Getting Started blog post here. As a quick refresher, AI is a suite of components that integrate with your application and servers and send telemetry to the Azure Portal. As a bonus, not only do you get details of diagnostic issues but also rich analytics on how your application is being used.

Big Picture

AI isn't just one component and in fact there are at least three main ways in which AI can be configured to provide diagnostic and analytic information:

- Adding the Application Insights SDK to your application.

- Installing Status Monitor on an IIS server.

- Creating Web Tests that monitor the availability of an HTTP endpoint available on the public Internet.

One key point to appreciate with AI and continuous delivery pipelines is that unless you do something about it, AI will put the data it collects from the different stages of your pipeline in one 'bucket' and you won't easily be able to differentiate what came from where. Happily there is a way to address this, as we'll see below. Before starting to configure AI there are some common preparatory steps that need to be addressed, so let's start with those.

Groundwork

If you have been following along with this series of blog posts you will be aware that so far we have only created DAT and DQA stages of the pipeline. Although not strictly necessary, I created a PRD stage of the pipeline to represent production: if nothing else it's handy for demonstrations where your audience may expect to see the pipeline endpoint. I won't detail all the configuration steps here as they are all covered by previous blog posts; the whole exercise only took a few minutes. As things stand none of these stages exposes our sample web application to the public Internet; however, this is necessary for the creation of Web Tests. We can fix this in the Azure portal by adding an HTTP endpoint to the VM that runs IIS.

Our sample application is now available using a URL that begins with the cloud service name and includes the website name, for example http://mycloudservice.cloudapp.net/mywebapp. Be aware that this technique probably falls foul of all manner of security best practices however given that my VMs are only on for a few hours each week and it's a pure demo environment it's one I'm happy to live with.

The second item of groundwork is to create the containers that will hold the AI data for each stage of the pipeline. You will need to use the new Azure portal for this at https://portal.azure.com. First of all, a disclaimer: there are several techniques at our disposal for segregating AI data, as discussed in this blog post by Victor Mushkatin, and the comments on that post are worth reading as well since they contain some strong opinions. I tried the tagging method but couldn't get it to work properly, and as Victor says in the post this feature is at an early stage of development. In his post Victor creates a new Azure Resource for each pipeline stage; however, that seemed overly complicated for a demo environment, so instead I opted to create multiple Application Insights resources in one Azure resource group.

As an aside, resource groups are fairly new to Azure and for any new Azure deployment they should be carefully considered as part of the planning process. For existing deployments you will find that your cloud service is listed as a resource group (containing your VMs), and I chose to use this as the group to contain the Application Insights resources. Creating new AI resources is very straightforward: start with the New button, choose Developer Services > Application Insights, provide a name and then use the arrow selectors to choose Application Type and Resource Group.

I created the following Application Insights resources, which represent the stages of my pipeline: CU-DEV, CU-DAT, CU-DQA and CU-PRD. What differentiates these resources is their instrumentation keys (often abbreviated to ikey). You'll need to retrieve the ikey for each resource, and the way to do that in the new portal is via Browse > Filter By > Application Insights > $ResourceName$ > Settings > Properties, where you will see the Instrumentation Key selector.
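With four stages it's easy to paste the same ikey twice, and the symptom (telemetry from two stages landing in one resource) only shows up later in the portal. A quick sanity check is sketched below in Python. `validate_ikeys` is a hypothetical helper, and it assumes ikeys are GUIDs, which is how they appear in the portal and in the Web.config example later in this post.

```python
import uuid


def validate_ikeys(ikeys: dict[str, str]) -> dict[str, str]:
    """Check that every stage has a well-formed, distinct instrumentation key.

    Returns the keys in canonical lower-case GUID form; raises ValueError otherwise.
    """
    canonical = {}
    for stage, key in ikeys.items():
        try:
            # uuid.UUID accepts upper or lower case and rejects strings that aren't GUIDs.
            canonical[stage] = str(uuid.UUID(key.strip()))
        except ValueError:
            raise ValueError(f"{stage}: {key!r} is not a valid GUID") from None

    owner = {}  # ikey -> first stage that used it
    for stage, key in canonical.items():
        if key in owner:
            raise ValueError(f"{stage} and {owner[key]} share the same ikey")
        owner[key] = stage
    return canonical
```

Pass it a dictionary of stage name to ikey as copied from each resource's Properties; it returns the keys in canonical form or raises ValueError naming the offending stage.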

Add the Application Insights SDK to ContosoUniversity

We can now turn our attention to adding the Application Insights SDK to our Contoso University web application:

- Right-click your web project (ContosoUniversity.Web) within your Visual Studio solution and choose Add Application Insights Telemetry.

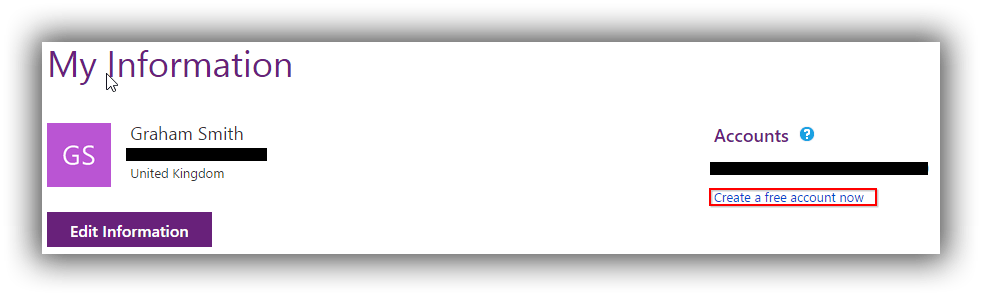

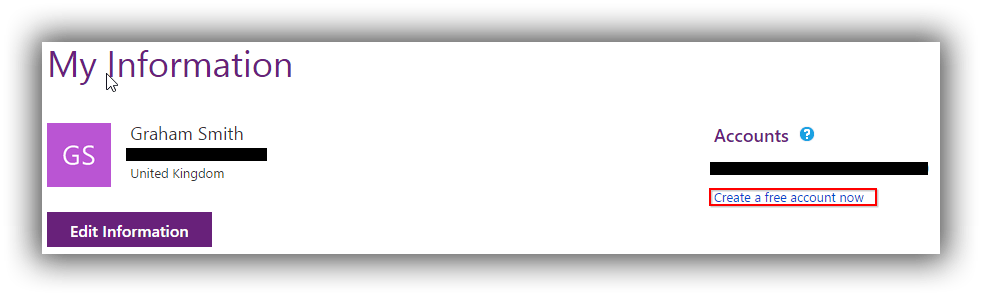

- The Add Application Insights to Project dialog opens and invites you to sign in to Azure.

- The first few times I tried to connect to Azure I got errors about not being able to find an endpoint, but persistence paid off. I eventually arrived at a dialog that allowed me to choose my MSDN subscription via the Use different account link.

- Having already created my AI resources, I used Configure settings to choose the CU-DEV resource.

- Back in the Add Application Insights to Project dialog click on the Add Application Insights to Project link to have Visual Studio perform all the necessary configuration.

At this stage we can run the application and click around to generate telemetry. If you are in Debug mode you can see this in the Output window. After a minute or two you should also see the telemetry start to appear in the Azure portal (Browse > Filter By > Application Insights > CU-DEV).

Configure AI in Contoso University for Pipeline Stages

As things stand, deploying Contoso University to other stages of the pipeline will cause telemetry for that stage to be added to the CU-DEV AI resource. To remedy this, carry out the following steps:

- Add an iKey setting to the appSettings section of Web.config:

    <appSettings>
      <!-- Other settings here -->
      <add key="iKey" value="8fc11978-dd5b-7b87-addc-965329534108"/>
    </appSettings>

- Add a transform to Web.Release.config that replaces the value with a token (__IKEY__) that can be used by Release Management:

    <appSettings>
      <!-- The xdt prefix is declared on <configuration> in the default Web.Release.config -->
      <add key="iKey" value="__IKEY__" xdt:Transform="SetAttributes" xdt:Locator="Match(key)"/>
    </appSettings>

- Add the following code to Application_Start in Global.asax.cs:

    // Use the stage-specific instrumentation key from Web.config for server-side telemetry
    Microsoft.ApplicationInsights.Extensibility.TelemetryConfiguration.Active.InstrumentationKey =
        System.Web.Configuration.WebConfigurationManager.AppSettings["iKey"];

- As part of the AI installation, Views\Shared\_Layout.cshtml is altered with some JavaScript that adds the iKey to each page. This key is hard-coded, so the instrumentationKey line in the JavaScript needs altering as follows to make it dynamic:

    instrumentationKey: "@Microsoft.ApplicationInsights.Extensibility.TelemetryConfiguration.Active.InstrumentationKey"

- Remove or comment out the InstrumentationKey section in ApplicationInsights.config.

- In the Release Management client at Configure Apps > Components edit the ContosoUniversity\Deploy Web Site component by adding an IKEY variable to Configuration Variables.

- Still in the Release Management client open the Contoso University\DAT>DQA>PRD release template from Configure Apps > Agent-based Release Templates and edit each stage supplying the iKey value for that stage (see above for how to get this) to the newly added IKEY configuration variable.
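Release Management takes care of swapping __IKEY__ for the stage's configuration variable at deployment time. If you want to check locally what a transformed Web.config will look like for a given stage, the same token substitution can be mimicked with a few lines of Python. This is a sketch of the idea only, not how Release Management is implemented; the `replace_tokens` name and the rule that token names consist of upper-case letters, digits and underscores are my assumptions.

```python
import re

# Tokens look like __IKEY__: upper-case letters, digits or underscores between double underscores.
_TOKEN = re.compile(r"__([A-Z0-9_]+?)__")


def replace_tokens(text: str, variables: dict[str, str]) -> str:
    """Replace every __NAME__ token with variables["NAME"]; unknown tokens raise KeyError."""

    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"No value supplied for token __{name}__")
        return variables[name]

    return _TOKEN.sub(substitute, text)
```

Failing loudly on an unknown token is a deliberate choice: a Web.config that still contains __IKEY__ after deployment would otherwise go unnoticed until telemetry failed to appear.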

After completing these steps you should be able to deploy your application to each stage of the pipeline and see that the Web.config of each stage has the correct iKey. Spinning up the website for that stage and clicking around in it should cause telemetry to be sent to the respective AI resource.
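To check the result on each stage without opening files by hand, the iKey can be read back out of a deployed Web.config. The Python sketch below uses the standard library's ElementTree. `read_app_setting` is a hypothetical helper, and it assumes the usual Web.config layout with appSettings directly beneath an un-namespaced configuration element.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Union


def read_app_setting(config_xml: Union[str, bytes], key: str) -> Optional[str]:
    """Return the value of <add key="..."> under <appSettings>, or None if absent."""
    root = ET.fromstring(config_xml)  # root is the <configuration> element
    for add in root.findall("./appSettings/add"):
        if add.get("key") == key:
            return add.get("value")
    return None
```

For example, `read_app_setting(open(path_to_web_config, "rb").read(), "iKey")` returns the key for that stage (the path depends on where your IIS sites live), which you can compare with the value shown in the portal.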

Install Status Monitor on the IIS server

The procedure is quite straightforward as follows:

- On your IIS server (ALMWEB01 if you are following the blog series) download and run the Status Monitor installation package from here.

- With the installation complete you'll need to sign in to your Microsoft Account, after which you'll be presented with a configuration panel where the CU-DAT, CU-DQA and CU-PRD websites should have been discovered. The panel lets you specify a separate AI resource for each website, after which you'll need to restart IIS.

- In order to ensure that the domain accounts that the websites are running under have sufficient permissions to collect data make sure that they have been added to the Performance Monitor Users Windows local group.

With this configuration complete you should click around in the websites to confirm that telemetry is being sent to the Azure portal.

Creating Web Tests to monitor HTTP Availability

The configuration for Web Tests takes place in the new Azure portal at https://portal.azure.com. There are two types of test -- URL ping and a more involved Multi-step test. I'm just describing the former here as follows:

- In the new portal navigate to the AI resource you want to create tests for and choose the Availability tile:

- This opens the Web Tests pane where you choose Add web test:

- In the Create test pane supply a name and a URL and then use the arrow on Test Locations to choose locations to test from:

After clicking Create you should start to see data being generated within a few seconds.

In Conclusion

AI is clearly a very sophisticated solution for providing rich telemetry about your application and the web server hosting it, and I'm excited about the possibilities it offers. I did encounter a few hurdles in getting it to work though. Initial connection to the Azure portal when trying to integrate the SDK with Contoso University was the first problem, and this caused quite a bit of messing around as each failed installation had to be undone. I then found that with AI added to Contoso University the build on my TFS server failed every time. I'm using automatic package restore and I could clearly see what was happening: every AI NuGet package was being restored correctly with the exception of Microsoft.ApplicationInsights, and this was quite rightly causing the build to fail. Locally on my development machine the package restore worked flawlessly. The answer turned out to be an outdated nuget.exe on my build server. The fix is to open an administrative command prompt at C:\Program Files\Microsoft Team Foundation Server 12.0\Tools and run nuget.exe update -self. Instant fix! This isn't AI's fault of course, although it is a mystery why one of the AI NuGet packages brought this problem to light.

Cheers -- Graham

Getting Started with Application Insights

If the latest release of your application has a problem, chances are you would prefer to know before your users flood your inbox or start complaining on social media channels. Additionally it is probably a good idea to monitor what your users get up to in your application to help you prioritise future development activities. And so to the world of diagnostics and analytics software. There are offerings from several vendors to consider in this area but as good a place to start as any is Microsoft's Application Insights. Here is a list of resources to help you understand what it can do for you:

At the time of writing this post Application Insights is in public preview -- see here for details. Do bear in mind that it's a chargeable service with full pricing due to take effect in June 2015.

Cheers -- Graham

Continuous Delivery with VSO: Executing Automated Web Tests with Microsoft Test Manager

In this fourth post in my series on continuous delivery with VSO we take a look at executing automated web tests with Microsoft Test Manager. There are quite a few moving parts involved in getting all this working so it's worth me explaining the overall aim before diving in with the specifics.

Overview

The tests we want to run are automated web tests written using the Selenium framework. I first wrote these tests for my Continuous Delivery with TFS blog post series and you can read about how to create the tests here and how to run them using MTM and TFS here. The goal in this post is to run these tests using MTM and VSO, triggered from RM as part of the DAT stage of the pipeline. The tests are run from a client workstation that is configured with MTM (a requirement at the time of writing) and the Microsoft Test Agent. I've used Selenium's Firefox driver in the test code so Firefox is also required on the client machine.

In terms of what actually happens, RM first copies the complete build over to the client workstation and then executes a PowerShell script that runs TCM.exe, a command-line utility that lets you run tests that are part of a test plan. Precisely what happens next is under-the-bonnet stuff but, roughly speaking, the test controller is informed that there is work to be done and in turn informs the test agent on the client machine that it needs to run tests. The test agent knows from the test plan which tests to run and in which DLL they live, and has access to the DLLs in the local copy of the build folder. Each test first starts Firefox, then connects to the web server running the deployed Contoso University and performs the automation specified in the test.

In many ways the process of getting all this to work with VSO rather than TFS is very similar, and because of that I don't go into every detail in this post and instead refer back to my TFS blog post.

Configure a Test Controller

VSO doesn't offer a test controller facility so you'll need to configure this yourself. If you have a test controller already in use then it's simplicity itself to repurpose it to point to your VSO account using the Browse button. If you are starting from scratch see here for the details but obviously ensure you connect to VSO rather than TFS. One other difference is that in order to get past some permissions problems I found it necessary to specify credentials for the lab service account -- I used the same as the service logon account.

Although I started off by repurposing an existing controller, because of permissions problems I ended up creating a dedicated build and test server as I wanted to start with a clean sheet. One thing I found was that the Visual Studio Test Controller service wouldn't automatically start after booting the OS from the Stopped (deallocated) state. The application error log was clearly reporting that the test controller wasn't able to connect to VSO. Manually starting the service was fine so presumably there was some sort of timing issue with other OS components not being ready.

Configure Microsoft Test Manager

If MTM isn't already installed on your development workstation then that's the first step. The second step is to connect MTM to your VSO account. I already had MTM installed and when I went to connect it to VSO the website was already listed. If that's not the case you can use the Add server link from the Connect to Your Team Project dialog. Navigating down to your Team Project (ContosoUniversity) enables the Connect now link which then takes you to a screen that allows you to choose between Testing Center and Lab Center. Choose the latter and then configure Lab Center as per the instructions here.

Continue following these instructions to configure Testing Center with a new test plan and test cases. Note that you need to have the Contoso University solution open in order to associate the actual tests with the test cases. You'll also need to ensure that when deployed the tests navigate to the correct URL. In the Contoso University demo application this is hard-coded and you need to make the change in Driver.cs located in the ContosoUniversity.Web.SeFramework project.

Configure a Web Client Test Machine

The client test machine needs to be created in the cloud service that was created for DAT and joined to the domain if you are using one. The required configuration is very similar to that required for TFS as described here, with the exception that the Release Management Deployment Agent isn't required and nor is the RMDEPLOYER account. Getting permissions correctly configured on this machine proved critical, and I eventually realised that the Windows account that the tests will run under needs to be configured so that MTM can successfully connect to VSO with the appropriate credentials. To be clear, these are not the test account credentials themselves but rather the normal credentials you use to connect to VSO. To configure all this, once the test account has been added to the Local Administrators group, MTM has been installed and the licence key applied, you will need to log on to Windows as the test account and start MTM. Connect to VSO and supply your VSO credentials in the same way as you did for your development workstation, and verify that you can navigate down to the Contoso University team project and open the test plan that was created in the previous section.

Initially I also battled with getting the test agent to register correctly with the test controller. I eventually uninstalled the test agent (which I had installed manually) and let the test controller perform the install followed by the configuration. Whether that was the real solution to the problem I don't know but it got things working for me.

Executing TCM.exe with PowerShell

As mentioned above, the code that starts the tests is a PowerShell script that executes TCM.exe. As a starting point I used the script that Microsoft developed for agent-based release templates but had to modify it to make it work with RM-VSO. In particular, changes were made to accommodate the way variables are passed in to the script (some implicit such as $TfsUrl or $TeamProject and some explicit such as $PlanId or $SuiteId) and to remove the optional build definition and build number parameters, which are not available to the vNext pipeline and caused errors when specified on the TCM.exe command line. The modified script (TcmExecvNext.ps1) and the original Microsoft script for comparison (TcmExec.ps1) are available in a zip here, and TcmExecvNext.ps1 should be copied to the Deploy folder in your source control root. One point to note is that for agent-based pipelines the TFS Collection URL is passed in as $TfsUrlWithCollection, whereas in vNext pipelines it is passed in as $TfsUrl.
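To make the moving parts a little more concrete, here's a minimal sketch of how a vNext-style script might assemble the `tcm run /create` command line from the variables RM passes in. It is not the contents of TcmExecvNext.ps1: `Get-TcmRunArguments` is a hypothetical helper, the parameter names simply mirror the variables used in this post, and `/build` and `/builddefinition` are deliberately left out for the reason given above.

```powershell
# Hypothetical helper: builds the argument list for "tcm run /create" from RM-supplied values.
# Each array element reaches TCM.exe as one argument, so values with spaces need no extra quoting.
function Get-TcmRunArguments {
    param(
        [Parameter(Mandatory = $true)][string]$TfsUrl,          # collection URL ($TfsUrl in vNext)
        [Parameter(Mandatory = $true)][string]$TeamProject,
        [Parameter(Mandatory = $true)][int]$PlanId,
        [Parameter(Mandatory = $true)][int]$SuiteId,
        [Parameter(Mandatory = $true)][int]$ConfigId,
        [Parameter(Mandatory = $true)][string]$BuildDirectory,
        [Parameter(Mandatory = $true)][string]$TestEnvironment,
        [string]$Title = 'Automated Web Tests'
    )

    # /build and /builddefinition are intentionally omitted: they aren't available to a vNext pipeline
    return @(
        'run', '/create',
        "/title:$Title",
        "/planid:$PlanId",
        "/suiteid:$SuiteId",
        "/configid:$ConfigId",
        "/collection:$TfsUrl",
        "/teamproject:$TeamProject",
        "/builddir:$BuildDirectory",
        "/testenvironment:$TestEnvironment"
    )
}
```

You would then invoke it with something like `& $tcmPath @tcmArgs`, where `$tcmPath` is a hypothetical variable pointing at TCM.exe (usually under the Common7\IDE folder of the Visual Studio installation, but check yours). Building an array rather than a single string avoids the quoting headaches that come with `Invoke-Expression`.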

Configure Release Management

Because we are using RM-VSO this part of the configuration is completely different from the instructions for RM-TFS. However, before starting any new configuration you'll need to make a change to the component we created in the previous post. This is because TCM.exe doesn't seem to like accepting the name of a build folder if it has a space in it. Some more fiddling with PowerShell might have found a solution, but I eventually changed the component's name from Drop Folder to DropFolder. Note that you'll need to visit the existing action and reselect the newly named component. Another issue which cropped up is that TCM.exe choked when the build directory parameter was supplied with a local file path. The answer was to create a share at C:\Windows\DtlDownloads\DropFolder and configure it with appropriate permissions.
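Both TCM.exe quirks (no spaces in the folder name, no local path) can be caught before a test run is attempted. Here's a minimal sketch that turns the local DtlDownloads path into the UNC form used for the BuildDirectory value in the configuration below. It assumes C:\Windows\DtlDownloads is shared under the name DtlDownloads, which is what a path like \\almclientwin81b\DtlDownloads\DropFolder implies; `ConvertTo-UncBuildDirectory` and its parameters are hypothetical.

```powershell
# Hypothetical helper: converts a path under a shared local folder into its UNC equivalent,
# rejecting spaces because TCM.exe didn't cope with them in the build folder name.
function ConvertTo-UncBuildDirectory {
    param(
        [Parameter(Mandatory = $true)][string]$LocalPath,     # e.g. C:\Windows\DtlDownloads\DropFolder
        [Parameter(Mandatory = $true)][string]$SharedRoot,    # the local folder that is shared
        [Parameter(Mandatory = $true)][string]$ShareName,     # the name it is shared under
        [Parameter(Mandatory = $true)][string]$ComputerName
    )

    if ($LocalPath.Contains(' ')) {
        throw "TCM.exe doesn't like spaces in the build folder: '$LocalPath'"
    }

    $root = $SharedRoot.TrimEnd('\')
    if (-not $LocalPath.StartsWith($root + '\', [System.StringComparison]::OrdinalIgnoreCase)) {
        throw "'$LocalPath' is not under the shared folder '$root'"
    }

    # Keep the part of the path below the shared root and hang it off \\computer\share
    $relative = $LocalPath.Substring($root.Length + 1)
    return "\\$ComputerName\$ShareName\$relative"
}
```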

The new configuration procedure for RM-VSO is as follows:

- From Configure Paths > Environments link the web client test machine to the DAT environment.

- From Configure Apps > vNext Release Templates open Contoso University\DAT>DQA.

- From the Toolbox drag a Deploy Using PS/DSC action to the deployment sequence to follow Deploy Web and Database and rename the action Run Automated Web Tests.

- Open up the properties of Run Automated Web Tests and set the Configuration Variables as follows:

  - ServerName = choose the name of the web client test machine from the dropdown.

  - UserName = this is the test domain account (ALM\TFSTEST in my case) that was configured for the web client test machine.

  - Password = password for the UserName

  - ComponentName = choose DropFolder from the dropdown.

  - PSScriptPath = Deploy\TcmExecvNext.ps1

  - SkipCaCheck = true

- Still in the properties of Run Automated Web Tests, set the Custom configuration as follows:

  - PlanId = 8 (or whatever your Plan ID is, as it is likely to be different)

  - SuiteId = 10 (or whatever your Suite ID is, as it is likely to be different)

  - ConfigId = 1 (or whatever your Configuration ID is, as it is likely to be different)

  - BuildDirectory = \\almclientwin81b\DtlDownloads\DropFolder (your machine name may be different)

  - TestEnvironment = ALMCLIENTWIN81B (yours may be different)

  - Title = Automated Web Tests

Bearing in mind that the Deploy Using PS/DSC action doesn't allow itself to be resized to show all configuration values the result should look something like this:

Start a Build

From Visual Studio manually queue a new build from your build definition. If everything is in place the build should succeed and you can open Microsoft Test Manager to check the results. Navigate to Testing Center > Test > Analyze Test Runs. You should see your test run listed and double-clicking it will hopefully show the happy sight of passing tests:

Testing Times

As I noted in the TFS version of this post, there are a lot of moving parts to get configured and working in order to be able to trigger tests to run from RM. Making all this work with VSO took many hours of working through all the details and battling with permissions problems and myriad other things that didn't work in the way I was expecting them to. Hopefully I've captured all the details you need to try this in your own environment. If you do encounter difficulties please post in the comments and I'll do what I can to help.

Cheers -- Graham

Continuous Delivery with VSO: Application Deployment with Release Management

In the previous post in my blog series on implementing continuous delivery with VSO we got as far as configuring Release Management with a release path. In this post we cover the application deployment stage where we'll create the items to actually deploy the Contoso University application. In order to achieve this we'll need to create a component which will orchestrate copying the build to a temporary location on target nodes and then we'll need to create PowerShell scripts to actually install the web files to their proper place on disk and run the DACPAC to deploy any database changes. Note that although RM supports PowerShell DSC I'm not using it here and instead I'm using plain PowerShell. Why is that? It's because for what we're doing here -- just deploying components -- it feels like an unnecessary complication. Just because you can doesn't mean you should...

Sort out Build

The first thing you are going to want to sort out is build. VSO comes with 60 minutes of bundled build which disappears in no time. You can pay for more by linking your VSO account to an Azure subscription that has billing activated or the alternative is to use your own build server. This second option turns out to be ridiculously easy and Anthony Borton has a great post on how to do this starting from scratch here. However if you already have a build server configured it's a moment's work to reconfigure it for VSO. From Team Foundation Server Administration Console choose the Build Configuration node and select the Properties of the build controller. Stop the service and then use the familiar dialogs to connect to your VSO URL. Configure a new controller and agent and that's it!

Deploying PowerShell Scripts

The next piece of the jigsaw is how to get the PowerShell scripts you will write to the nodes where they should run. Several possibilities present themselves amongst which is embedding the scripts in your Visual Studio projects. From a reusability perspective this doesn't feel quite right somehow and instead I've adopted and reproduced the technique described by Colin Dembovsky here with his kind permission. You can implement this as follows:

- Create folders called Build and Deploy in the root of your version control for ContosoUniversity and check them in.

- Create a PowerShell script in the Build folder called CopyDeployFiles.ps1 and add the following code:

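```powershell
Param(
    [string]$srcPath = $env:TF_BUILD_SOURCESDIRECTORY,
    [string]$binPath = $env:TF_BUILD_BINARIESDIRECTORY,
    [string]$pathToCopy
)

try {
    $sourcePath = "$srcPath\$pathToCopy"
    $targetPath = "$binPath\$pathToCopy"

    # Create the target folder under the binaries directory if it doesn't exist yet
    if (-not (Test-Path $targetPath)) {
        mkdir $targetPath | Out-Null
    }

    # Copy the folder and any subfolders so they end up in the drop
    xcopy /y /e $sourcePath $targetPath

    # xcopy is a native command and doesn't throw on failure, so check its exit code
    if ($LASTEXITCODE -ne 0) {
        throw "xcopy failed with exit code $LASTEXITCODE"
    }

    Write-Host "Done!"
}
catch {
    Write-Host $_
    exit 1
}
```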

- Check CopyDeployFiles.ps1 in to source control.

- Modify the process template of the build definition created in a previous post as follows:

2. Build > 5. Advanced > Post-build script arguments = -pathToCopy Deploy

2. Build > 5. Advanced > Post-build script path = Build/CopyDeployFiles.ps1

To explain, Post-build script path specifies that CopyDeployFiles.ps1 created above should be run and Post-build script arguments feeds in the -pathToCopy argument which is the Deploy folder we created above. The net effect of all this is that the Deploy folder and any contents gets created as part of the build.
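If you'd rather not depend on xcopy, the same copy can be done with PowerShell's own cmdlets. This is a minimal sketch rather than Colin's script: `Copy-DeployFolder` is a hypothetical name, and it takes the source and binaries directories as explicit parameters (in a TFS build they would come from TF_BUILD_SOURCESDIRECTORY and TF_BUILD_BINARIESDIRECTORY as above) so it can be tried outside a build.

```powershell
# Hypothetical xcopy-free version of the CopyDeployFiles.ps1 logic.
function Copy-DeployFolder {
    param(
        [Parameter(Mandatory = $true)][string]$SrcPath,     # normally $env:TF_BUILD_SOURCESDIRECTORY
        [Parameter(Mandatory = $true)][string]$BinPath,     # normally $env:TF_BUILD_BINARIESDIRECTORY
        [Parameter(Mandatory = $true)][string]$PathToCopy   # e.g. Deploy
    )

    $sourcePath = Join-Path $SrcPath $PathToCopy
    $targetPath = Join-Path $BinPath $PathToCopy

    if (-not (Test-Path $sourcePath -PathType Container)) {
        throw "Source folder not found: $sourcePath"
    }

    # Create the target folder (and any missing parents), then copy the contents including subfolders
    New-Item -ItemType Directory -Path $targetPath -Force | Out-Null
    Copy-Item -Path (Join-Path $sourcePath '*') -Destination $targetPath -Recurse -Force
}
```

Unlike xcopy, cmdlet failures surface as PowerShell errors, so a `try`/`catch` (with `-ErrorAction Stop` if you want non-terminating errors caught too) behaves the way you'd expect.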

Create a Component

In a multi-server world we'd create a component in RM from Configure Apps > Components for each server that we need to deploy to since a component is involved in ensuring that the build is copied to the target node. Each component would then be associated with an appropriately named PowerShell script to do the actual work of installing/copying/running tests or whatever is needed for that node. Because we are hosting IIS and SQL Server on the same machine we only actually need one component. We're getting ahead of ourselves a little but a side effect of this is that we will use only one PowerShell script for several tasks which is a bit ugly. (Okay, we could use two components but that would mean two build copy operations which feels equally ugly.)

With that noted, create a component called Drop Folder and add a backslash (\) to Source > Builds with application > Path to package. The net effect of this when the deployment has taken place is the existence of a folder called Drop Folder on the target node with the contents of the original drop folder copied over to it. As long as we don't need to create configuration variables for the component it can be reused in this basic form. It probably needs a better name though.

Create a vNext Release Template

Navigate to Configure Apps > vNext Release Templates and create a new template called Contoso University\DAT>DQA based on the Contoso University\DAT>DQA release path. You'll need to specify the build definition and check Can Trigger a Release from a Build. We now need to create the workflow on the DAT design surface as follows:

- Right-click the Components node of the Toolbox and Add the Drop Folder component.

- Expand the Actions node of the Toolbox and drag a Deploy Using PS/DSC action to the Deployment Sequence. Click the pen icon to rename to Deploy Web and Database.

- Double-click the action and set the Configuration Variables as follows:

  - ServerName = choose the appropriate server from the dropdown.

  - UserName = the name of an account that has permissions on the target node. I'm using the RMDEPLOYER domain account that was set up for Deployment Agents to use in agent-based deployments.

  - Password = password for the UserName

  - ComponentName = choose Drop Folder from the dropdown.

  - SkipCaCheck = true

- The Actions do not display very well so a complete screenshot is not possible, but it should look something like this (note SkipCaCheck isn't shown):

At this stage we can save the template and trigger a build. If everything is working you should be able to examine the target node and observe a folder called C:\Windows\DtlDownloads\Drop Folder that contains the build.

Deploy the Bits

With the build now on the target node, the next step is to actually get the web files in place and deploy the database. We'll do this from one PowerShell script called WebAndDatabase.ps1, which you should create in the Deploy folder created above. Every time you edit this and want it to run, do make sure you check it in to version control. To actually get it to run we need to edit the Deploy Web and Database action created above. The first step is to set Deploy\WebAndDatabase.ps1 as the value of the PSScriptPath configuration variable. We then need to add the following custom configuration variables by clicking on the green plus sign:

- destinationPath = C:\inetpub\wwwroot\CU-DAT

- websiteSourcePath = _PublishedWebsites\ContosoUniversity.Web

- dacpacName = ContosoUniversity.Database.dacpac

- databaseServer = ALMWEBDB01

- databaseName = CU-DAT

- loginOrUser = ALM\CU-DAT

The first section of the script will deploy the web files to C:\inetpub\wwwroot\CU-DAT on the target node, so create this folder if you haven't already. Obviously we could get PowerShell to do this but I'm keeping things simple. I'm using functions in WebAndDatabase.ps1 to keep things neat and tidy and to make debugging a bit easier if I want to only run one function.
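Each of the functions that follow starts by checking that the variables it depends on were supplied, but the error doesn't say which one is missing. Here's a minimal sketch of a shared check that names the culprits. `Assert-RequiredVariable` is a hypothetical helper; it assumes, as the functions in this post do, that the custom configuration variables arrive as ordinary variables visible to the script.

```powershell
# Hypothetical helper: throws if any of the named variables is missing or empty, listing them all.
function Assert-RequiredVariable {
    param([Parameter(Mandatory = $true)][string[]]$Name)

    $missing = @()
    foreach ($n in $Name) {
        # Get-Variable searches the caller's scopes; a variable that doesn't exist yields $null
        $value = Get-Variable -Name $n -ValueOnly -ErrorAction SilentlyContinue
        if ([string]::IsNullOrEmpty([string]$value)) {
            $missing += $n
        }
    }

    if ($missing.Count -gt 0) {
        throw "Required parameter(s) missing: $($missing -join ', ')"
    }
}
```

A function such as copy_web_files could then open with `Assert-RequiredVariable -Name destinationPath, websiteSourcePath, databaseServer, databaseName`. One caveat of the by-name lookup: a variable with the same name as one used inside the helper (for example `$value`) would be shadowed, so keep the helper's own names distinctive if that matters to you.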

The first function is as follows:

```powershell
function copy_web_files
{
    if ([string]::IsNullOrEmpty($destinationPath) -or [string]::IsNullOrEmpty($websiteSourcePath) -or [string]::IsNullOrEmpty($databaseServer) -or [string]::IsNullOrEmpty($databaseName))
    {
        $(throw "A required parameter is missing.")
    }

    Write-Verbose "#####################################################################" -Verbose
    Write-Verbose "Executing Copy Web Files with the following parameters:" -Verbose
    Write-Verbose "Destination Path: $destinationPath" -Verbose
    Write-Verbose "Website Source Path: $websiteSourcePath" -Verbose
    Write-Verbose "Database Server: $databaseServer" -Verbose
    Write-Verbose "Database Name: $databaseName" -Verbose

    # $ApplicationPath isn't defined in this script: RM supplies it, pointing at the copied build
    $sourcePath = "$ApplicationPath\$websiteSourcePath"

    # Clear out the current web files, then copy the new set over
    Remove-Item "$destinationPath\*" -recurse
    Write-Verbose "Deleted contents of $destinationPath" -Verbose
    xcopy /y /e $sourcePath $destinationPath
    Write-Verbose "Copied $sourcePath to $destinationPath" -Verbose

    # Replace the tokens in the copied Web.config only, leaving the originals for the next stage
    $webDotConfig = "$destinationPath\Web.config"
    (Get-Content $webDotConfig) | Foreach-Object {
        $_ -replace '__DATA_SOURCE__', $databaseServer `
           -replace '__INITIAL_CATALOG__', $databaseName
    } | Set-Content $webDotConfig
    Write-Verbose "Tokens in $webDotConfig were replaced" -Verbose
}

copy_web_files
```

The code clears out the current set of web files and then copies the new set over. The tokens in Web.config get changed in the copied set so the originals can be used for the DQA stage. Note how I'm using Write-Verbose statements with the -Verbose switch at the end. This causes the RM Deployment Log grid to display a View Log link in the Command Output column. Very handy for debugging purposes.

The second function deploys the DACPAC:

```powershell
function deploy_dacpac
{
    if ([string]::IsNullOrEmpty($dacpacName) -or [string]::IsNullOrEmpty($databaseServer) -or [string]::IsNullOrEmpty($databaseName))
    {
        $(throw "A required parameter is missing.")
    }

    Write-Verbose "#####################################################################" -Verbose
    Write-Verbose "Executing Deploy DACPAC with the following parameters:" -Verbose
    Write-Verbose "DACPAC Name: $dacpacName" -Verbose
    Write-Verbose "Database Server: $databaseServer" -Verbose
    Write-Verbose "Database Name: $databaseName" -Verbose

    $cmd = "& 'C:\Program Files (x86)\Microsoft SQL Server\120\DAC\bin\sqlpackage.exe' /a:Publish /sf:'$ApplicationPath'\'$dacpacName' /tcs:'server=$databaseServer; initial catalog=$databaseName'"
    Invoke-Expression $cmd | Write-Verbose -Verbose
}

deploy_dacpac
```

The code is simply building the command to run sqlpackage.exe -- pretty straightforward. Note that the script is hardcoded to SQL Server 2014 -- more on that below.
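Building a string and handing it to `Invoke-Expression` works, but the nested quoting is easy to get wrong. An alternative is to build the arguments as an array and use the call operator, which passes each element to sqlpackage.exe as a single argument. A minimal sketch, using the same `/a`, `/sf` and `/tcs` switches as deploy_dacpac; `Get-SqlPackagePublishArguments` is a hypothetical name.

```powershell
# Hypothetical helper: builds the sqlpackage.exe publish arguments used by deploy_dacpac as an array.
function Get-SqlPackagePublishArguments {
    param(
        [Parameter(Mandatory = $true)][string]$ApplicationPath,
        [Parameter(Mandatory = $true)][string]$DacpacName,
        [Parameter(Mandatory = $true)][string]$DatabaseServer,
        [Parameter(Mandatory = $true)][string]$DatabaseName
    )

    # Windows-style path, matching the rest of WebAndDatabase.ps1
    $sourceFile = "$ApplicationPath\$DacpacName"

    return @(
        '/a:Publish',
        "/sf:$sourceFile",
        "/tcs:server=$DatabaseServer; initial catalog=$DatabaseName"
    )
}
```

Inside deploy_dacpac the call would then look something like `& $sqlPackagePath @publishArgs | Write-Verbose -Verbose`, where `$sqlPackagePath` and `$publishArgs` are illustrative variable names. Spaces in the drop folder path or the connection string no longer need hand-placed single quotes.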

The final function deals with the Create login and database user.sql script that lives in the Scripts folder of the ContosoUniversity.Database project. This script ensures that the necessary SQL Server login and database user exist, and is tokenised so it can be used in different stages -- see this article for all the details.

```powershell
function run_create_login_and_database_user
{
    # $databaseServer is used by sqlcmd below, so it is checked along with the others
    if ([string]::IsNullOrEmpty($loginOrUser) -or [string]::IsNullOrEmpty($databaseServer) -or [string]::IsNullOrEmpty($databaseName))
    {
        $(throw "A required parameter is missing.")
    }

    Write-Verbose "#####################################################################" -Verbose
    Write-Verbose "Executing Run Create Login and Database User with the following parameters:" -Verbose
    Write-Verbose "Login or User: $loginOrUser" -Verbose
    Write-Verbose "Database Server: $databaseServer" -Verbose
    Write-Verbose "Database Name: $databaseName" -Verbose

    # Swap the tokens in the SQL script for the values passed in for this stage
    $scriptName = "$ApplicationPath\Scripts\Create login and database user.sql"
    (Get-Content $scriptName) | Foreach-Object {
        $_ -replace '__LOGIN_OR_USER__', $loginOrUser `
           -replace '__DB_NAME__', $databaseName
    } | Set-Content $scriptName
    Write-Verbose "Tokens in $scriptName were replaced" -Verbose

    # Run the detokenised script against the database server
    $cmd = "& 'sqlcmd' /S $databaseServer /i '$scriptName' "
    Invoke-Expression $cmd | Write-Verbose -Verbose
    Write-Verbose "$scriptName was executed against $databaseServer" -Verbose
}

run_create_login_and_database_user
```

The tokens in the SQL script are first swapped for passed-in values and then the code builds a command to run the script. Again, pretty straightforward.
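Both copy_web_files and run_create_login_and_database_user swap tokens in the same way, with the token names baked in. Here's a minimal sketch of a shared helper that takes the token-to-value map as a hashtable instead, keeping the `__TOKEN__` convention used above. `Update-TokenizedContent` is a hypothetical name, and it works on a whole string, so you'd read files with `Get-Content -Raw` before calling it.

```powershell
# Hypothetical helper: replaces each __KEY__ token in the text with its value from the map.
function Update-TokenizedContent {
    param(
        [Parameter(Mandatory = $true)][AllowEmptyString()][string]$Content,
        [Parameter(Mandatory = $true)][hashtable]$Tokens
    )

    foreach ($key in $Tokens.Keys) {
        # Escape the token for the regex, and any $ in the value, which -replace would otherwise interpret
        $pattern = [regex]::Escape("__$($key)__")
        $replacement = ([string]$Tokens[$key]).Replace('$', '$$')
        $Content = $Content -replace $pattern, $replacement
    }

    return $Content
}
```

With this in place the token names become data (for example `@{ DATA_SOURCE = $databaseServer; INITIAL_CATALOG = $databaseName }`) rather than code, which starts to address the reusability point below.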

Loose Ends

At this stage you should be able to trigger a build and have all of the components deploy. In order to fully test that everything is working you'll want to create and configure a web application in IIS -- this article has the details.

To achieve the stated aim of an initial pipeline with both a DAT and DQA stage, the final step is to actually configure all of the above for DQA. It's essentially a repeat of DAT so I'm not going to describe it here, but do note that you can copy and paste the Deployment Sequence.

One remaining aspect to cover is the subject of script reusability. With RM-TFS there is an out-of-the-box way to achieve reusability with tools and actions. This isn't available in RM-VSO and instead potential reusability comes via storing scripts outside of the Visual Studio solution. This needs some thought though since the all-in-one script used above (by necessity) only has limited reusability and in a non-demo environment you would want to consider splitting the script and co-ordinating everything from a master script. Some of this would happen anyway if the web and database servers were distinct machines but there is probably more that should be done. For example, tokens that are to be swapped-out are hard-coded in the script above which limits reusability. I've left it like that for readability but this certainly feels like the sort of thing that should be improved upon. In a similar vein the path to sqlpackage.exe is hard coded and thus tied to a specific version of SQL Server and probably needs addressing.
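On the hard-coded sqlpackage.exe path, one option is to look for the highest installed version rather than assuming 120 (SQL Server 2014). A minimal sketch, assuming the `<root>\Microsoft SQL Server\<version>\DAC\bin\sqlpackage.exe` layout seen in the path used in deploy_dacpac; `Find-SqlPackage` is a hypothetical name, and the search root is a parameter so you can point it at Program Files (x86) or wherever your tools live.

```powershell
# Hypothetical helper: returns the sqlpackage.exe under the highest numeric version folder, or $null.
function Find-SqlPackage {
    param([Parameter(Mandatory = $true)][string]$ProgramFilesRoot)   # e.g. C:\Program Files (x86)

    $sqlServerRoot = Join-Path $ProgramFilesRoot 'Microsoft SQL Server'
    if (-not (Test-Path $sqlServerRoot -PathType Container)) { return $null }

    # Version folders are numeric (110, 120, ...); sort numerically so 120 beats 90
    $versions = Get-ChildItem -Path $sqlServerRoot -Directory |
        Where-Object { $_.Name -match '^\d+$' } |
        Sort-Object { [int]$_.Name } -Descending

    foreach ($version in $versions) {
        $candidate = Join-Path (Join-Path (Join-Path $version.FullName 'DAC') 'bin') 'sqlpackage.exe'
        if (Test-Path $candidate -PathType Leaf) { return $candidate }
    }

    return $null
}
```

Picking the newest version is itself a policy decision: if you need a specific DacFx version for compatibility, pinning it explicitly (but in one configurable place) may be the safer choice.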

In the next post we'll look at executing automated web tests. Meantime if you have any thoughts on great ways to use PowerShell with RM-VSO please do share in the comments.

Cheers -- Graham

Blogging with WordPress

If you're the sort of IT professional who prefers to actively manage their career rather than just accept what comes along then chances are that deciding to blog could be one of the best career decisions you ever make. Technical blogging is a great focal point for all your learning efforts, a lasting way to give back to the community, a showcase for your talents to potential employers and a great way to make contact with other people in your field. Undecided? Seems most people initially feel that way. Try reading this, this and this.

If you do decide to make the leap one of the best sources of inspiration that I have found is John Sonmez's Free Blogging Course. It's packed full of tips on how to get started and keep going and since there is nothing to lose I wholeheartedly recommend signing up. This is just the beginning though, and it turns out that this is the start of many practical decisions you will need to make in order to turn out good quality blog posts that reach as wide an audience as possible. I thought I'd document my experience here to give anyone just starting out a flavour of what's involved.

Which Blogging Platform?

Once you have decided to blog, the first question is likely to be which blogging platform to go for. The top hits for a "best blogging platform" Google search invariably recommend WordPress, and since there seemed no point in ignoring the very advice I had sought, that's what I chose. There's more to it than that though, since there is a choice between WordPress.com and WordPress.org. The former allows you to host a blog for free at WordPress.com, however there are some restrictions on customisation and complications with domain names. WordPress.org, on the other hand, is a software package that anyone can host and is the fully customisable version. If you are an IT professional you are almost certainly going to want all the flexibility, and despite the modest costs involved, for me WordPress.org was the clear winner.

Hosting WordPress

Next up: where are you going to host WordPress? With super fast broadband and technical skills some people might choose to host it themselves at home, but on my rural 1.8 Mbps connection that wasn't an option. I started down the Microsoft Azure route and actually got WordPress up-and-running with my own private Azure account. Whilst I would have enjoyed the flexibility it would have given me, I decided that the cost was prohibitive as I wanted a PaaS solution, which means paying for the Azure Website and a hosted MySQL database. It might be cheaper now so don't discount this option if Azure appeals, however I found the costs of a web hosting company to be much more reasonable. There are lots of these and I chose one off the back of a computer magazine review. Obviously you need a company that hosts WordPress at a price you are happy to pay and which offers the level of support you need.

What's in a name?

If you are looking to build a brand and market yourself then your domain name will be pretty important. It could be an amusing twist on your view or niche in the technology world or perhaps your name if it's interesting enough (mine isn't). My core interest is in the technology and processes around deploying software so pleasereleaseme tickled my fancy although I know the reference doesn't make sense to every culture.

As if choosing a second-level domain name wasn't hard enough, you also need to choose the top-level domain. Of course to some extent your choice might be limited by what's available, what makes sense or how much you're willing to pay. I chose .net since I have a background as a Microsoft .NET developer. Go figure!

Getting Familiar

I'm assuming that most people reading this blog post are technically minded, so I'm not going to go through the process of getting your WordPress site and domain name up-and-running, and in any case it will vary according to who you choose as a host. It's worth noting though that some companies might do some unwanted low-level configuration to the 'template' used to create your WordPress site, and if you need to reverse any of that you may need to use an FTP tool such as FileZilla to assist with the file editing process. In my case I found I couldn't edit themes, and it turned out I needed to make a change to wp-config (which lives in the file system) to turn this on.

With your site now live I recommend taking some time to familiarise yourself with WordPress and go through all the out-of-the-box configuration settings before you start installing any plugins. In particular Settings > General and Settings > Permalinks are two areas to check before you start writing posts. In the former don't forget to set your Site Title and a catchy Tagline. Permalinks in particular is very important for ensuring search engine friendliness. See here for more details but the take home seems to be to use Post name.

One of the bigger decisions you'll need to make is which theme to go for (Appearance > Themes). There's oodles of choice but do choose carefully as your theme will say a lot about your blog. If you find a free one then great however there is a cottage industry in paid-for themes if you can't. I'm not at all a flashy type of person so my theme is one of muted tones. It's not perfect but for free who can argue? One day I will probably ask if the author can make some changes for appropriate remuneration. In the meantime I use Appearance > Edit CSS (see Jetpack below) to make a few tweaks to my site after the theme stylesheet has been processed.

Fun with Widgets

In addition to displaying your pages and posts WordPress can also display Widgets which appear to the right of your main content. I change widgets around every so often but as a minimum always have a Text widget with some details about me, widgets from Jetpack (see below) so people can follow me via Twitter, email and RSS, and also the Tag Cloud widget.

Planning Ahead

There's still a little more to do before you begin writing posts, but this is probably a good point to start planning how you will use posts, pages, categories and tags. At the risk of stating the obvious, posts are associated with a publish date whilst pages are not. Consequently pages are great for static content and posts for, er, posts. The purpose of categories and tags is perhaps slightly less obvious. The best explanation I have come across is that categories are akin to the table of contents in a book and tags are akin to the index. The way I put all this together is by having themes for my posts. Some themes are tightly coupled, for example my Continuous Delivery with TFS soup-to-nuts series of posts, while others are looser, for example my Getting Started series. I use categories to organise my themes, and I also use pages as index pages for each category. It's a bit of extra maintenance, but it's useful to be able to link back to them. Using tags then becomes straightforward: I have a different tag for each technology I write about, and posts will often have several tags.

Backing Up

Please do get a backup strategy in place before you sink too much effort into configuring your site and writing posts. It's probably not enough to rely on your web hosting provider's arrangements, and I thoroughly recommend implementing your own supplementary backup plan. There are plenty of plugins that will manage backup for you, some free and some paid. To cut a long research story short, I use BackUpWordPress, which is free if all you want to do is back up to your web space. You don't, of course: you want to copy your backups to an offsite location. For this I use their BackUpWordPress To Google Drive extension, which costs USD 24 per year. It does what it says on the tin, and there are other flavours. Please don't skimp on backup, and do get your backups to an offsite location!

Beating Spam

If you have comments turned on (and you probably should, to get feedback and make connections with people who are interested in your posts) you will get a gazillion spam comments. As far as I can see the Akismet plugin is the way to go here. Don't forget to clean out your spam regularly (Comments > Spam). Your instinct will probably be to go through the spam manually the first few times you clean it out, but Akismet is so good that I don't bother any more and just use the Empty Spam button.

Search Engine Optimisation

This is one of those topics that is huge and makes my head hurt. In short, it's all about trying to make sure your posts are ranked highly by the search engines when someone performs a search. For the long story I recommend reading some of the SEO guides that are out there; I found this guide by Yoast particularly useful. To help cope with the complexities there are several plugins that can manage SEO for you. I ended up choosing the free version of WordPress SEO by Yoast since the plugin and the guide complement each other. There is some initial one-time setup to perform, such as registering with the Google and Bing webmaster tools, verifying your site and submitting an XML sitemap, after which it's a case of making sure each post is as optimised for SEO as it can be. The plugin guides you through everything, and there is a paid-for version if you need more.

Tracking Visitors

To understand who is visiting your site you will want to sign up for a Google Analytics account. You then need to insert the tracking code into every page on your site, and as you would expect a plugin can do this for you. There are a few to choose from and I went for Google Analytics Dashboard for WP.

Install Jetpack

Jetpack is a monster pack of ‘stuff’ from WordPress.com that can help you with all sorts of things big and small. Some features, such as the Edit CSS feature mentioned above, are enabled as soon as you install Jetpack, while others become available when you link it to a WordPress.com account. There is far too much to cover here and it's a case of trawling through and working out what suits your needs. To give you a flavour, though:

- Enhanced Distribution -- shares published content with third party services such as search engines

- Extra Sidebar Widgets -- gives you extra sidebar widgets

- Monitor -- checks your site for downtime and emails you if there is a problem

- Photon -- loads your post images from WordPress.com's content delivery network

- Protect -- guards against brute force attacks

- Publicize -- allows you to connect your blog to popular social networking sites and automatically share new posts

- Shortcode Embeds -- allows you to embed media from other sites such as YouTube

- WP.me Shortlinks -- gives you a Get Shortlink button in the post editor

- WordPress.com Stats -- collects statistics about your site visitors similar to Google Analytics

These are just a few of the options I've enabled; there are many, many more. They could keep you busy for hours...

Image Matters

Although blogging is about words, a picture can apparently paint a thousand of them, and as a technical blogger you are undoubtedly going to want to include screenshots of applications in your posts. I spent quite some time researching this but ultimately went with one of Scott Hanselman's recommendations, WinSnap. It's a paid-for offering, but you can use it on as many machines as you need and it's very feature-rich. I do most of my work via remote desktop connections and nearly always use WinSnap from an instance installed on my host PC. I do have it installed on the main machine I remote to, but getting it to capture menu fly-outs and so on directly on the remote machine is a work in progress. Whichever tool you choose, please do try to take quality screenshots; Scott has a guide here. I frequently find that my mouse pointer is in the screenshot or that I've picked up some background at the edge when capturing part of a dialog. I always discard these and start again. Don't forget to protect any personal data, licence keys and the like.

Code Quality

If you are blogging about a technology that involves programming code you'll soon realise that the built-in WordPress feature for displaying code is lacking and that you need something better. As always there are several plugins that can come to the rescue (here is just one review). I tried a couple and decided that Crayon Syntax Highlighter was the one for me. Whichever plugin you choose, do take some time to understand all the options and experiment to ensure your readers get the best experience.

Writing Quality Posts

Your blog says a lot about you, and for that reason you probably want to pay close attention to the quality of your writing. I don't mean that you should get stressed over this and never publish anything; after all, one of your reasons for blogging might be to improve your writing skills. Rather, pay attention to the basics so that they are a core feature of every post, and then you'll have solid foundations to improve on. Here's a list of some of the things to consider:

- Try not to let spelling mistakes slip through. Browsers have spell checking and highlighting these days, so do use them.

- Proofread your posts before publishing, and after. I'm forever writing form when I mean from, and spell checkers don't catch this. WordPress has a preview feature and Jetpack has a Proofreading module, so why not try them out?

- Try to adopt a consistent formatting style that uses white space and headings. Watch out for any extra white space that might creep in between paragraphs. Use the Text pane of the editor to check the HTML if necessary.

- Watch out for extra spaces at the start of paragraphs (they creep in somehow) and also for double spaces.

- If you are unsure about a word, phrase or piece of grammar, Google it to find out how it should be used or how others have used it, but only trust a reputable source or a common consensus. If I have any nagging doubt I never assume I am right and always check. I've been surprised more than a few times to find out that what I thought was correct usage was wrong.

- Technical writing can be quite difficult because you need to refer to elements of an application as you describe how to do something, and you need to distinguish these from the other words in your sentences. I use bold to flag up the actions a reader needs to take if they are ‘following along at home’, and usually also at the first mention of a core technology component and the like. Have a look at some of my posts to see what I mean.

- In my first career as a research scientist my writing (for academic journals, for example) was strictly in the third person and quite formal. That doesn't work at all well for blogs, where you want to address the reader directly. For most ‘how-to’ blogs the second person is probably best, with a bit of first person thrown in on occasion, and that's the style I use. Have a look here for more explanation.

If you are serious about improving your written work there are plenty of books and web pages you can read. Many years ago, when I was an undergraduate at the University of Wales, Bangor, there was an amazing guide to writing called The Style and Presentation of Written Work by Agricultural and Forest Sciences lecturer Colin Price. I read it over and over again and it still stands me in good stead today. My paper copy has long since vanished but I was thrilled to find it available here. The focus is on academic writing, but it's packed full of useful tips for everyone and well worth reading.

Marketing your Blog

So, your blog is up-and-running and you are putting great effort into writing quality posts that you hope will be of use to others in your area. Initially you might be happy with the trickle of users finding your site, but then you'll write a post that takes over 10 hours of research and writing and you'll wish you had more traffic for your efforts.

Say hello to the mysterious world of marketing your blog. I was, and to some extent still am, uneasy about all this; however, there is something satisfying about seeing your Google Analytics statistics go up. So how does it work? I'm still learning, but here are some of the techniques I'm using that you might want to try:

- Answering questions on MSDN forums and StackOverflow. Create and maintain your profiles on these forums and when answering questions link to one of your blog posts if it's genuinely helpful. Answering questions is also a great way to understand where others are having problems and where a timely blog post might help.

- Comment on other bloggers' posts. I follow about 70 blogs, and several times a week there might be an opportunity to comment and link back to a relevant post of your own.

- Link your blog to CodeProject if your content fits that site (instructions on how to link are here). Once connected, the quickest way to have a post picked up is to create a WordPress category called CodeProject and assign it to the post.

- Use Jetpack's Publicize module to automatically share your posts on Twitter, Facebook, LinkedIn and Google+. I'm still working out the value of doing this (my family are bemused), but it's automatic so what the heck? In all seriousness, if you are looking to promote your blog in a big way then social media is probably going to be important for you.

That's pretty much where I have got to on my blogging-with-WordPress journey to date. Looking back, I've hugely enjoyed getting all this configured and sorted out. Concepts that were once very hazy are now a little less so, and I have learned a huge amount. I'm sure I've left out lots of important bits, and maybe you have your own thoughts as to which plugins are must-haves. Do share through the comments!

Cheers -- Graham

Getting Started with PowerShell DSC

Whenever I explain to people the common failure points for the deployment of an application I'll often draw a triangle. One point is for application code, another for application configuration and the third for server configuration. (Of course there are plenty of other ways for a deployment to fail, but if it's because the power to your server room has failed you have a different class of problem.) Minimising the chances of application code being the culprit starts with good coding practices such as appropriate use of design patterns, test-driven development or similar; the list goes on and everyone will have their view. This continues with practising continuous integration and deploying code through a delivery pipeline using a tool such as Release Management for Visual Studio, which can manage an application's configuration between environments. But how do you manage server configuration? In many organisations initial server configuration is done by hand, possibly using a build list. Over time tweaks are made by different technicians until eventually the server becomes a work of art: a one-off that nobody could reliably reproduce.

The answer to all this is tooling that implements configuration as code. Typically this means declaring in a code file what you want a server's configuration to look like and then leaving some other component to figure out how to achieve that, and to correct any deviations that might occur. This is in contrast to an imperative build script, where you prescribe each step that should happen but have to take care of error handling, and any other factors that could cause issues, yourself.
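Here is a minimal Python sketch of the declarative idea. It is not PowerShell DSC and does not use any DSC API. The `DESIRED` dictionary, the setting names in it and the `plan` and `reconcile` functions are all hypothetical. Changes are only simulated on a dictionary rather than applied to a real server.

You declare the end state, and a separate routine works out which changes are needed. Running it after drift corrects the deviation; running it against an already-compliant server does nothing.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical declared state: what the server *should* look like.
# A value of None means the item must be absent.
DESIRED: Dict[str, Optional[str]] = {
    "feature:Web-Server": "installed",
    "service:W3SVC": "running",
    "folder:C:\\inetpub\\myapp": "present",
    "feature:Telnet-Client": None,
}

Change = Tuple[str, str, Optional[str]]  # (action, key, value)


def plan(desired: Dict[str, Optional[str]], current: Dict[str, str]) -> List[Change]:
    """Compare declared state with actual state and list the changes needed.

    An empty list means the server already matches the declaration.
    Items present in `current` but not mentioned in `desired` are left alone.
    """
    changes: List[Change] = []
    for key, want in desired.items():
        have = current.get(key)
        if want is None:
            if have is not None:
                changes.append(("remove", key, None))
        elif have != want:
            changes.append(("set", key, want))
    return changes


def reconcile(desired: Dict[str, Optional[str]], current: Dict[str, str]) -> Dict[str, str]:
    """Simulate applying the planned changes; returns a new state dictionary."""
    new_state = dict(current)
    for action, key, value in plan(desired, current):
        if action == "remove":
            new_state.pop(key, None)
        else:
            new_state[key] = value  # value is always a str for "set" actions
    return new_state


if __name__ == "__main__":
    # A drifted server: the web service was stopped by hand and an
    # unwanted feature was installed.
    drifted = {
        "feature:Web-Server": "installed",
        "service:W3SVC": "stopped",
        "feature:Telnet-Client": "installed",
    }
    print(plan(DESIRED, drifted))  # three corrective changes
    fixed = reconcile(DESIRED, drifted)
    print(plan(DESIRED, fixed))    # → []
```

`plan` only compares states, so it doubles as a drift report. Because `reconcile` is driven entirely by that comparison, applying it repeatedly is idempotent. An imperative script would instead encode the steps themselves and would have to handle the 'already installed' or 'already running' cases one by one.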